Hi, I am new to PyTorch and am trying to run my model on the GPU. The Jupyter notebook successfully detects my GPU:

```python
if torch.cuda.is_available():
    self.device = torch.device("cuda:0")  # you can continue going on here, like cuda:1, cuda:2, etc.
    print("Running on the GPU")
    torch.cuda.set_device(0)
else:
    self.device = torch.device("cpu")
    print("Running on the CPU")
```

```
Running on the GPU
```
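The same selection logic can also be written as a small standalone function so the device is picked once and reused. This is a minimal sketch; `pick_device` is a hypothetical helper name, not a PyTorch API, and it falls back to the CPU if the requested GPU index does not exist.

```python
import torch

def pick_device(index: int = 0) -> torch.device:
    """Hypothetical helper: return cuda:<index> if that GPU is usable, else the CPU."""
    if torch.cuda.is_available() and index < torch.cuda.device_count():
        return torch.device(f"cuda:{index}")
    return torch.device("cpu")

device = pick_device()
print(f"Running on {device}")
```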

```python
print(torch.cuda.current_device())
print(torch.cuda.get_device_name(torch.cuda.current_device()))
```

```
0
GeForce RTX 2060
```
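When debugging GPU detection, it can help to print a few more facts at once, such as whether the installed PyTorch build includes CUDA at all. A sketch, assuming only the standard `torch.cuda` and `torch.version` attributes; `describe_cuda` is a hypothetical helper.

```python
import torch

def describe_cuda() -> dict:
    """Hypothetical helper: collect basic CUDA facts for debugging."""
    info = {
        "torch_version": torch.__version__,
        "built_with_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),  # 0 when CUDA is unavailable
    }
    if info["cuda_available"]:
        idx = torch.cuda.current_device()
        info["current_device"] = idx
        info["device_name"] = torch.cuda.get_device_name(idx)
    return info

for key, value in describe_cuda().items():
    print(f"{key}: {value}")
```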

I also used `.to(device)` for the model and its inputs:

```python
self.dqn = Network(states_dim, action_dim).to(self.device)
```
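For reference, here is a minimal sketch of moving both the model and a single input to the same device, assuming a gym-style environment that returns NumPy states. The `Network` class below is a hypothetical stand-in (a small MLP), since the original definition is not shown, and the sizes are illustrative. Note that `torch.from_numpy` always produces a CPU tensor, so the input needs its own `.to(device)`.

```python
import numpy as np
import torch
import torch.nn as nn

class Network(nn.Module):
    """Hypothetical stand-in for the poster's Network: a small MLP."""
    def __init__(self, states_dim: int, action_dim: int):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(states_dim, 64),
            nn.ReLU(),
            nn.Linear(64, action_dim),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.layers(x)

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
states_dim, action_dim = 4, 2  # illustrative sizes
dqn = Network(states_dim, action_dim).to(device)

# A NumPy state becomes a CPU tensor; move it explicitly before the forward pass.
state = np.zeros(states_dim, dtype=np.float32)
state_t = torch.from_numpy(state).unsqueeze(0).to(device)

with torch.no_grad():
    q_values = dqn(state_t)
action = int(q_values.argmax(dim=1).item())
```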

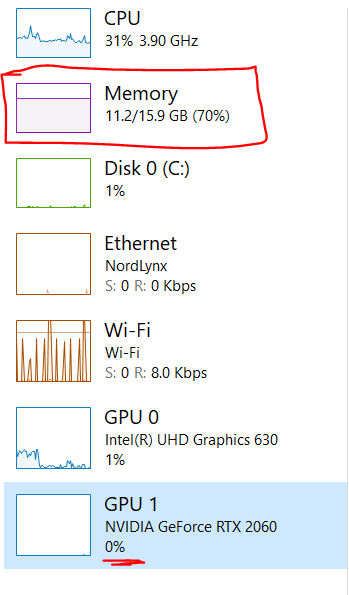

But it is still running on the CPU.
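Since the full training loop is not shown, this is only a guess at the cause, but one common pitfall is a tensor `.to(device)` call whose result is discarded: for tensors, `.to()` returns a new tensor, while `nn.Module.to()` moves the module's parameters in place. A minimal sketch of the difference, plus a hypothetical `report_devices` helper for checking where the model and its inputs actually live:

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.ones(3)
x.to(device)       # returns a new tensor; x itself is unchanged
x = x.to(device)   # reassign so x actually lives on `device`

model = nn.Linear(3, 1)
model.to(device)   # nn.Module.to() moves parameters in place (and returns self)

def report_devices(module: nn.Module, *tensors: torch.Tensor) -> set:
    """Hypothetical helper: set of devices used by the module's parameters and the tensors."""
    devices = {p.device for p in module.parameters()}
    devices.update(t.device for t in tensors)
    return devices

print(report_devices(model, x))  # a single entry means everything is on one device
```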

Thank you for your help.
