Hello,

I trained a CycleGAN and converted it to an ONNX model using the code below (partial):

    net.eval()
    net.cuda()  # in this example using cuda()
    batch_size = 1
    input_shape = (3, 512, 512)
    export_onnx_file = load_filename[:-4] + ".onnx"
    save_path = os.path.join(self.save_dir, export_onnx_file)
    input_names = ["image"]
    output_names = ["pred"]
    dinput = torch.randn(batch_size, *input_shape).cuda()  # same device as net: cuda()
    torch.onnx.export(net, dinput, save_path,
                      input_names=input_names, output_names=output_names,
                      opset_version=11,
                      dynamic_axes={
                          # 'image': {0: 'batch_size'},  # variable length axes
                          'pred': {
                              0: '1',
                              1: '3',
                              2: '512',
                              3: '512'
                          }})  # {0: 'batch_size'}})
    # summary(net, input_shape)
    print('The ONNX file ' + export_onnx_file + ' is saved at %s' % save_path)

My goal is to use the ONNX model in Snap Lens Studio as a lens filter, but every time I import the model I get this error:

18:32:04 Resource import for /Users/youjin/Downloads/latest_net_G (6).onnx failed: The ONNX network's output 'pred' dimensions should be non-negative

I printed the input and output shapes, and everything looks fine to me:

#the last line of torchsummary

Tanh-91 [-1, 3, 512, 512] 0

#print(input.shape)

input shape torch.Size([1, 3, 512, 512])

#print(out.shape)

output shape torch.Size([1, 3, 512, 512])

I googled what ‘negative dimension’ implies and found this thread.

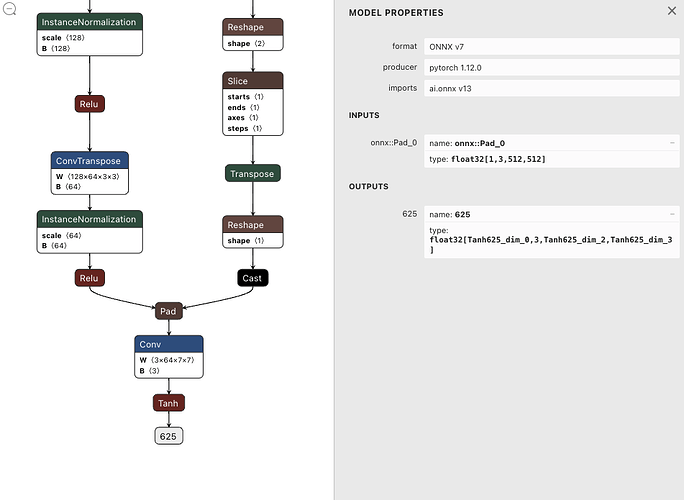

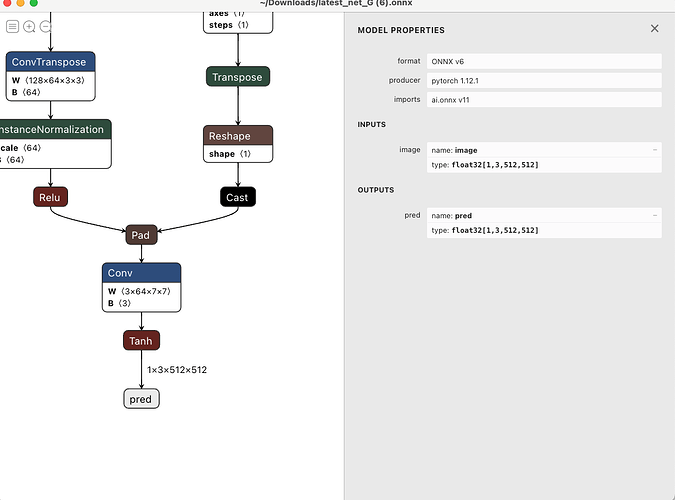

Initially, the output shape was undetermined when I inspected the model in Netron, so I used the dynamic_axes argument of torch.onnx.export to set it to [1, 3, 512, 512]. After that change, the shape looked right in Netron.

I thought that had fixed the error, but I am still getting the same error.
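
For reference, here is a minimal sketch of one way to double-check what Netron shows, assuming the `onnx` Python package is installed. In torch.onnx.export, the strings passed in dynamic_axes are axis names rather than sizes, so an axis named '512' is exported as a symbolic dimension that merely displays as 512. The helper names `classify_dim` and `output_dims` are hypothetical. Whether Lens Studio rejects symbolic output dimensions is an assumption here, not something I have confirmed.

```python
def classify_dim(dim_value=None, dim_param=None):
    """Label one ONNX dimension as symbolic, fixed, or unknown."""
    if dim_param:
        # A named (dynamic) axis: no concrete size is stored in the file.
        return f"symbolic({dim_param!r})"
    if dim_value is not None and dim_value > 0:
        # A concrete integer size is stored in the file.
        return f"fixed({dim_value})"
    return "unknown"


def output_dims(onnx_path):
    """Return {output_name: [label, ...]} for every graph output."""
    import onnx  # imported lazily so classify_dim works without onnx

    model = onnx.load(onnx_path)
    result = {}
    for out in model.graph.output:
        labels = []
        for dim in out.type.tensor_type.shape.dim:
            # Each dimension holds either dim_value (int) or dim_param (str).
            kind = dim.WhichOneof("value")
            if kind == "dim_param":
                labels.append(classify_dim(dim_param=dim.dim_param))
            elif kind == "dim_value":
                labels.append(classify_dim(dim_value=dim.dim_value))
            else:
                labels.append(classify_dim())
        result[out.name] = labels
    return result
```

Calling `output_dims(save_path)` on the exported file would show whether 'pred' carries fixed integer sizes or only named symbolic axes.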

I wonder if I can get any help.