First, I placed the slicing operation outside the `with torch.no_grad()` scope. In this case, the classifier's loss does not decrease and its predictions are wrong.

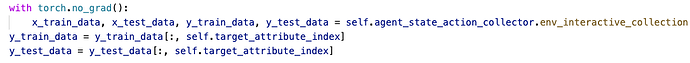

Then I moved the slicing operation inside the `with torch.no_grad()` scope, and the classifier behaves normally.

Why does the classifier behave abnormally in the first case?