I have a module that has only a single parameter:

    import torch
    import torch.nn as nn

    class MoR(nn.Module):
        def __init__(self, work=False):
            super().__init__()
            self.sparsity = 0
            self.scores = nn.Parameter(torch.tensor(-4.0))

I usually insert it before a fully connected layer.

If I create a new model like this:

    class LinearModel(nn.Module):
        def __init__(self):
            super(LinearModel, self).__init__()
            self.mor1 = MoR(work=True)
            self.relu1 = nn.ReLU()  # add a ReLU activation layer
            self.linear1 = nn.Linear(1, 1)  # a simple linear layer
            self.mor2 = MoR(work=True)
            self.relu2 = nn.ReLU()  # add a ReLU activation layer
            self.linear2 = nn.Linear(1, 1)

        def forward(self, x):
            x = self.mor1(x)
            x = self.linear1(x)
            x = self.relu1(x)  # apply ReLU activation
            x = self.mor2(x)
            x = self.linear2(x)
            x = self.relu2(x)  # apply ReLU activation
            return x

it trains normally.

But if I insert it into an LLM like this:

    class MoRMlp(nn.Module):
        def __init__(self, mlp: LlamaMLP):
            super().__init__()
            self.gate_proj = mlp.gate_proj
            self.down_proj = mlp.down_proj
            self.up_proj = mlp.up_proj
            self.act_fn = mlp.act_fn
            self.input_mor = MoR()
            self.down_mor = MoR()
            self.is_train = False

        def forward(self, x):
            x = self.input_mor(x)
            hidden_states = self.gate_proj(x)
            hidden_states = self.act_fn(hidden_states)
            hidden_states = hidden_states * self.up_proj(x)
            hidden_states = self.down_mor(hidden_states)
            hidden_states = self.down_proj(hidden_states)
            return hidden_states

it doesn't train.

I'm confused because the threshold, which is computed with sigmoid from the scores parameter, has requires_grad set to False.
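To narrow this down, here is a minimal standalone sketch of the same pattern. `TinyMoR` is a hypothetical stand-in for my module, and the `torch.no_grad()` construction is only an assumption about how the model might be built when it is wrapped into the LLM; I have not confirmed that this is what happens in my setup. It compares a threshold computed once in `__init__` with one recomputed from the parameter afterwards.

```python
import torch
import torch.nn as nn


class TinyMoR(nn.Module):
    """Hypothetical stand-in for MoR: one scalar parameter plus a threshold."""

    def __init__(self):
        super().__init__()
        self.scores = nn.Parameter(torch.tensor(-4.0))
        # Computed once at construction time, as in my module.
        self.threshold = torch.sigmoid(self.scores)

    def current_threshold(self):
        # Recomputed from the parameter on every call.
        return torch.sigmoid(self.scores)


# Built with gradient tracking enabled (the default).
normal = TinyMoR()

# Built while gradient tracking is disabled (assumed scenario).
with torch.no_grad():
    built_without_grad = TinyMoR()

results = {
    "init_normal": normal.threshold.requires_grad,
    "init_no_grad": built_without_grad.threshold.requires_grad,
    "recomputed": built_without_grad.current_threshold().requires_grad,
}
print(results)
# {'init_normal': True, 'init_no_grad': False, 'recomputed': True}
```

In this sketch the parameter itself always requires grad; only the tensor cached in `__init__` under `torch.no_grad()` does not, and recomputing it from `self.scores` with gradients enabled gives a tensor that is attached to the graph again.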

The full MoR module is:

class MoR(nn.Module):

def __init__(self,work = False):

super().__init__()

self.sparsity = 0

self.scores = nn.Parameter(torch.tensor(-4.0))

self.f = torch.sigmoid

self.threshold = self.f(self.scores)

self.min_loss = float('inf')

self.sparsity_best = 0 # used for training

self.sparsity_loss = 0

self.recon_loss = 0

self.work = work

self.num = 0

self.sparsity_avg = 0

def forward(self,inputs:torch.Tensor):

if self.work:

# get mask

mask = self.generate_mask(inputs)

if torch.sum(torch.isnan(inputs)).bool():

import pdb

pdb.set_trace()

self.get_sparsity(inputs)

self.update_sparsity_avg()

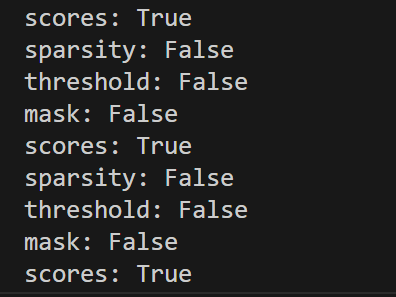

print("mask:", mask.requires_grad)

print("scores:", self.scores.requires_grad)

print("sparsity:", self.sparsity.requires_grad)

print("threshold:", self.threshold.requires_grad)

return inputs * mask

the result is :

Any help would be appreciated. Thank you.