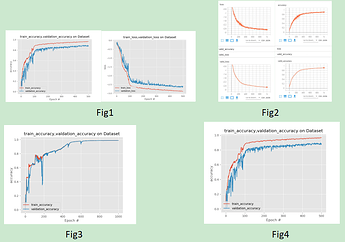

Hi, I am new to PyTorch. I find that when I use the same data for training and validation, the train and validation results have a big gap (fig1).

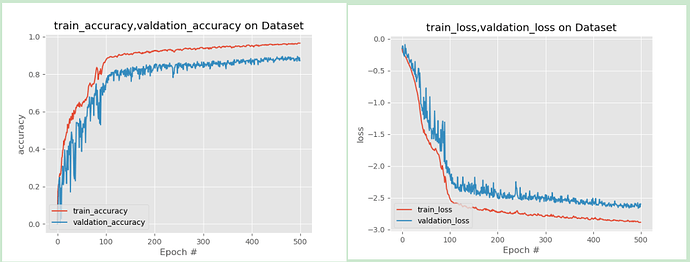

When I use the same params to do this with TensorFlow, the train and validation results are the same (fig2).

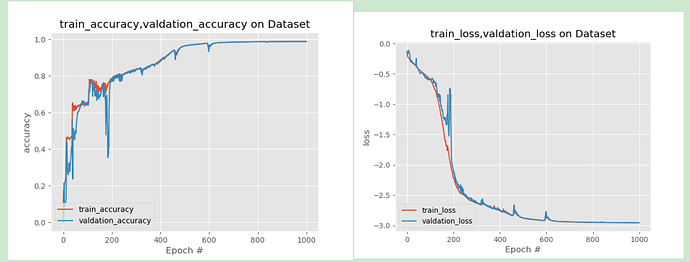

At the same time, I did another experiment, using the same single sample for both training and validation (fig3),

and another using the same five samples for both training and validation (fig4).

It’s strange that the results are different.

Here is my project: GitHub - junqiangchen/PytorchDeepLearing: Meidcal Image Segmentation Pytorch Version

Could you explain the issue in more detail and also post the figures separately with their own descriptions? It’s currently hard to tell which values the curves have (e.g. it’s unclear to me whether the losses in Fig. 2 are different or equal).

Also, I don’t understand the difference between Fig. 1 and 3 as apparently both are using “same data for train and validation”.

BN, Dropout, and other ops that behave differently during training and testing may cause this problem. Could you fix them and try again?
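To illustrate the point above, here is a minimal sketch showing that the same input can produce different outputs in `train()` and `eval()` mode when BN and Dropout are present. The toy block below is a hypothetical stand-in, not the UNet3d from the linked project, and the tensor shape is an arbitrary assumption.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy block (assumption): a stand-in for one stage of a 3D network.
model = nn.Sequential(
    nn.Conv3d(1, 4, kernel_size=3, padding=1),
    nn.BatchNorm3d(4),
    nn.ReLU(),
    nn.Dropout3d(p=0.5),
)

# Arbitrary small volume batch: (batch, channels, depth, height, width).
x = torch.randn(2, 1, 8, 8, 8)

# Training mode: BN normalizes with the statistics of the current batch
# (and updates its running statistics); Dropout randomly zeroes channels.
model.train()
with torch.no_grad():
    out_train = model(x)

# Evaluation mode: BN normalizes with the running statistics; Dropout is a no-op.
model.eval()
with torch.no_grad():
    out_eval = model(x)
    out_eval_again = model(x)

max_diff = (out_train - out_eval).abs().max().item()
print(f"max |train - eval| = {max_diff:.4f}")
print("eval is deterministic:", torch.equal(out_eval, out_eval_again))
```

If the training metric is computed from outputs in `train()` mode and the validation metric from outputs in `eval()` mode, a gap like this can appear even when both use the same data.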

I want to use UNet3d to segment multiple targets, but I find that the validation result is always lower than the training result,

so I did some experiments.

In the first one, I used the same 5-volume dataset for both training and validation, but the loss and accuracy show a gap, like this:

In the other one, I used only a single volume for both training and validation, and the loss and accuracy are the same, like this:

So it’s strange that, depending on the dataset size, the train and validation results differ even though the same data is used for both.

P.S. The network is UNet3d with BN layers, and the loss is multi-class Dice.

Thank you!

The UNet3d only uses BN, and the optimizer is Adam with default params.

I found the problem: BN is not stable when the batch size is too small, so I changed BN to GN, and now the train and validation results are the same.
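A minimal sketch of why this swap can help, assuming a batch size of 1 and arbitrary channel and group counts (none of these values come from the linked project): `nn.GroupNorm` computes its statistics per sample and keeps no running averages, so it gives the same output in `train()` and `eval()` mode, while `nn.BatchNorm3d` does not.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


def train_eval_gap(norm: nn.Module, x: torch.Tensor) -> float:
    """Return the max absolute difference between train-mode and eval-mode outputs."""
    norm.train()
    with torch.no_grad():
        y_train = norm(x)
    norm.eval()
    with torch.no_grad():
        y_eval = norm(x)
    return (y_train - y_eval).abs().max().item()


# Assumed shapes: batch size 1, 8 channels, a 4x4x4 volume.
# The input is shifted and scaled so it is far from zero mean and unit variance.
x = torch.randn(1, 8, 4, 4, 4) * 3.0 + 2.0

# BN: train mode uses batch statistics, eval mode uses running statistics,
# which are still close to their initial values after a single update.
bn_gap = train_eval_gap(nn.BatchNorm3d(8), x)

# GN: statistics are computed per sample over channel groups in both modes.
gn_gap = train_eval_gap(nn.GroupNorm(num_groups=4, num_channels=8), x)

print(f"BatchNorm3d train/eval gap: {bn_gap:.4f}")
print(f"GroupNorm   train/eval gap: {gn_gap:.6f}")
```

In a real model, replacing each `nn.BatchNorm3d(c)` with `nn.GroupNorm(g, c)` requires `c` to be divisible by `g`; the best group count is a tuning choice.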