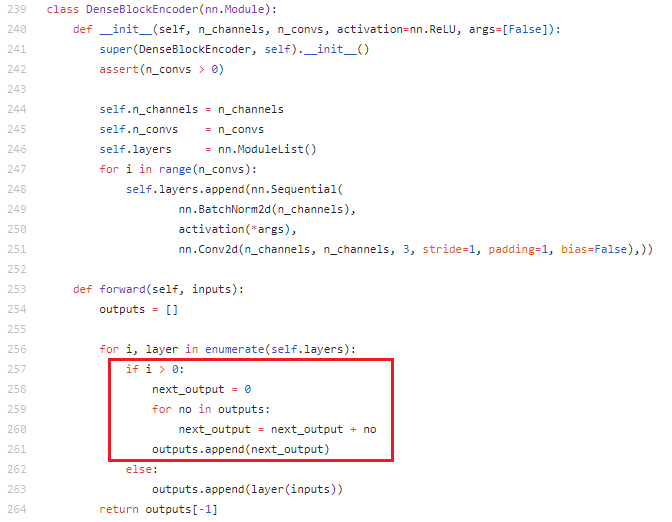

I recently came across an unconventional dense block implementation, and what puzzles me is the author's use of nn.ModuleList. The implementation is shown below.

I think only the first nn.Sequential in layers (the nn.ModuleList) is actually used. Is that right? I'd appreciate any help understanding it. Thanks!

Link: DeformingAutoencoders-pytorch/DAENet.py at master · zhixinshu/DeformingAutoencoders-pytorch · GitHub

I’m not familiar with the code base and am not sure how the model is supposed to work, but you could use forward hooks to check which layer is being called:

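    import torch

    # DenseBlockEncoder is defined in DAENet.py from the linked repository
    model = DenseBlockEncoder(1, 3)

    def print_layer(name):
        # Build a forward hook that prints the layer's name when it runs
        def hook(module, input, output):
            print("{} called".format(name))
        return hook

    # Attach one hook to every entry of the nn.ModuleList
    for idx in range(len(model.layers)):
        model.layers[idx].register_forward_hook(print_layer(str(idx)))

    x = torch.randn(1, 1, 4, 4)
    model(x)
    # Output: 0 called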

In this example, only the first sequential block is being called.
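If you want to try the same check without cloning the repository, here is a minimal self-contained sketch. `ToyBlock` is a hypothetical stand-in I made up for illustration, not the actual `DenseBlockEncoder`; it only assumes the same pattern, where an nn.ModuleList holds several nn.Sequential blocks but `forward` indexes just the first one. The hooks append to a list instead of printing, so the result can be inspected afterwards.

```python
import torch
import torch.nn as nn


class ToyBlock(nn.Module):
    """Hypothetical stand-in, not the DenseBlockEncoder from the repo."""

    def __init__(self):
        super().__init__()
        # Both blocks are registered as submodules via nn.ModuleList
        self.layers = nn.ModuleList([
            nn.Sequential(nn.Conv2d(1, 1, 3, padding=1), nn.ReLU()),
            nn.Sequential(nn.Conv2d(1, 1, 3, padding=1), nn.ReLU()),
        ])

    def forward(self, x):
        # Assumed pattern: only the first entry is ever called
        return self.layers[0](x)


called = []  # names of the blocks whose forward pass actually ran


def record_layer(name):
    def hook(module, inputs, output):
        called.append(name)
    return hook


model = ToyBlock()
handles = [
    layer.register_forward_hook(record_layer(str(idx)))
    for idx, layer in enumerate(model.layers)
]

out = model(torch.randn(1, 1, 4, 4))
print(called)

# Remove the hooks once the check is done
for handle in handles:
    handle.remove()
```

nn.ModuleList only registers the submodules (so their parameters show up in `model.parameters()`); it does not call them on its own, so a block that `forward` never indexes will never trigger its hook.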

Thank you so much! That means what I thought is right.