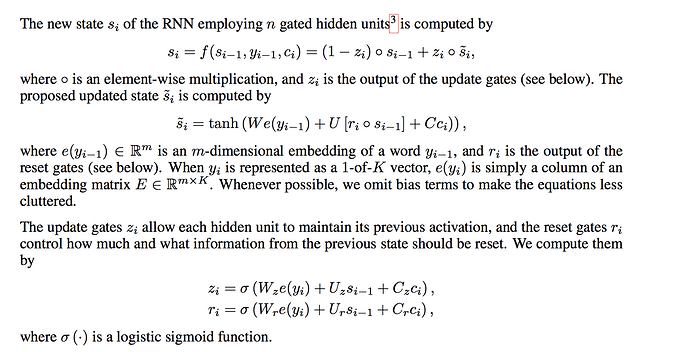

This is about the ‘ml align and translate’ paper. I know they use a GRU, whereas I’m just using a generic non-gated RNN for simplicity. But I’m pondering how to integrate the context vector into a standard RNN. They write:

I’m thinking of doing something like:

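    class Decoder(object):
        def forward(self, input, context, state):
            # Project the context into the input space, add it to the input,
            # then hand the sum to an ordinary RNN step.
            return self.rnn(input + self.W @ context, state)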

This way I can just reuse existing RNN classes.
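To make the idea concrete, here is a minimal runnable sketch, assuming a plain tanh RNN cell. `VanillaRNN`, the helper functions, the sizes, and the random initialisation are all made up for illustration (they stand in for whatever existing RNN class gets reused), and it uses plain Python lists instead of a tensor library just to stay dependency-free.

```python
import math
import random


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng):
    """Small random weights; the init range is an arbitrary choice."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class VanillaRNN:
    """Hypothetical stand-in for an existing non-gated RNN cell."""

    def __init__(self, input_size, hidden_size, rng):
        self.W_xh = rand_matrix(hidden_size, input_size, rng)
        self.W_hh = rand_matrix(hidden_size, hidden_size, rng)

    def __call__(self, x, state):
        # h_t = tanh(W_xh x_t + W_hh h_{t-1})
        a = matvec(self.W_xh, x)
        b = matvec(self.W_hh, state)
        return [math.tanh(p + q) for p, q in zip(a, b)]


class Decoder:
    def __init__(self, input_size, context_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.rnn = VanillaRNN(input_size, hidden_size, rng)
        # W maps the context vector into the input space so the two can be added.
        self.W = rand_matrix(input_size, context_size, rng)

    def forward(self, input, context, state):
        projected = matvec(self.W, context)
        mixed = [x + c for x, c in zip(input, projected)]
        # The RNN itself is untouched; it just sees a context-shifted input.
        return self.rnn(mixed, state)


decoder = Decoder(input_size=4, context_size=6, hidden_size=3)
new_state = decoder.forward([0.1] * 4, [0.5] * 6, [0.0] * 3)
```

One thing I noticed while writing it out: since W_xh (x + W c) = W_xh x + (W_xh W) c, this amounts to giving the context its own weight matrix inside the RNN, just factored through the input space, so its rank is capped by the input size.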

Thoughts?