Hello,

I can’t believe how long it has taken me to get an LSTM working in PyTorch, and I still can’t believe that I haven’t managed to finish this work in PyTorch.

My final goal is to build an LSTM model for time-series prediction:

- Not just one-step prediction, but a multistep prediction model

- So it should work for recursive prediction, where the model’s own outputs are fed back in as inputs

- The dataset is the power consumption dataset (http://www.cs.ucr.edu/~eamonn/discords/)

- Ultimately I want to apply it to anomaly detection (my final, final, final goal) (https://www.elen.ucl.ac.be/Proceedings/esann/esannpdf/es2015-56.pdf)
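To make "recursive prediction" concrete, here is a minimal sketch of the idea in plain Python. It is not my actual code: `recursive_forecast`, `one_step`, and `mean_model` are made-up names for illustration, and the "model" is a toy stand-in for a trained one-step predictor.

```python
from typing import Callable, List, Sequence


def recursive_forecast(one_step: Callable[[Sequence[float]], float],
                       history: Sequence[float],
                       n_steps: int) -> List[float]:
    """Predict n_steps ahead by feeding each prediction back as input."""
    window = list(history)
    predictions = []
    for _ in range(n_steps):
        next_value = one_step(window)
        predictions.append(next_value)
        # Slide the window: drop the oldest value, append the prediction.
        window = window[1:] + [next_value]
    return predictions


# Toy stand-in for a trained model: predicts the mean of the window.
def mean_model(window: Sequence[float]) -> float:
    return sum(window) / len(window)


forecast = recursive_forecast(mean_model, [1.0, 2.0, 3.0], n_steps=2)
```

Because every step after the first depends on an earlier prediction, errors can accumulate over the horizon, which is why I am training with scheduled sampling rather than pure teacher forcing.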

I created my custom dataset based on https://discuss.pytorch.org/t/understanding-lstm-input/31110

and built an LSTM model that uses scheduled sampling (https://arxiv.org/abs/1506.03099).

The decay schedule is the linear one.

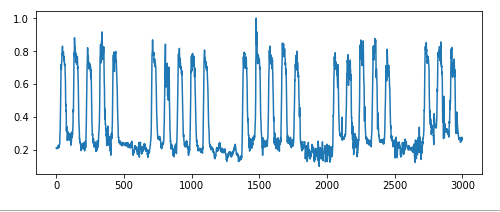

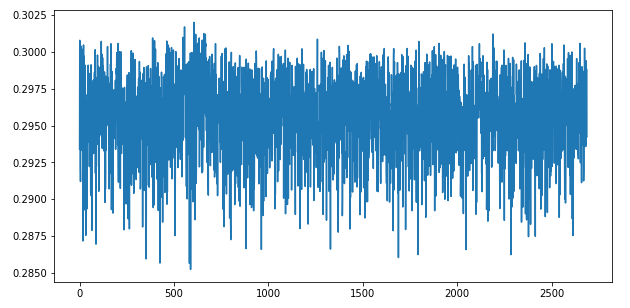
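For reference, the linear decay schedule in the scheduled sampling paper has the form max(ε, k − c·i). Below is a rough sketch of how that can drive the choice between teacher forcing and free running. It is only an illustration and not my `get_population_weight` function: the names `teacher_forcing_prob` and `pick_input_source`, the default values, and the choice to decay per epoch (the paper decays per mini-batch) are all assumptions.

```python
import random


def teacher_forcing_prob(epoch: int, start: float = 1.0,
                         end: float = 0.0,
                         decay_per_epoch: float = 0.05) -> float:
    """Linear decay: max(end, start - decay_per_epoch * epoch)."""
    return max(end, start - decay_per_epoch * epoch)


def pick_input_source(epoch: int, rng: random.Random) -> str:
    """Return "T" (teacher forcing) or "F" (free running) for one step."""
    p_teacher = teacher_forcing_prob(epoch)
    # rng.random() is in [0, 1), so p_teacher == 1.0 always gives "T"
    # and p_teacher == 0.0 always gives "F".
    return "T" if rng.random() < p_teacher else "F"


rng = random.Random(0)
early_choice = pick_input_source(epoch=0, rng=rng)
late_choice = pick_input_source(epoch=100, rng=rng)
```

With these assumed defaults the model is always fed the ground truth at epoch 0 and always fed its own prediction from epoch 20 onward.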

The problem is that, before I can even get to multistep prediction, I am stuck at the training stage. When I plot the predicted values obtained during training, the result is a complete mess.

I have looked at many GitHub repositories, including the official PyTorch examples (https://github.com/pytorch/examples/tree/master/time_sequence_prediction, https://github.com/chickenbestlover/RNN-Time-series-Anomaly-Detection), and I have read a lot of topics related to LSTMs, but I could not solve my problem. I even sent an e-mail to the author of one of the GitHub repositories, but he said that he had not completed multistep prediction for the power demand dataset either.

According to http://kth.diva-portal.org/smash/record.jsf?pid=diva2%3A1149130&dswid=3859,

a stateful LSTM should be used because a dataset such as power demand has a long weekly cycle. So I have made various attempts to carry the hidden state and cell state over from one batch to the next, instead of re-initializing them to 0 every time.
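As far as I understand it, carrying the state over only makes sense if row i of each batch continues where row i of the previous batch stopped, and if the batches are not shuffled. Here is a small sketch of that data layout in plain Python. It is an illustration only and not my dataset code: `stateful_batches` is a made-up helper.

```python
from typing import List, Sequence


def stateful_batches(series: Sequence[float], batch_size: int,
                     seq_len: int) -> List[List[List[float]]]:
    """Split a series so row i of batch k+1 continues row i of batch k."""
    # Cut the series into batch_size parallel, contiguous streams.
    stream_len = len(series) // batch_size
    streams = [series[i * stream_len:(i + 1) * stream_len]
               for i in range(batch_size)]
    # Walk all streams in lockstep, seq_len values at a time.
    n_batches = stream_len // seq_len
    return [[list(stream[k * seq_len:(k + 1) * seq_len])
             for stream in streams]
            for k in range(n_batches)]


batches = stateful_batches(list(range(12)), batch_size=2, seq_len=3)
# batches[0] == [[0, 1, 2], [6, 7, 8]]
# batches[1] == [[3, 4, 5], [9, 10, 11]]
```

With this layout, the state left behind by `[0, 1, 2]` is the right starting state for `[3, 4, 5]`, and likewise for the second row.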

I’ve been on this for over a month and I’m exhausted. I would like to post all of my code, but it would be too long, so I am posting the training function and the LSTM model first. If other parts of the code are needed, I can upload more. I need help from anyone who knows LSTMs well.

    def train_data(model, lookahead, hidden_, cell_, dataloader, epoch, batch_size, device):
        model.train()
        total_loss = 0
        pred_list = []
        realY_list = []
        optimizer = optim.Adam(model.parameters(), lr=learning_rate)

        for iteration, (X, y) in enumerate(dataloader):
            iter_pred = []
            # get output from teacher forcing or free learning train
            optimizer.zero_grad()
            X = X.transpose(0, 1).float().to(device)
            y_true = y.float().to(device)
            model_out, hidden_, cell_ = model(X, hidden_, cell_)
            iter_pred.append(model_out)

            for i in range(lookahead-1):
                '''For scheduled sampling (Teacher Forcing or Free Learning)'''
                '''Select T or F'''
                population, weights = get_population_weight(args, epoch)
                teacher_or_free = choice(population, weights)
                # print("Teacher or Free =>", teacher_or_free)
                if teacher_or_free == "T":
                    y_teacher = y_true[:, i].reshape(1, batch_size, 1)
                    model_out, hidden_, cell_ = model(y_teacher, hidden_, cell_)
                    iter_pred.append(model_out)
                elif teacher_or_free == "F":
                    y_free = model_out.reshape(1, batch_size, 1)
                    model_out, hidden_, cell_ = model(y_free, hidden_, cell_)
                    iter_pred.append(model_out)

            y_pred = torch.cat(iter_pred, dim=0)
            y_true = y_true.view(-1)
            loss = loss_function(y_pred, y_true)
            loss.backward()
            optimizer.step()
            total_loss += loss
            pred_list.append(y_pred)
            realY_list.append(y_true)

        print(epoch, "epoch")
        print("loss =", total_loss)
        return realY_list, pred_list, y_pred, hidden_, cell_


    class LSTM(nn.Module):

        def __init__(self, input_dim, hidden_dim, output_dim, batch_size, num_layers, dropout, use_bn, device):
            super(LSTM, self).__init__()
            self.input_dim = input_dim
            self.hidden_dim = hidden_dim
            self.output_dim = output_dim
            self.batch_size = batch_size
            self.num_layers = num_layers
            self.device = device
            self.dropout = dropout
            self.use_bn = use_bn

            # Define the LSTM layer
            self.lstm = nn.LSTM(self.input_dim, self.hidden_dim, self.num_layers)

            # Define the output layer
            self.regressor = self.make_regressor()

        def make_regressor(self):
            layers = []
            if self.use_bn:
                layers.append(nn.BatchNorm1d(self.hidden_dim))
            layers.append(nn.Dropout(self.dropout))
            layers.append(nn.Linear(self.hidden_dim, self.hidden_dim // 2))
            layers.append(nn.ReLU())
            layers.append(nn.Linear(self.hidden_dim // 2, self.output_dim))
            regressor = nn.Sequential(*layers)
            return regressor

        def forward(self, lstm_input, hidden, cell):
            hidden_ = hidden.detach()
            cell_ = cell.detach()
            y_pred, (hidden_out, cell_out) = self.lstm(lstm_input, (hidden_, cell_))
            lstm_out = y_pred
            y_pred = self.regressor(lstm_out[-1].view(self.batch_size, -1))
            return y_pred, hidden_out, cell_out

ANY help would be appreciated…Thanks…