Hi @ptrblck, thanks for the quick response! My CPU memory leak is quite hard to explain. I expanded on it briefly in a new, more specific topic (CPU Memory leak but only when running on specific machine), which I could merge with this one if that makes more sense.

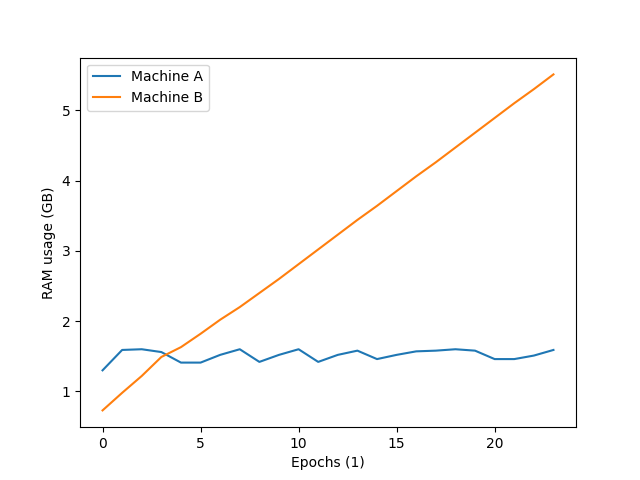

In short, when I run my code on one machine (let's say machine B), memory usage slowly increases by around 200 MB to 400 MB per epoch. Running the same code on a different machine (machine A) doesn't leak memory at all. I've just rechecked this to make sure I'm not doing something stupid: I ran the same code (extracted from the same .tar.gz file) on both machines, and one leaks memory while the other doesn't. I've attached a graph above for a more visual comparison.
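For anyone who wants to reproduce the comparison, here is a minimal sketch of how the per-epoch memory numbers could be logged. It assumes Linux (both machines run Ubuntu) and reads the current resident set size (VmRSS) from `/proc/self/status`; the helper names `current_rss_mb` and `per_epoch_growth` are hypothetical and not taken from my actual script.

```python
import resource
import sys


def current_rss_mb() -> float:
    """Return this process's resident set size in MB (hypothetical helper).

    On Linux this reads the current VmRSS from /proc/self/status. Elsewhere it
    falls back to the *peak* RSS from getrusage, which never decreases and is
    therefore only a rough signal for leak hunting.
    """
    try:
        with open("/proc/self/status") as f:
            for line in f:
                if line.startswith("VmRSS:"):
                    # The line looks like "VmRSS:   123456 kB".
                    return int(line.split()[1]) / 1024.0
    except OSError:
        pass
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in kilobytes on Linux but in bytes on macOS.
    return peak / (1024.0 * 1024.0) if sys.platform == "darwin" else peak / 1024.0


def per_epoch_growth(readings):
    """Differences (MB) between consecutive end-of-epoch RSS readings."""
    return [later - earlier for earlier, later in zip(readings, readings[1:])]
```

Calling `current_rss_mb()` once at the end of each epoch on both machines and passing the list to `per_epoch_growth` gives the per-epoch increase directly; a steady positive value on one machine and roughly zero on the other is the pattern shown in the graph.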

Given that both machines run the same scripts, the only differences I can think of are the environments and perhaps the OS. Machine A runs Ubuntu 18.04 in a Conda environment (PyTorch 1.7.1 with CUDA 11); machine B runs Ubuntu 20.04 in a pip environment (PyTorch 1.8.1+cpu). I did try running machine B with a Conda environment, but I got a similar memory leak. I'm assuming it is an actual leak and not something in my script that stores a computational graph in a container (like you said), because if that were the case, surely it would happen on both machines?
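Since the environments are the main suspect, a quick way to line them up is to dump the versions on each machine and diff them. This is a minimal stdlib-only sketch; `env_summary` and `diff_envs` are hypothetical helpers, and the package list passed in is an assumption you would extend with whatever the script actually imports.

```python
import platform
import sys
from importlib import metadata


def env_summary(packages=("torch", "numpy")):
    """Collect Python/OS info and installed package versions (hypothetical helper)."""
    info = {
        "python": sys.version.split()[0],
        "os": platform.platform(),
    }
    for name in packages:
        try:
            info[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            info[name] = "not installed"
    return info


def diff_envs(a, b):
    """Return only the keys whose values differ between two summaries, as (a, b) pairs."""
    keys = sorted(set(a) | set(b))
    return {k: (a.get(k), b.get(k)) for k in keys if a.get(k) != b.get(k)}
```

Running `env_summary()` on machine A and machine B and feeding both dicts to `diff_envs` would list exactly the mismatches (for example the PyTorch 1.7.1 vs 1.8.1+cpu difference), which narrows down what to swap when trying to make machine B behave like machine A.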

Thank you for the help!