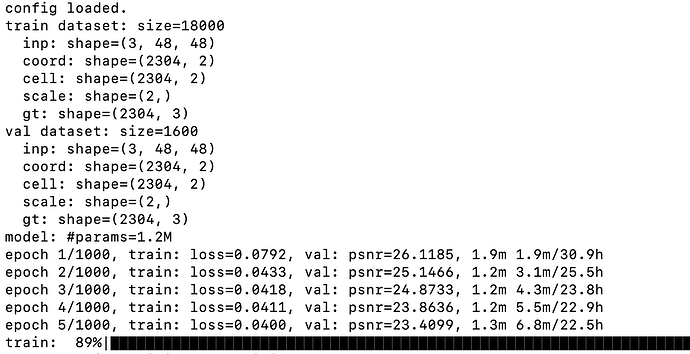

After adding `torch.compile(model)`, the model's performance on the validation set keeps declining as training progresses.
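For context, `psnr` in the training log is peak signal-to-noise ratio, where higher is better. A declining value means the model's outputs are drifting further from the targets. Here is a minimal pure-Python sketch of the usual definition, `10 * log10(max_val**2 / MSE)`. It assumes pixel values normalized to [0, 1]; the repro's actual normalization is not stated.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val**2 / MSE).

    Assumes pred and target are flat sequences of pixel values on the same
    scale, and max_val is the largest possible value (1.0 for [0, 1] images).
    """
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and the same length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical signals have unbounded PSNR
    return 10.0 * math.log10(max_val ** 2 / mse)
```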

When I remove `torch.compile(model)`, training behaves normally again:

epoch 1/1000, train: loss=0.0767, val: psnr=27.5813, 1.4m 1.4m/24.2h

epoch 2/1000, train: loss=0.0438, val: psnr=28.2431, 1.6m 3.0m/25.3h

epoch 3/1000, train: loss=0.0428, val: psnr=28.2909, 1.6m 4.6m/25.5h

epoch 4/1000, train: loss=0.0417, val: psnr=28.4744, 1.6m 6.2m/25.9h

epoch 5/1000, train: loss=0.0406, val: psnr=28.7785, 1.6m 7.8m/26.1h

epoch 6/1000, train: loss=0.0404, val: psnr=28.5917, 1.6m 9.5m/26.3h

epoch 7/1000, train: loss=0.0395, val: psnr=28.6923, 1.6m 11.1m/26.3h

epoch 8/1000, train: loss=0.0393, val: psnr=28.9986, 1.5m 12.6m/26.2h

epoch 9/1000, train: loss=0.0382, val: psnr=28.8534, 1.7m 14.2m/26.4h
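The symptom is a trend over epochs, so it can help to compare the eager and compiled runs numerically rather than by eye. The following sketch parses log lines in the format above and reports the best validation PSNR and how far the last epoch has dropped from it. The function names are hypothetical, and the regex only covers this exact log format.

```python
import re
from typing import List, Tuple

# Matches lines such as:
# "epoch 3/1000, train: loss=0.0428, val: psnr=28.2909, 1.6m 4.6m/25.5h"
LOG_RE = re.compile(
    r"epoch\s+(\d+)/\d+,\s*train:\s*loss=([\d.]+),\s*val:\s*psnr=([\d.]+)"
)


def parse_log(text: str) -> List[Tuple[int, float, float]]:
    """Return (epoch, train_loss, val_psnr) for every matching log line."""
    return [
        (int(m.group(1)), float(m.group(2)), float(m.group(3)))
        for m in LOG_RE.finditer(text)
    ]


def summarize(text: str) -> dict:
    """Report the best validation PSNR and the drop from it at the last epoch."""
    rows = parse_log(text)
    if not rows:
        raise ValueError("no epoch lines found")
    best_epoch, _, best_psnr = max(rows, key=lambda r: r[2])
    last_psnr = rows[-1][2]
    return {
        "epochs": len(rows),
        "best_epoch": best_epoch,
        "best_psnr": best_psnr,
        "drop_from_best": round(best_psnr - last_psnr, 4),
    }
```

Running `summarize` on the logs of both runs gives a quick side-by-side comparison. A compiled run whose `drop_from_best` keeps growing would match the reported regression.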

I'm using torch 2.0, CUDA 11.8 and cuDNN 8700 on an RTX 4090.
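The following sketch collects those versions in one place, for example for a GitHub issue. The cuDNN entry is the integer that `torch.backends.cudnn.version()` returns. This is presumably where a number like 8700 (cuDNN 8.7.0) comes from. PyTorch also ships `python -m torch.utils.collect_env`, which prints a fuller report.

```python
import torch


def environment_report() -> dict:
    """Collect the versions usually asked for in torch.compile bug reports."""
    return {
        "torch": torch.__version__,
        # None on CPU-only builds
        "cuda": torch.version.cuda,
        # Integer such as 8700 for cuDNN 8.7.0; None if cuDNN is unavailable
        "cudnn": torch.backends.cudnn.version(),
        "gpu": torch.cuda.get_device_name(0) if torch.cuda.is_available() else None,
    }


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```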

Do you have a repro you can share?

In the meantime, here are some things you can try:

- Randomness differs between inductor and eager PyTorch, so set this flag to false (see `config.py` in the pytorch/pytorch GitHub repository)

- Try the nightlies; some recent PRs improved determinism: https://pytorch.org/

- If neither of those works, there is likely a bug in some specific operation, and you can run the accuracy minifier to pinpoint it (see "PyTorch 2.0 Troubleshooting" in the PyTorch documentation):

`TORCHDYNAMO_REPRO_AFTER="dynamo" TORCHDYNAMO_REPRO_LEVEL=4`

`TORCHDYNAMO_REPRO_AFTER="aot" TORCHDYNAMO_REPRO_LEVEL=4`
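You can also set those two variables from Python when launching the training script. The following is a minimal sketch with a hypothetical helper `minifier_env`. According to the PyTorch 2.0 troubleshooting guide, `TORCHDYNAMO_REPRO_AFTER="dynamo"` checks the graphs captured by TorchDynamo, and `"aot"` checks them after AOTAutograd. Level 4 targets accuracy failures rather than crashes.

```python
import os
from typing import Dict, Optional


def minifier_env(after: str, base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with the accuracy-minifier variables set.

    after="dynamo" minifies on the graphs TorchDynamo captures;
    after="aot" minifies on the graphs produced by AOTAutograd.
    """
    if after not in ("dynamo", "aot"):
        raise ValueError(f"after must be 'dynamo' or 'aot', got {after!r}")
    env = dict(os.environ if base is None else base)
    env["TORCHDYNAMO_REPRO_AFTER"] = after
    env["TORCHDYNAMO_REPRO_LEVEL"] = "4"  # 4 = minify on accuracy failures
    return env
```

For example, you can run `subprocess.run([sys.executable, "train.py"], env=minifier_env("dynamo"), check=True)`. Here `train.py` is a placeholder for the repro's training script, which must call `torch.compile`.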

Neither of those two suggestions worked. You can reproduce a similar result with this repro.

It's a simple case: just add `model = torch.compile(model)` below here.

After 30 training epochs, the model starts testing. With `torch.compile` added, the test accuracy does not increase, while the version without `torch.compile` behaves normally.
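Besides the minifier, the PyTorch troubleshooting guide suggests bisecting by backend. `backend="eager"` runs only TorchDynamo graph capture, `backend="aot_eager"` adds AOTAutograd, and the default `"inductor"` adds code generation. The following minimal sketch compares one training step against eager mode. It uses a hypothetical small conv model, not the repro's. If the debug backends match eager but `"inductor"` does not (that backend needs a GPU with Triton or a C++ compiler), the generated kernels are the likely culprit.

```python
import torch
import torch._dynamo
import torch.nn as nn


def make_model(seed: int = 0) -> nn.Module:
    # Hypothetical stand-in for the repro's model. It has no dropout, so
    # eager and compiled runs should not diverge because of RNG differences.
    torch.manual_seed(seed)
    return nn.Sequential(
        nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(), nn.Conv2d(8, 3, 3, padding=1)
    )


def one_step(model: nn.Module, x: torch.Tensor, y: torch.Tensor) -> torch.Tensor:
    """Run one SGD step, then return the model's output on x."""
    opt = torch.optim.SGD(model.parameters(), lr=0.1)
    loss = nn.functional.mse_loss(model(x), y)
    opt.zero_grad()
    loss.backward()
    opt.step()
    with torch.no_grad():
        return model(x)


def compare_backend(backend: str) -> float:
    """Max abs difference between eager and torch.compile(backend=...) after one step."""
    torch._dynamo.reset()  # clear Dynamo's cache so this backend compiles fresh
    torch.manual_seed(123)
    x, y = torch.randn(2, 3, 16, 16), torch.randn(2, 3, 16, 16)
    ref = one_step(make_model(), x, y)
    out = one_step(torch.compile(make_model(), backend=backend), x, y)
    return (ref - out).abs().max().item()
```

A single step on random data may show only tiny differences. The regression reported here appears over many epochs, so the same comparison is more telling on the real model and data, run for several steps.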

Here is the requirements.txt.

So this doesn't get lost, do you mind cross-posting to the pytorch/pytorch GitHub issues? An on-call engineer can help take a look.

Could you reproduce the same error?