Hi All,

We are stuck with pytorch installation on server. Below are the collect.py details:

(collect.py reference : https://raw.githubusercontent.com/pytorch/pytorch/master/torch/utils/collect_env.py)

(gpu_env) python collect.py

Collecting environment information…

/opt/platformx/sentiment_analysis/gpu_env/lib64/python3.8/site-packages/torch/cuda/init.py:82: UserWarning: CUDA initialization: CUDA driver initialization failed, you might not have a CUDA gpu. (Triggered internally at …/c10/cuda/CUDAFunctions.cpp:112.)

return torch._C._cuda_getDeviceCount() > 0

PyTorch version: 1.11.0+cu113

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Red Hat Enterprise Linux 8.6 (Ootpa) (x86_64)

GCC version: (GCC) 8.5.0 20210514 (Red Hat 8.5.0-10)

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.28

Python version: 3.8.12 (default, Sep 16 2021, 10:46:05) [GCC 8.5.0 20210514 (Red Hat 8.5.0-3)] (64-bit runtime)

Python platform: Linux-4.18.0-372.13.1.el8_6.x86_64-x86_64-with-glibc2.2.5

Is CUDA available: False

CUDA runtime version: 11.4.48

GPU models and configuration: GPU 0: GRID M6-4Q

Nvidia driver version: 470.82.01

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

Versions of relevant libraries:

[pip3] numpy==1.23.1

[pip3] torch==1.11.0+cu113

[conda] Could not collect

What all we have tried:

-Installing torch==1.11.0+cu113, torch==1.12.0+cu113, torch==1.11.0+cu102, torch==1.12.0+cu102.

-Installing from .whl files for python 3.8 and cu113

-Upgrading pip and pip3

-Tried a fresh virtual enviroenment.

We know two other ways, but not sure if it would work:

- Downgrading CUDA version from 11.4 to 11.3

- Building pytroch for CUDA 11.4 from source.

We cannot use Anaconda as well, only pip is allowed.

The above methods require sudo permissions that we don’t have. So, it would be better if anyone can suggest alternatives or better solutions.

Thanks

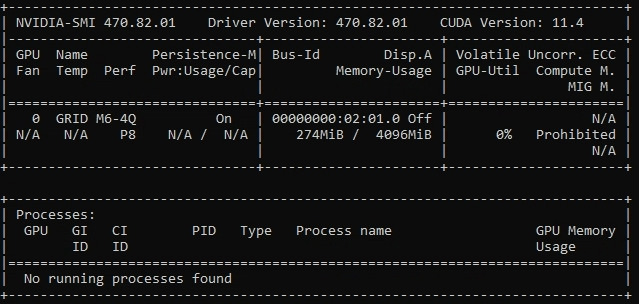

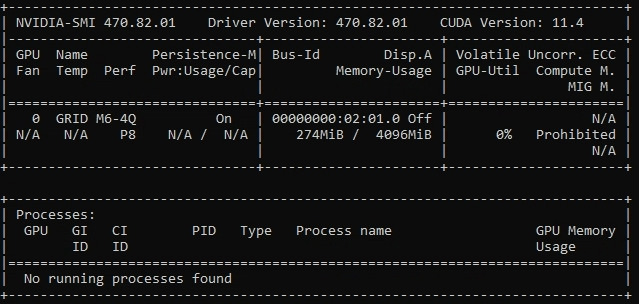

nvidia-smi output :

PyTorch is unable to communicate with the GPU as its initialization is failing:

UserWarning: CUDA initialization: CUDA driver initialization failed, you might not have a CUDA gpu.

In case you have installed the drivers recently, make sure to reboot the node.

Thanks for the reply,

We tried restarting the server, but it still shows the same issue.

Any other way?

I had the same issue with CUDA version 11.4. torch.cuda.is_available() gives True. But the gpu is not recognised when used with pytorch-lightning.

One thing different than the original question parameters was that I had access to conda. If somebody is looking for a possible solution:

- I created a fresh conda environment.

- In that environment, I installed torch, torchvision and cudatoolkit for CUDA 11.3

# CUDA 11.3

pip install torch==1.11.0+cu113 torchvision==0.12.0+cu113 torchaudio==0.11.0 --extra-index-url https://download.pytorch.org/whl/cu113

- The rest of the packages, i installed via pip in the same environment.

pip install -r requirements.txt

This solved the issue for me.  . The gpu is being used now.

. The gpu is being used now.

I still encounter the torch.cuda.is_available() is False for CUDA version 11.4 on Jetson Orin NX 16GB platform

Based on the instruction of pytorch.org https://pytorch.org/get-started/previous-versions/, I use the following command to install torch and torchvision in miniconda virtual environment:

conda install pytorch==1.12.1 torchvision==0.13.1 cudatoolkit=11.3 -c pytorch -c conda-forge

But from the result of collect_env.py, it seems that the installed version of torch and torchvision is CPU version. The env collected result as follows:

PyTorch version: 1.12.1

Is debug build: False

CUDA used to build PyTorch: Could not collect

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.6 LTS (aarch64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: version 3.16.3

Libc version: glibc-2.31

Python version: 3.8.16 (default, Mar 2 2023, 03:16:31) [GCC 11.2.0] (64-bit

runtime)

Python platform: Linux-5.10.104-tegra-aarch64-with-glibc2.26

Is CUDA available: False

CUDA runtime version: 11.4.315

CUDA_MODULE_LOADING set to: N/A

GPU models and configuration: Could not collect

Nvidia driver version: Could not collect

cuDNN version: Probably one of the following:

/usr/lib/aarch64-linux-gnu/libcudnn.so.8.6.0

/usr/lib/aarch64-linux-gnu/libcudnn_adv_infer.so.8.6.0

/usr/lib/aarch64-linux-gnu/libcudnn_adv_train.so.8.6.0

/usr/lib/aarch64-linux-gnu/libcudnn_cnn_infer.so.8.6.0

/usr/lib/aarch64-linux-gnu/libcudnn_cnn_train.so.8.6.0

/usr/lib/aarch64-linux-gnu/libcudnn_ops_infer.so.8.6.0

/usr/lib/aarch64-linux-gnu/libcudnn_ops_train.so.8.6.0

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: aarch64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 8

On-line CPU(s) list: 0-7

Thread(s) per core: 1

Core(s) per socket: 4

Socket(s): 2

Vendor ID: ARM

Model: 1

Model name: ARMv8 Processor rev 1 (v8l)

Stepping: r0p1

CPU max MHz: 1984.0000

CPU min MHz: 115.2000

BogoMIPS: 62.50

L1d cache: 512 KiB

L1i cache: 512 KiB

L2 cache: 2 MiB

L3 cache: 4 MiB

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via

prctl

Vulnerability Spectre v1: Mitigation; __user pointer sanitization

Vulnerability Spectre v2: Not affected

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Flags: fp asimd evtstrm aes pmull sha1 sha2 crc32 atomics fphp

asimdhp cpuid asimdrdm lrcpc dcpop asimddp uscat ilrcpc flagm

Versions of relevant libraries:

[pip3] numpy==1.21.0

[pip3] torch==1.12.1

[pip3] torchvision==0.13.1a0

[conda] cudatoolkit 11.3.1 h89cd5c7_10 conda-forge

[conda] numpy 1.21.3 py38hb9da153_0 conda-forge

[conda] pytorch 1.12.1 cpu_py38ha09e9da_1

[conda] torchvision 0.13.1 cpu_py38heb4ea19_0

Is there any experience to resolve this issue?

For Jetson you would have to use the custom wheels from NVIDIA which you can find e.g. here and here.

Is there any success experience to porting Yolov5 on Jetson Orin NX 16GB with torch.cuda.is_available true?

Actually what make me to adopt the pytorch.org instruction is version mismatch problem between torch and torchvision after following the instruction from nvidia developer forums pytorch-for-jetson/72048 and nvidia doc install-pytorch-jetson-platform/index.html. I also tried the pytorch docker in NVIDIA L4T PyTorch | NVIDIA NGC which can get torch.cuda.is_available() return true but encounter another trouble to use camera in docker.

Before running the yolov5 code, I am not sure whether I still need to adjust the source code of torch module in upsampling.py of toch2.0 in l4t-pytorch docker image. Upsampling.py need some modification in torch 1.12.1 if in Yolov5 usage scenario.

The code of my yolov5 project has been successfully run on Jetson Nano after following the pytorch and tochvison installation instruction from q-engineering qengineering.eu/install-pytorch-on-jetson-nano.html but it is not for Jetson Orin NX. The code can also run on Server with RTX3080Ti.

The issue with the binaries posted on PyTorch.org is that no Jetson binaries are available as the ARM build should be for Macs and thus does not support CUDA. I don’t know if modifications are needed to run Yolo.

Update for test result of yolov5 on jetson Orin NX 16GB with Jetpack 5.1.1.

Following the instruction of nvidia developer forums, I select Pytorch 1.13.0 and tochvision 0.13.0 build wheel. Except small modification of torch upsampling.py and torchvision qnms_kernel.cpp, yolov5 is run as expected performance on the Orin NX 16GB with torch.cuda.is_available true.

Thanks @ptrblck

1 Like

Great, thanks for sharing the outcome!