I’ve combed through a few forum posts on this topic, but none of the solutions I’ve seen have worked. I installed PyTorch into my environment with the following command: ‘pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124’.

Output from ‘torch.__version__’:

2.4.0+cpu
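For reference, here is a minimal sketch of how the build type can be read from that version string and cross-checked against the installed package. The helper names `local_build_tag` and `describe_build` are hypothetical, made up for this illustration; `torch.version.cuda` and `torch.cuda.is_available()` are existing PyTorch attributes, and the live check only runs if torch is importable in the interpreter executing the script.

```python
import importlib.util


def local_build_tag(version: str) -> str:
    """Return the part after '+' in a version string, or '' if there is none."""
    _, _, tag = version.partition("+")
    return tag


def describe_build(version: str) -> str:
    """Classify a torch version string by its local build tag (illustrative helper)."""
    tag = local_build_tag(version)
    if tag == "cpu":
        return "CPU-only build"
    if tag.startswith("cu"):
        return f"CUDA build ({tag})"
    return "no build tag in version string"


# The version string reported above.
print(describe_build("2.4.0+cpu"))

# Only query the installed package if torch is importable in this interpreter.
if importlib.util.find_spec("torch") is not None:
    import torch

    print("torch.__version__:", torch.__version__, "->", describe_build(torch.__version__))
    # torch.version.cuda is None on CPU-only builds.
    print("torch.version.cuda:", torch.version.cuda)
    print("torch.cuda.is_available():", torch.cuda.is_available())
```

A `+cpu` tag means the installed wheel was built without CUDA support, regardless of which toolkit or driver is present on the machine.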

Output from ‘nvcc --version’:

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2024 NVIDIA Corporation

Built on Tue_Feb_27_16:28:36_Pacific_Standard_Time_2024

Cuda compilation tools, release 12.4, V12.4.99

Build cuda_12.4.r12.4/compiler.33961263_0

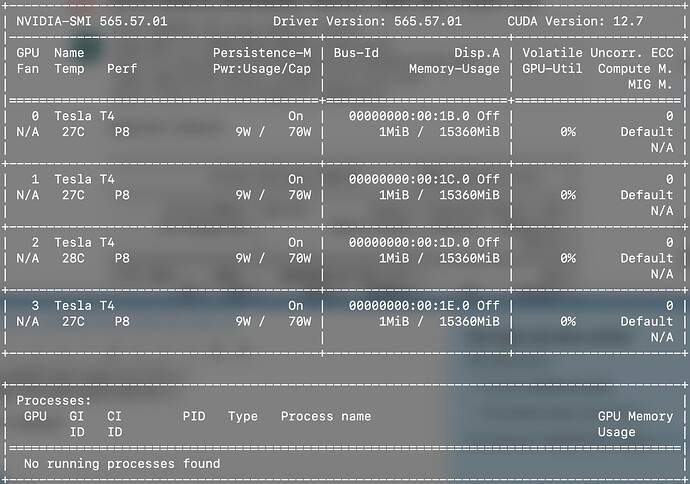

Output from ‘nvidia-smi’:

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 560.94 Driver Version: 560.94 CUDA Version: 12.6 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Driver-Model | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA GeForce RTX 3050 ... WDDM | 00000000:01:00.0 Off | N/A |

| N/A 45C P0 9W / 40W | 0MiB / 4096MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

I do not know why ‘nvcc’ shows 12.4 while ‘nvidia-smi’ shows 12.6.

I tried a fresh install of both torch and the CUDA toolkit; neither had any effect. One forum post suggested rolling torch’s supported CUDA version back to 12.1 if you have 12.6 installed, but this did not work either. Am I missing something obvious?
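One check that may help anyone trying to reproduce this: a minimal sketch that prints which interpreter is running and which versions of the three packages that interpreter sees. It rests on an assumption not confirmed anywhere above, namely that ‘pip3’ and the Python that runs the script could belong to different environments. The helper name `installed_version` is hypothetical; `importlib.metadata` is part of the standard library.

```python
import sys
from importlib import metadata


def installed_version(package: str):
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Path of the interpreter executing this script.
print("interpreter:", sys.executable)

# Versions of the packages as seen by this interpreter.
for name in ("torch", "torchvision", "torchaudio"):
    print(name, "->", installed_version(name))
```

If the interpreter path is not the environment that ‘pip3’ installed into, the fresh installs may have landed somewhere else.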