Hi there.

I’m trying to run a version of the new Llama3 model.

Specifically this one: NurtureAI/Meta-Llama-3-8B-Instruct-64k-GGUF (on Hugging Face).

When I run the code from the example, or modify the messages a bit, everything works fine.

If I give it a very large message (let's say some HTML code), I get this error:

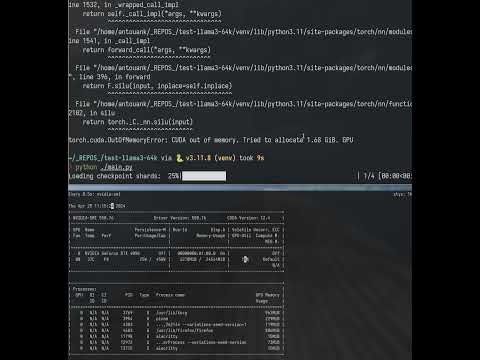

python ./main.py
Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.
Loading checkpoint shards: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████| 4/4 [00:05<00:00, 1.36s/it]
The attention mask and the pad token id were not set. As a consequence, you may observe unexpected behavior. Please pass your input's `attention_mask` to obtain reliable results.
Setting `pad_token_id` to `eos_token_id`:128001 for open-end generation.
Traceback (most recent call last):
  File "/home/antouank/_REPOS_/test-llama3-64k/./main.py", line 1439, in <module>
    outputs = model.generate(
              ^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/utils/_contextlib.py", line 115, in decorate_context
    return func(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/transformers/generation/utils.py", line 1622, in generate
    result = self._sample(
             ^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/transformers/generation/utils.py", line 2791, in _sample
    outputs = self(
              ^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1532, in _wrapped_call_impl
    return self._call_impl(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1541, in _call_impl
    return forward_call(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/transformers/models/llama/modeling_llama.py", line 1208, in forward
    outputs = self.model(
              ^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1532, in _wrapped_call_impl
    return self._call_impl(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1541, in _call_impl
    return forward_call(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/transformers/models/llama/modeling_llama.py", line 1018, in forward
    layer_outputs = decoder_layer(
                    ^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1532, in _wrapped_call_impl
    return self._call_impl(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1541, in _call_impl
    return forward_call(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/transformers/models/llama/modeling_llama.py", line 756, in forward
    hidden_states = self.mlp(hidden_states)
                    ^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1532, in _wrapped_call_impl
    return self._call_impl(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1541, in _call_impl
    return forward_call(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/transformers/models/llama/modeling_llama.py", line 240, in forward
    down_proj = self.down_proj(self.act_fn(self.gate_proj(x)) * self.up_proj(x))
                               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1532, in _wrapped_call_impl
    return self._call_impl(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1541, in _call_impl
    return forward_call(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/modules/activation.py", line 396, in forward
    return F.silu(input, inplace=self.inplace)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/antouank/_REPOS_/test-llama3-64k/venv/lib/python3.11/site-packages/torch/nn/functional.py", line 2102, in silu
    return torch._C._nn.silu(input)
           ^^^^^^^^^^^^^^^^^^^^^^^^
torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 1.68 GiB. GPU

My GPU is a 4090, and it surely has more than 1.68 GiB of free VRAM! So what's the issue here?
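For reference, here is a rough back-of-the-envelope sketch of where the memory might be going. The architecture numbers are the published Llama-3-8B config values. The 2-byte dtype (float16/bfloat16) is an assumption, and the helper names are made up for illustration. The failing call is the `silu` inside the MLP, whose output has shape `(1, num_tokens, intermediate_size)`, so the size of the failed allocation hints at how long the prompt was.

```python
# Back-of-the-envelope memory estimate for one long prompt.
# Architecture numbers: published Llama-3-8B config values.
# Assumptions (not taken from the traceback): 2-byte dtype, batch size 1.

GIB = 1024 ** 3

INTERMEDIATE_SIZE = 14336   # width of the gate_proj / up_proj output
NUM_LAYERS = 32
NUM_KV_HEADS = 8            # grouped-query attention
HEAD_DIM = 128
NUM_PARAMS = 8.03e9         # approximate parameter count
BYTES_PER_VALUE = 2         # assumption: float16 / bfloat16


def mlp_activation_bytes(num_tokens: int) -> int:
    """Size of one (1, num_tokens, INTERMEDIATE_SIZE) tensor, e.g. the silu output."""
    return num_tokens * INTERMEDIATE_SIZE * BYTES_PER_VALUE


def kv_cache_bytes(num_tokens: int) -> int:
    """Keys and values cached for every layer."""
    return num_tokens * NUM_LAYERS * 2 * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE


def tokens_for_allocation(alloc_gib: float) -> int:
    """Prompt length whose MLP activation matches a given allocation size."""
    return round(alloc_gib * GIB / (INTERMEDIATE_SIZE * BYTES_PER_VALUE))


if __name__ == "__main__":
    n = tokens_for_allocation(1.68)
    print(f"prompt length implied by 1.68 GiB: ~{n} tokens")
    print(f"weights:        {NUM_PARAMS * BYTES_PER_VALUE / GIB:.1f} GiB")
    print(f"KV cache:       {kv_cache_bytes(n) / GIB:.1f} GiB")
    print(f"one MLP tensor: {mlp_activation_bytes(n) / GIB:.2f} GiB")
```

Under those assumptions, the 1.68 GiB request corresponds to a prompt of roughly 63k tokens. The weights (about 15 GiB) plus the KV cache for that many tokens (about 7.7 GiB) would already come close to filling a 24 GB card before this one extra tensor is requested, so the 1.68 GiB figure would be the allocation that tipped it over rather than the total memory needed.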

How can I make it allow large messages?

I tried googling this but I cannot find any similar reference anywhere.

Thank you.