That is the whole output. Nothing was “cut”, unless I misunderstood what you meant.

So a larger prompt can change the memory requirements by several GB??

I didn’t expect that.

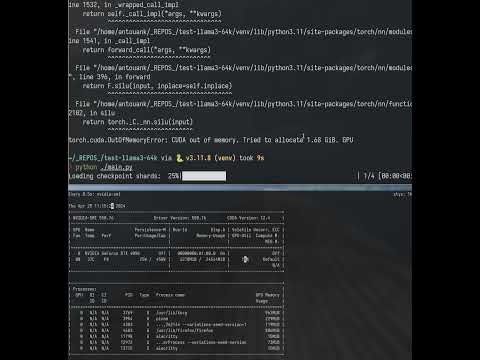

I made a video, and it does seem to fill up the VRAM (look at the nvidia-smi output below).

Is there a way to offload some of it to system RAM?

And how can I calculate the max prompt length it can handle with ~22GB of VRAM?

I’m baffled that it works with a 500-line prompt but not with a 1500-line prompt (basically I’m just giving it an HTML page as input; it’s not that many tokens per line).
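
For the "max prompt" question, here is a rough back-of-the-envelope sketch of how I understand the key/value cache to scale with prompt length. The model dimensions, weight size, and overhead below are made-up placeholders, not my actual model, and the function names are just for illustration. It only counts the KV cache, so treat the result as an optimistic upper bound: if the attention implementation materializes the full score matrix, that part grows roughly with the square of the prompt length, which might be why 500 lines fits and 1500 lines does not.

```python
GIB = 1024 ** 3

def kv_cache_bytes_per_token(n_layers, n_kv_heads, head_dim, bytes_per_elem=2):
    """Bytes of KV cache needed for one token (bytes_per_elem=2 means fp16)."""
    # Factor 2: each layer stores one key tensor and one value tensor.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem

def max_prompt_tokens(vram_bytes, weights_bytes, overhead_bytes, per_token_bytes):
    """Upper bound on tokens that fit, counting only the KV cache."""
    free = vram_bytes - weights_bytes - overhead_bytes
    return max(free, 0) // per_token_bytes

# Hypothetical numbers: 32 layers, 32 KV heads, head size 128, fp16 cache,
# 13 GiB of weights and 1 GiB of other overhead on a 22 GiB card.
per_token = kv_cache_bytes_per_token(n_layers=32, n_kv_heads=32, head_dim=128)
limit = max_prompt_tokens(22 * GIB, 13 * GIB, 1 * GIB, per_token)
print(per_token, limit)
```

With those placeholder numbers the cache costs 0.5 MiB per token, so 8 GiB of free VRAM would hold about 16k tokens. Plugging in the real values from the model config and the nvidia-smi reading should give a more useful estimate.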