Hello! I am running into a memory issue that I don't think I should be having with 24 GB of VRAM. I am trying to train Mask R-CNN, and directly after the first forward call the allocated memory explodes to ~20 GB. I don't expect this, since the model is ~200 MB and the batch is ~300 MB. Then, when I call loss.backward(), the allocation exceeds the GPU's capacity and the code crashes with an out-of-memory error. See the code snippet below. What am I doing wrong here?

I have tested a few different settings for PYTORCH_CUDA_ALLOC_CONF; they helped a bit, but didn't solve the problem completely.
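For readers unfamiliar with this setting: PYTORCH_CUDA_ALLOC_CONF is an environment variable read by PyTorch's CUDA caching allocator, so it needs to be in place before the first CUDA allocation. Separately from the reproduction script further down, here is a minimal sketch of one way to set it from Python. The option max_split_size_mb is the one named in the error message; the value 128 is purely an illustrative assumption, not a setting taken from this post.

```python
import os

# Assumption: 128 is an arbitrary illustrative value, not one tested in this post.
# Set the variable before the first CUDA allocation (simplest: before importing torch).
os.environ["PYTORCH_CUDA_ALLOC_CONF"] = "max_split_size_mb:128"

alloc_conf = os.environ["PYTORCH_CUDA_ALLOC_CONF"]
print("PYTORCH_CUDA_ALLOC_CONF =", alloc_conf)
```

A setting like this is aimed at fragmentation of cached blocks; it would not be expected to shrink the memory the forward pass genuinely needs.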

```python
import torch
from torchvision.models.detection import maskrcnn_resnet50_fpn, MaskRCNN_ResNet50_FPN_Weights

batch_size = 36
img_size = 800
objects_per_image = 10

device = torch.device("cuda")
model = maskrcnn_resnet50_fpn(weights=MaskRCNN_ResNet50_FPN_Weights.DEFAULT)
model = model.to(device)

print('Memory allocated: ', torch.cuda.max_memory_allocated() * 1e-9, 'GB,', 'memory reserved: ', torch.cuda.max_memory_reserved()*1e-9, 'GB')
# Memory allocated: 0.181971456 GB, memory reserved: 0.20132659200000003 GB

x = [torch.rand((3, img_size, img_size), device=device)] * batch_size
y = [{'boxes': torch.tensor([1, 2, 3, 4], device=device, dtype=torch.int64).repeat(40, 1),
      'labels': torch.ones((objects_per_image), device=device, dtype=torch.int64),
      'masks': torch.randint(img_size, size=(objects_per_image, 1, img_size, img_size), dtype=torch.int64)
      }] * batch_size

print('Memory usage of objects in batch (GB):')
print('\timgs: ', x[0].element_size() * x[0].nelement() * batch_size * 1e-9)
print('\tboxes: ', y[0]['boxes'].element_size() * y[0]['boxes'].nelement() * batch_size * 1e-9)
print('\tlabels: ', y[0]['labels'].element_size() * y[0]['labels'].nelement() * batch_size * 1e-9)
print('\tmasks: ', y[0]['masks'].element_size() * y[0]['masks'].nelement() * batch_size * 1e-9)
# Memory usage of objects in batch (GB):
# imgs: 0.27648
# boxes: 4.6080000000000006e-05
# labels: 2.8800000000000004e-06
# masks: 1.8432000000000002

print('Memory allocated: ', torch.cuda.max_memory_allocated() * 1e-9, 'GB,', 'memory reserved: ', torch.cuda.max_memory_reserved()*1e-9, 'GB')
# Memory allocated: 0.189653504 GB, memory reserved: 0.20132659200000003 GB

model.train()
output = model(x, y)

print('Memory allocated: ', torch.cuda.max_memory_allocated() * 1e-9, 'GB,', 'memory reserved: ', torch.cuda.max_memory_reserved()*1e-9, 'GB')
# Memory allocated: 21.554223616 GB, memory reserved: 22.085107712000003 GB

torch.cuda.empty_cache()

print('Memory allocated: ', torch.cuda.max_memory_allocated() * 1e-9, 'GB,', 'memory reserved: ', torch.cuda.max_memory_reserved()*1e-9, 'GB')
# Memory allocated: 21.554223616 GB, memory reserved: 22.085107712000003 GB

loss = sum(loss for loss in output.values())
loss.backward()
# torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 1.08 GiB (GPU 0; 23.62 GiB
# total capacity; 20.45 GiB already allocated; 869.31 MiB free; 20.98 GiB reserved in total by PyTorch) If
# reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See
# documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
```
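One caveat when reading the numbers in the script: torch.cuda.max_memory_allocated() and torch.cuda.max_memory_reserved() report peak values since the start of the program (or since the last reset), so they cannot drop after torch.cuda.empty_cache(). The sketch below prints the current and peak counters side by side. The helper name report_cuda_memory is hypothetical, and torch is imported inside the function only so that the helper can be defined on a machine without PyTorch.

```python
def report_cuda_memory(tag):
    """Print current and peak CUDA memory counters in GB (hypothetical helper)."""
    import torch  # imported lazily so defining the helper does not require PyTorch

    if not torch.cuda.is_available():
        print(f"{tag}: no CUDA device available")
        return None

    stats = {
        "allocated_now": torch.cuda.memory_allocated(),       # live tensors right now
        "reserved_now": torch.cuda.memory_reserved(),         # held by the caching allocator
        "allocated_peak": torch.cuda.max_memory_allocated(),  # high-water mark
        "reserved_peak": torch.cuda.max_memory_reserved(),    # high-water mark
    }
    print(tag, {name: round(value * 1e-9, 3) for name, value in stats.items()})
    return stats
```

Calling report_cuda_memory("after forward") right after the forward pass, and again after torch.cuda.empty_cache(), would separate what is still held from what was only a transient peak. torch.cuda.reset_peak_memory_stats() can be called between phases to restart the peak counters.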

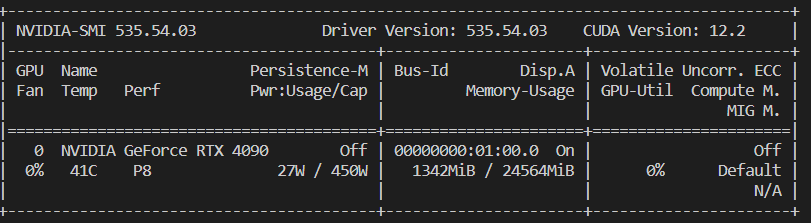

Also, here you can see the nvidia-smi output from right before running the code. As you can see, the GPU is largely unoccupied.
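The per-batch sizes printed by the script can be re-derived by hand, which also shows how they relate to what is actually allocated on the GPU. The sketch below is plain arithmetic on the shapes and dtypes in the script (float32 images; int64 boxes, labels and masks). It relies on two observations about the script: [tensor] * batch_size repeats references to one tensor rather than copying it, and the masks tensor is created without device=device, so it stays in host memory. All variable names here are illustrative.

```python
batch_size, img_size, objects_per_image, num_boxes = 36, 800, 10, 40
FLOAT32, INT64 = 4, 8  # bytes per element

# Bytes held by a single tensor of each kind.
one_image = 3 * img_size * img_size * FLOAT32
one_boxes = num_boxes * 4 * INT64
one_labels = objects_per_image * INT64
one_masks = objects_per_image * 1 * img_size * img_size * INT64

# What the script prints: one tensor's size multiplied by batch_size.
nominal_bytes = {
    "imgs": one_image * batch_size,
    "boxes": one_boxes * batch_size,
    "labels": one_labels * batch_size,
    "masks": one_masks * batch_size,
}
for name, size in nominal_bytes.items():
    print(f"{name}: {size * 1e-9:.6g} GB (nominal)")

# What is actually new on the GPU: one image, one boxes tensor, one labels tensor.
unique_gpu_bytes = one_image + one_boxes + one_labels

# Increase between the two readings printed before the forward pass
# (0.181971456 GB -> 0.189653504 GB), expressed in bytes.
reported_increase = 189_653_504 - 181_971_456

print("unique GPU bytes:", unique_gpu_bytes)
print("reported increase:", reported_increase)
```

On this reading, the inputs account for roughly 7.7 MB of GPU memory rather than ~300 MB; the small remainder between the two figures would be consistent with the allocator rounding block sizes up, which is an assumption here. The model still receives 36 images per forward call, so the jump to ~20 GB presumably comes from activations kept for the backward pass rather than from the input tensors.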