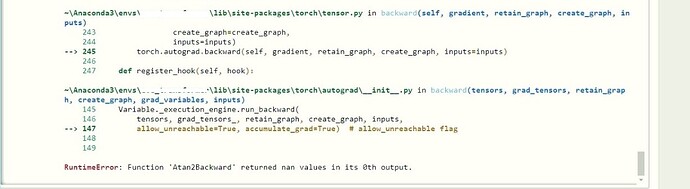

I am getting NaN values as my loss in a model whose only loss functions are MSE and a Euclidean loss. I used torch.autograd.detect_anomaly to get this error. I tried clipping gradients and adding epsilon terms to the losses, but nothing changed.

Does anyone have ideas on how I can solve or debug this further? Could it be a GPU-related error?

I guess the inputs to torch.atan2 might create invalid gradients, as the error message points out. For example, when both inputs are zero, the backward pass returns NaN:

```python
import torch

x = torch.zeros(4, requires_grad=True)
y = torch.zeros(4)

out = torch.atan2(x, y)
out.mean().backward()

print(x.grad)
# tensor([nan, nan, nan, nan])
```
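For reference, anomaly detection is what turns this silent NaN into an error that names the responsible backward node. The following is a minimal sketch reproducing that with the same zero inputs; the helper name `anomaly_message` is hypothetical. Anomaly mode slows execution noticeably, so it is best enabled only while debugging.

```python
import torch


def anomaly_message():
    """Hypothetical helper: return the anomaly-mode error text, or None."""
    x = torch.zeros(4, requires_grad=True)
    y = torch.zeros(4)
    # In anomaly mode, autograd checks each backward output for NaN and
    # raises a RuntimeError naming the backward function that produced it.
    with torch.autograd.detect_anomaly():
        out = torch.atan2(x, y)
        try:
            out.mean().backward()
        except RuntimeError as err:
            return str(err)
    return None


print(anomaly_message())
```

The message refers to the node by its class name (e.g. `Atan2Backward0` in recent versions), which is the name to search for in your own graph.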


Thanks a lot for the reply. Could you point me to some loss functions or other computations that might call torch.atan2 internally? I haven't used torch.atan2 directly anywhere in my implementation, and as I mentioned in my question, the only loss functions I use are MSE and Euclidean:

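```python
import torch

def euclidean(a, b):
    # element-wise squared difference
    squared_difference = torch.pow(a - b, 2)
    # sum over every dimension except the batch dimension
    ssd = torch.sum(squared_difference, dim=tuple(range(1, a.ndim)))
    # per-sample Euclidean distance
    return torch.sqrt(ssd)
```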
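Separately from atan2, a Euclidean loss written this way can itself produce NaN gradients when a sample's distance is exactly zero: the derivative of `torch.sqrt` is infinite at 0, and the chain rule then multiplies it by the zero gradient of `(a - b) ** 2`, giving `inf * 0 = NaN`. A minimal sketch follows; the `eps` parameter is a hypothetical addition and only matters if `a` and `b` can coincide exactly.

```python
import torch


def euclidean(a, b, eps=0.0):
    # Sum of squared differences over all non-batch dimensions.
    ssd = torch.sum((a - b) ** 2, dim=tuple(range(1, a.ndim)))
    # eps > 0 keeps the sqrt argument away from 0 (hypothetical safeguard).
    return torch.sqrt(ssd + eps)


a = torch.zeros(2, 3, requires_grad=True)
b = torch.zeros(2, 3)

# Zero distance without eps: sqrt'(0) is infinite, inf * 0 gives NaN.
euclidean(a, b).sum().backward()
print(a.grad)  # all NaN

# With a small eps the sqrt gradient is finite, so the result is 0.
a.grad = None
euclidean(a, b, eps=1e-8).sum().backward()
print(a.grad)  # all zeros
```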

I don't know where it's used, since I can't see the model definition.

If you cannot find the usage, you could use the "brute force" approach: print the .grad_fn attribute of all intermediate tensors and check where Atan2Backward shows up.
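Rather than printing each tensor's `.grad_fn` by hand, the same search can be automated by walking the autograd graph backward from the loss through `grad_fn.next_functions`. This is a minimal sketch, assuming you call it on the final loss tensor; the helper name `find_backward_nodes` is hypothetical. It matches on the class-name prefix because recent PyTorch versions append a suffix (e.g. `Atan2Backward0`).

```python
import torch


def find_backward_nodes(tensor, prefix="Atan2Backward"):
    """Hypothetical helper: collect autograd nodes whose class name starts with prefix."""
    found = []
    seen = set()
    stack = [tensor.grad_fn]
    while stack:
        node = stack.pop()
        # Inputs that don't require grad appear as None in next_functions.
        if node is None or node in seen:
            continue
        seen.add(node)
        if type(node).__name__.startswith(prefix):
            found.append(node)
        # next_functions holds (node, output_index) pairs for this node's inputs.
        stack.extend(fn for fn, _ in node.next_functions)
    return found


if __name__ == "__main__":
    x = torch.zeros(4, requires_grad=True)
    loss = torch.atan2(x, torch.ones(4)).pow(2).mean()
    print(find_backward_nodes(loss))  # a list with one Atan2Backward node
```

If a node turns up, the forward traceback printed by anomaly mode shows which line of the model created it.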