Hi, all. I am trying to reproduce the random warping algorithm described in the supplemental material of SketchEdit: Mask-Free Local Image Manipulation with Partial Sketches. I would also like to apply it as a pre-processing step when training my network.

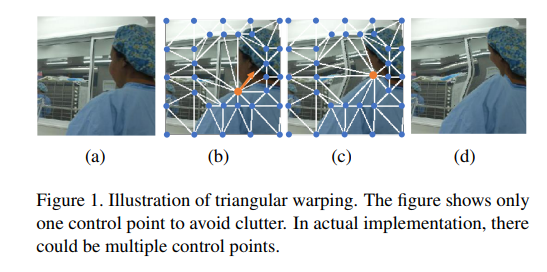

Original algorithm (Figure 1): they randomly sample some control points (blue points in Figure 1(b)) and construct a triangular mesh using Delaunay triangulation. To warp the modification area, they randomly move the control points by a small amount (∆x, ∆y) and apply an affine transformation to each triangle based on the new locations.
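Here is a minimal sketch of that procedure with scikit-image. It is based on my own assumptions, not on the paper's code. The helper name random_mesh_warp, the number of random points, the shift range, and pinning the four image corners (so the mesh covers the whole image) are all hypothetical choices. PiecewiseAffineTransform.estimate builds a Delaunay triangulation of the source points internally and fits one affine transform per triangle, so no separate triangulation call is needed.

```python
import numpy as np
from skimage.transform import PiecewiseAffineTransform, warp


def random_mesh_warp(image, num_random=20, max_shift=5.0, seed=None):
    """Hypothetical helper: random control points, Delaunay mesh, per-triangle affine warp."""
    rng = np.random.default_rng(seed)
    h, w = image.shape[:2]
    # Assumption: fixed anchors at the four corners so the mesh covers the whole image
    corners = np.array([[0, 0], [w - 1, 0], [0, h - 1], [w - 1, h - 1]], dtype=float)
    # Randomly sampled interior control points, in (x, y) = (col, row) order
    inner = rng.uniform([1, 1], [w - 2, h - 2], size=(num_random, 2))
    src = np.vstack([corners, inner])
    # Move only the interior control points by a small random (dx, dy)
    dst = src.copy()
    dst[4:] += rng.uniform(-max_shift, max_shift, size=(num_random, 2))
    # estimate() triangulates src (Delaunay) and fits one affine map per triangle
    tform = PiecewiseAffineTransform()
    tform.estimate(src, dst)
    # warp() uses tform as the output -> input coordinate map
    return warp(image, tform, output_shape=(h, w))
```

For example, `warped = random_mesh_warp(image, num_random=20, max_shift=5.0)`. If max_shift is large compared with the spacing between control points, triangles can fold over each other, so small shifts are the safer default.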

My solution with skimage:

```python
import time

import matplotlib.pyplot as plt
import numpy as np
from skimage import io
from skimage.transform import PiecewiseAffineTransform, resize, warp

img = io.imread("your_image.png")  # placeholder: read your own image here

image = resize(img, (256, 256))

SINCE = time.time()

rows, cols = image.shape[0], image.shape[1]
num_point = 17

# create the source mesh: a regular num_point x num_point grid of control points
src_cols = np.linspace(0, cols, num_point)
src_rows = np.linspace(0, rows, num_point)
src_rows, src_cols = np.meshgrid(src_rows, src_cols)
src = np.dstack([src_cols.flat, src_rows.flat])[0]  # (x, y) = (col, row) pairs

# create the destination mesh as a copy of the source mesh
dst_rows = np.array(src[:, 1])
dst_cols = np.array(src[:, 0])

# shift the row coordinate of one control point by -9 pixels
dst_rows[6 * num_point + 9] += -9
dst = np.vstack([dst_cols, dst_rows]).T

# estimate the piecewise affine transformation from the src and dst meshes
tform = PiecewiseAffineTransform()
tform.estimate(src, dst)

out_rows = rows
out_cols = cols

# transform the image with the estimated transformation
out = warp(image, tform, output_shape=(out_rows, out_cols))

# processing time in seconds
print(time.time() - SINCE)

# display the warped image (left) and the original image (right)
fig, ax = plt.subplots(1, 2, figsize=(18, 9))
ax[0].imshow(out)
ax[0].plot(tform.inverse(src)[:, 0], tform.inverse(src)[:, 1], '.b')
ax[0].axis((0, out_cols, out_rows, 0))
ax[1].imshow(image)
ax[1].plot(src[:, 0], src[:, 1], '.b')
ax[1].axis((0, out_cols, out_rows, 0))
plt.show()
```
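One detail in the plotting code is easy to miss. scikit-image's warp interprets its transform argument as the inverse map, from output coordinates to input coordinates. That is why the warped image is annotated with tform.inverse(src) rather than tform(src). The toy example below checks this convention with a pure translation. It is my own illustration and is not part of the original pipeline.

```python
import numpy as np
from skimage.transform import AffineTransform, warp

image = np.zeros((5, 5))
image[2, 2] = 1.0  # single bright pixel at (row=2, col=2)

# Translation maps (x, y) -> (x + 1, y)
shift = AffineTransform(translation=(1, 0))

# warp() evaluates out[r, c] = image at shift((c, r)) = image[r, c + 1],
# so the bright pixel moves one column to the LEFT in the output
out = warp(image, shift)
print(np.argwhere(out > 0.5))  # [[2 1]]
```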

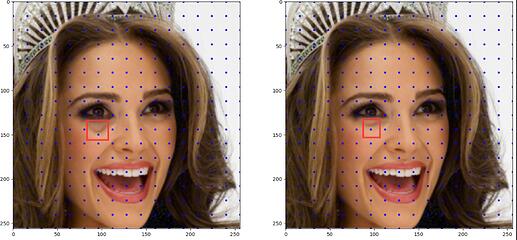

The result is shown above; the red rectangle highlights the selected point. However, the transformation takes about 0.2 s per image. For a large dataset (~25,000 images), that adds roughly 1.4 h of pre-processing per epoch (25,000 × 0.2 s = 5,000 s), which greatly increases the total training time. I therefore wonder whether it could be accelerated by running it on the GPU. But the only related transform I can find is torchvision.transforms.RandomAffine, which applies a single global random affine transformation. Is there a similar function in PyTorch that can warp an image given the src and dst meshes?
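One possible GPU route is sketched below. It is not an exact port of the paper's method: it replaces the per-triangle affine maps with bilinear interpolation of random displacements defined on a regular num_point × num_point control grid, like the 17 × 17 grid above. The sketch upsamples these displacements to a dense per-pixel flow field with torch.nn.functional.interpolate, then resamples the whole batch at once with torch.nn.functional.grid_sample. The function name random_grid_warp and its parameters are hypothetical. Keeping max_shift below half the grid spacing (about 8 px for 17 points on a 256 × 256 image) prevents neighbouring control points from crossing, which avoids fold-overs.

```python
import torch
import torch.nn.functional as F


def random_grid_warp(images: torch.Tensor, num_point: int = 17, max_shift: float = 4.0) -> torch.Tensor:
    """Hypothetical helper: warp a batch (N, C, H, W) by randomly moving a regular control grid.

    Displacements are interpolated bilinearly between control points
    (an approximation of, not the same as, a piecewise affine mesh warp).
    """
    n, _, h, w = images.shape
    device, dtype = images.device, images.dtype
    # Random pixel displacements (dx, dy) per control point, shape (N, 2, P, P)
    disp = (torch.rand(n, 2, num_point, num_point, device=device, dtype=dtype) * 2 - 1) * max_shift
    # Keep the border control points fixed so the image boundary does not move
    disp[:, :, 0, :] = 0
    disp[:, :, -1, :] = 0
    disp[:, :, :, 0] = 0
    disp[:, :, :, -1] = 0
    # Upsample the sparse displacements to a dense per-pixel field, shape (N, 2, H, W)
    flow = F.interpolate(disp, size=(h, w), mode="bilinear", align_corners=True)
    # Convert pixel displacements to grid_sample's normalized [-1, 1] units
    flow_x = flow[:, 0] * 2 / (w - 1)
    flow_y = flow[:, 1] * 2 / (h - 1)
    # Identity sampling grid in normalized coordinates
    ys = torch.linspace(-1, 1, h, device=device, dtype=dtype)
    xs = torch.linspace(-1, 1, w, device=device, dtype=dtype)
    grid_y, grid_x = torch.meshgrid(ys, xs, indexing="ij")
    # grid_sample expects (N, H, W, 2) with x first; like skimage's warp, it maps output -> input
    grid = torch.stack((grid_x + flow_x, grid_y + flow_y), dim=-1)
    return F.grid_sample(images, grid, mode="bilinear", padding_mode="border", align_corners=True)
```

For example, `random_grid_warp(batch.cuda(), num_point=17, max_shift=4.0)` warps every image in a batch with a few tensor operations instead of per-image Python work. If the exact triangle-based warp matters, a closer match would require computing, for each output pixel, its triangle and barycentric coordinates to build the sampling grid. The final grid_sample call would stay the same.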

Thank you!