torch.istft spends almost all its time in the NOLA check.

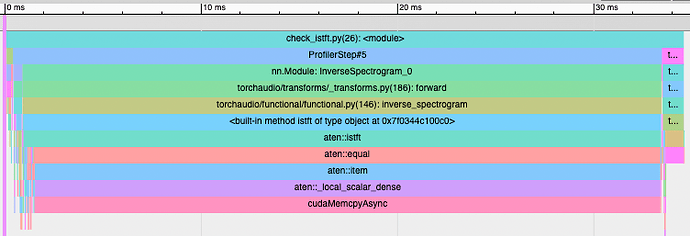

Below is a screenshot of the profiling of stft followed by istft of a signal of shape (32, 4, 2, 7 * 44100). This is just a toy example, but when the istft is part of a larger neural network, it causes everything to synchronize.

I found the cause of the synchronization (see code trail below). But I am wondering if there is any way around this to avoid the synchronization.

From the ATen/native/SpectralOps.cpp#L1154

if (at::is_scalar_tensor_true(window_envelop_lowest)) {

std::ostringstream ss;

REPR(ss) << "window overlap add min: " << window_envelop_lowest;

AT_ERROR(ss.str());

}

where, the call to is_scalar_tensor_true calls at::equal function at ATen/TensorSubclassLikeUtils.h#L84

inline bool is_scalar_tensor_true(const Tensor& t) {

TORCH_INTERNAL_ASSERT(t.dim() == 0)

TORCH_INTERNAL_ASSERT(t.scalar_type() == kBool)

return at::equal(t, t.new_ones({}, t.options()));

}

which in turn calls ATen/native/cuda/Equal.cpp#L29

return at::cuda::eq(self, src).all().item().to<bool>();

The .item() call does a memcopy from device to host and also synchronizes all operations on the GPU.

Is it possible to maybe allow users to bypass the NOLA check if they want?