I am studying the code in [GitHub - fab-jul/L3C-PyTorch: PyTorch Implementation of the CVPR'19 Paper "Practical Full Resolution Learned Lossless Image Compression"] and want to run it on an NVIDIA A100. However, the A100 requires CUDA 11, while PyTorch 1.4 and earlier only support CUDA 10.1 and older.

This code runs correctly on PyTorch 1.1-1.4 with CUDA 10.1, but not on PyTorch 1.5 or later: when I train it with those versions, the generated images contain noise.

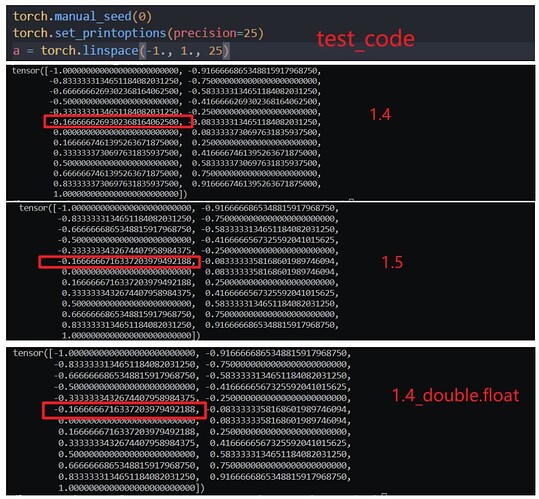

While debugging, I found that PyTorch 1.5 changed `torch.linspace` and PyTorch 1.6 changed `torch.nn.Conv2d`.
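One way to check whether a changed layer such as `torch.nn.Conv2d` drifts numerically is to compare its float32 output with a float64 reference that uses the same weights, then rerun the script under each PyTorch version. This is a minimal sketch; the layer shape and input size are arbitrary assumptions, not L3C's actual configuration.

```python
import copy

import torch
import torch.nn as nn

torch.manual_seed(0)

# Arbitrary example layer (assumed sizes, not taken from L3C)
conv32 = nn.Conv2d(3, 16, kernel_size=3, padding=1)
# Float64 copy with identical weights, used as a high-precision reference
conv64 = copy.deepcopy(conv32).double()

# Runs on the CPU; move conv32, conv64 and x to "cuda" to check the GPU path
x = torch.randn(1, 3, 32, 32)

with torch.no_grad():
    out32 = conv32(x)
    out64 = conv64(x.double())

# Largest deviation of the float32 result from the float64 reference
max_err = (out32.double() - out64).abs().max().item()
print("torch", torch.__version__, "max abs error vs float64:", max_err)
```

An error near float32 rounding level (roughly 1e-7 to 1e-6 for values of order 1) is ordinary; a much larger value, or one that jumps between versions, would point at the layer.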

No, you won't be able to use CUDA 11 with PyTorch 1.4: 1.4.0 was released before CUDA 11 came out and is therefore not compatible with it. You could of course try to cherry-pick the needed changes into a custom 1.4 branch, but this sounds like a lot of unnecessary work.

I would recommend updating to the latest PyTorch release instead.

Yes, I already updated PyTorch, but the update introduced a lot of noise, and I'm unsure how to resolve it. As I mentioned earlier, `torch.linspace` behaves differently in PyTorch 1.4 and 1.5.

Could you post a code snippet showing the different behaviors, please?

As you can see, the results of test_code differ between PyTorch 1.5 and 1.4. I believe this discrepancy might be a precision issue (double vs. float). To investigate further, I ran `torch.linspace(-1., 1., 25, dtype=torch.double).float()` in PyTorch 1.4 and obtained the same output as in 1.5.
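The comparison described above can be reproduced with a short script run once under each PyTorch version. It only contrasts the default float32 `torch.linspace` with the float64-then-cast variant from this post; the printed values depend on the installed version.

```python
import torch

steps = 25
# Computed directly in the default dtype (float32)
lin_f32 = torch.linspace(-1., 1., steps)
# Computed in float64, then rounded to float32
lin_f64_cast = torch.linspace(-1., 1., steps, dtype=torch.double).float()

diff = (lin_f32 - lin_f64_cast).abs()
print("torch", torch.__version__)
print("elements that differ:", int((diff > 0).sum()))
print("max abs difference:", diff.max().item())
# Gap between 1.0 and the next float32 value, for scale
print("float32 eps:", torch.finfo(torch.float32).eps)
```

A maximum difference of a few float32 ulps means the two variants differ only in rounding.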

Based on this observation, I don't think the PyTorch version itself is the reason L3C cannot be trained with newer versions. To explore this further, I ran the L3C code with PyTorch 1.4, replacing the `torch.linspace` call with its `dtype=torch.double` version followed by `.float()`, and noise appeared in the results.

I also don't think these expected small numerical differences, caused by limited floating-point precision, are to blame for any real issues.

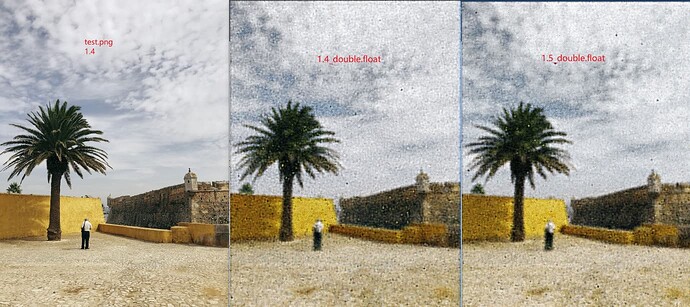

To make sure I'm not running the code in the wrong virtual environment, I'm checking every possibility. I will post the images obtained with the different versions and precisions later. Thank you for your time.
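A quick way to confirm which environment is actually active is to print what the running interpreter imports. This is a sketch using standard `torch` attributes; run it with the same interpreter used for training.

```python
import sys

import torch

# Collect what the running interpreter actually imports
info = {
    "python": sys.executable,
    "torch": torch.__version__,
    "torch_path": torch.__file__,
    "cuda_build": torch.version.cuda,  # None for CPU-only builds
    "cudnn": torch.backends.cudnn.version(),  # None if cuDNN is unavailable
}
for key, value in info.items():
    print(f"{key}: {value}")

if torch.cuda.is_available():
    major, minor = torch.cuda.get_device_capability(0)
    # An A100 reports compute capability 8.0
    print(f"GPU 0: {torch.cuda.get_device_name(0)} (sm_{major}{minor})")
else:
    print("No CUDA device visible")
```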

Hi, here are the results:

In PyTorch 1.4, the quality matches test.png. However, when I used the double-precision `torch.linspace` cast to float in PyTorch 1.4, noise appeared. In PyTorch 1.5, noise appears whether or not the double-then-float variant is used.