I am trying to implement Fast Neural Style Transfer in an iOS application. To do that, I started with an existing implementation of Fast Neural Style Transfer from here and attempted to follow the PyTorch Mobile tutorial by @xta0 to run the model on my device.

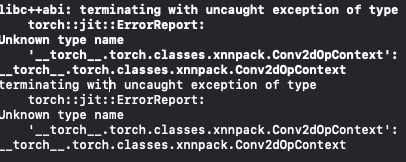

After generating the model.pt file, I tried to load it with TorchModule, following the tutorial code. However, I got this error when loading the module:

    Unknown type name '__torch__.torch.classes.xnnpack.TransposeConv2dOpContext':
    Serialized File "code/__torch__/transformer/___torch_mangle_391.py", line 18
      __annotations__["prepack_folding._jit_pass_packed_weight_11"] = __torch__.torch.classes.xnnpack.Conv2dOpContext
      __annotations__["prepack_folding._jit_pass_packed_weight_12"] = __torch__.torch.classes.xnnpack.Conv2dOpContext
      __annotations__["prepack_folding._jit_pass_packed_weight_13"] = __torch__.torch.classes.xnnpack.TransposeConv2dOpContext
      ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE
      __annotations__["prepack_folding._jit_pass_packed_weight_14"] = __torch__.torch.classes.xnnpack.TransposeConv2dOpContext
      __annotations__["prepack_folding._jit_pass_packed_weight_15"] = __torch__.torch.classes.xnnpack.Conv2dOpContext
    (lldb)
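
To narrow this down, it can help to see which custom class types the exported file actually references. The following is a minimal sketch, not part of the tutorial: it assumes the .pt file is the usual zip archive written by TorchScript, that the serialized code lives in `.py` entries inside it, and that custom classes are spelled with a `__torch__.torch.classes.` prefix. The function name `find_custom_classes` is made up for illustration.

```python
import re
import sys
import zipfile

# Assumed spelling of a custom-class reference in the serialized code, e.g.
# __torch__.torch.classes.xnnpack.TransposeConv2dOpContext
CLASS_PATTERN = re.compile(
    r"__torch__\.torch\.classes\.([A-Za-z_]\w*)\.([A-Za-z_]\w*)"
)


def find_custom_classes(archive_path):
    """Return a sorted list of 'namespace.ClassName' strings referenced
    by the .py entries inside the given archive."""
    found = set()
    with zipfile.ZipFile(archive_path) as archive:
        for name in archive.namelist():
            # Only the serialized source files are of interest here.
            if not name.endswith(".py"):
                continue
            source = archive.read(name).decode("utf-8", errors="replace")
            for namespace, class_name in CLASS_PATTERN.findall(source):
                found.add(f"{namespace}.{class_name}")
    return sorted(found)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python list_classes.py model.pt
    for entry in find_custom_classes(sys.argv[1]):
        print(entry)
```

Running it against the exported file lists every class the runtime on the device has to know about, which makes it easier to compare against what the installed LibTorch build registers.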

Within Xcode, the code fails here:

    private lazy var module: TorchModule = {
        // Verified that filePath exists.
        if let filePath = Bundle.main.path(forResource: "mobile_model2", ofType: "pt"),
            // The problem is at TorchModule init setup.
            let module = TorchModule(fileAtPath: filePath) {
            return module
        } else {
            fatalError("Can't find the model file!")
        }
    }()

The code fails specifically at torch::jit::load:

    - (nullable instancetype)initWithFileAtPath:(NSString*)filePath {
      self = [super init];
      if (self) {
        try {
          // CODE FAILS HERE, when trying to load!
          _impl = torch::jit::load(filePath.UTF8String);
          _impl.eval();
        } catch (const std::exception& exception) {
          NSLog(@"%s", exception.what());
          return nil;
        }
      }
      return self;
    }

This means that the C++ load call wasn't able to load my model.pt. What could possibly have caused this?

To verify that this issue is not caused solely by my model, I tried loading another pretrained model, resnet18. As expected, I also received errors while attempting to load this model using TorchModule, although a different set of errors:

**Unknown builtin op: aten::_add_relu_.**

**Could not find any similar ops to aten::_add_relu_. This op may not exist or may not be currently supported in TorchScript.**