I am trying to implement a simple producer/consumer pattern using `torch.multiprocessing` with the `spawn` start method. There is one consumer (the main process) and multiple producer processes.

The consumer process creates a PyTorch model in shared memory and passes it as an argument to the producers. The producers use the model to generate data objects and add them to a Queue, which the consumer also passes to them as an argument.

After starting the producers, the consumer will wait for items from the queue and process them in batches.
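For reference, here is a minimal sketch of the setup described above, not the actual code. The names `producer`, `collect_batch`, and `main`, the tiny `torch.nn.Linear` model, the item counts, and the 30-second timeout are all placeholders chosen for illustration. The sketch also assumes a CPU model and sends plain Python lists through the queue rather than tensors, which may differ from the real workload.

```python
import queue as queue_lib  # standard library; used for queue.Empty and for testing

import torch
import torch.multiprocessing as mp


def producer(worker_id, model, out_queue, n_items):
    """Hypothetical producer: runs the shared model and enqueues the results."""
    torch.manual_seed(worker_id)
    with torch.no_grad():
        for _ in range(n_items):
            x = torch.randn(1, 4)
            y = model(x)
            # Assumption: send plain Python data instead of tensors.
            out_queue.put((worker_id, y.squeeze(0).tolist()))


def collect_batch(in_queue, batch_size, timeout=30.0):
    """Wait for up to batch_size items; return a short batch if a get times out."""
    batch = []
    while len(batch) < batch_size:
        try:
            batch.append(in_queue.get(timeout=timeout))
        except queue_lib.Empty:
            break
    return batch


def main(n_producers=2, n_items=4, batch_size=4):
    ctx = mp.get_context("spawn")

    # The consumer (main process) owns the model and moves it to shared memory.
    model = torch.nn.Linear(4, 2)
    model.share_memory()

    out_queue = ctx.Queue()
    workers = [
        ctx.Process(target=producer, args=(i, model, out_queue, n_items))
        for i in range(n_producers)
    ]
    for w in workers:
        w.start()

    # Consume items in batches until everything expected has arrived.
    total = n_producers * n_items
    received = 0
    while received < total:
        batch = collect_batch(out_queue, batch_size)
        if not batch:  # nothing arrived within the timeout
            break
        received += len(batch)
        print(f"processed batch of {len(batch)} items ({received}/{total})")

    for w in workers:
        w.join()
    return received


if __name__ == "__main__":
    main()
```

The `if __name__ == "__main__":` guard matters with `spawn`, because each child process re-imports the main module. The timeout in `collect_batch` is only there so the sketch cannot block forever if a producer stalls.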

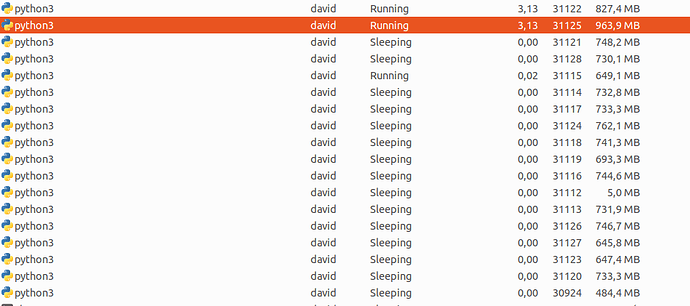

This works fine at first but slows down dramatically after only a few minutes. The producers initially generate data very rapidly, then get progressively slower after each consumer batch-processing step. RAM and GPU memory usage both stay below 50%, so a memory leak does not seem to be the issue. GPU utilization is also very low. As far as I can tell, some of the producers silently stop producing: the system monitor shows that at any given moment most of the processes are sleeping.

What could be going on here?