import torch.multiprocessing as mp
import torch
import numpy as np
import time

def read(q,b):
    for i in range(1000):
        start = time.time()
        for j in range(100):
            temp = q.get()
            b.wait()
        end = time.time()
        print('read', str(end-start))

def write(q,b):
    local = torch.ones(3000,3000)
    # local = np.ones((3000,3000))
    # local = [[1 for _ in range(3000)] for _ in range(3000)]
    for i in range(1000):
        start = time.time()
        for j in range(100):
            q.put(local)
            b.wait()
        end = time.time()
        print('write', str(end-start))

def mp_handler(q, b):
    p = mp.Process(target=read, args=(q,b))
    p2 = mp.Process(target=write, args=(q,b))
    p.start()
    p2.start()
    p.join()
    p2.join()

if __name__ == "__main__":
    start = time.time()
    q = mp.Queue()
    bar = mp.Barrier(2)
    mp_handler(q, bar)
    end = time.time()
    print('Finished', str(end - start))

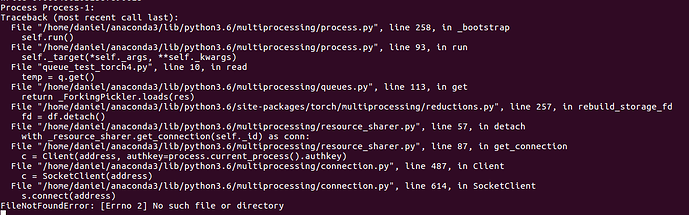

When I comment out the two `b.wait()` lines, I get the error shown above, although the write process runs really fast before it crashes. Is there an alternative to the barrier that would speed things up? Am I using the barrier properly in this case?
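One alternative I am considering, as a rough sketch rather than a tested fix, is to drop the barrier and give the queue a `maxsize`, so that `put()` blocks once the reader falls behind. The sketch below uses the standard `multiprocessing` module and a small `bytes` payload in place of the tensor so that it runs without PyTorch. I am assuming `torch.multiprocessing` behaves the same way here, since it wraps the standard module. `SENTINEL`, `run_demo`, and the `maxsize=8` value are names and numbers I made up for illustration, and I have not checked whether this avoids the error in the screenshot.

```python
import multiprocessing as mp  # assumption: torch.multiprocessing offers the same Queue/Process API

SENTINEL = None  # hypothetical end-of-stream marker, not part of the original script


def writer(q, n_items):
    payload = bytes(1024)  # small stand-in for the 3000x3000 tensor
    for _ in range(n_items):
        q.put(payload)  # blocks while the queue already holds `maxsize` items
    q.put(SENTINEL)  # tell the reader there is nothing more to read


def reader(q, count):
    received = 0
    while q.get() is not SENTINEL:  # blocks until the writer has put something
        received += 1
    count.value = received  # report the number of items back to the parent


def run_demo(n_items=1000, maxsize=8):
    q = mp.Queue(maxsize=maxsize)  # the bound throttles the writer
    count = mp.Value("i", 0)  # shared integer for the reader's result
    procs = [
        mp.Process(target=writer, args=(q, n_items)),
        mp.Process(target=reader, args=(q, count)),
    ]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    return count.value


if __name__ == "__main__":
    print("items received:", run_demo())
```

With this layout the writer can only run at most `maxsize` items ahead of the reader, instead of the two processes meeting at a barrier after every single item.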