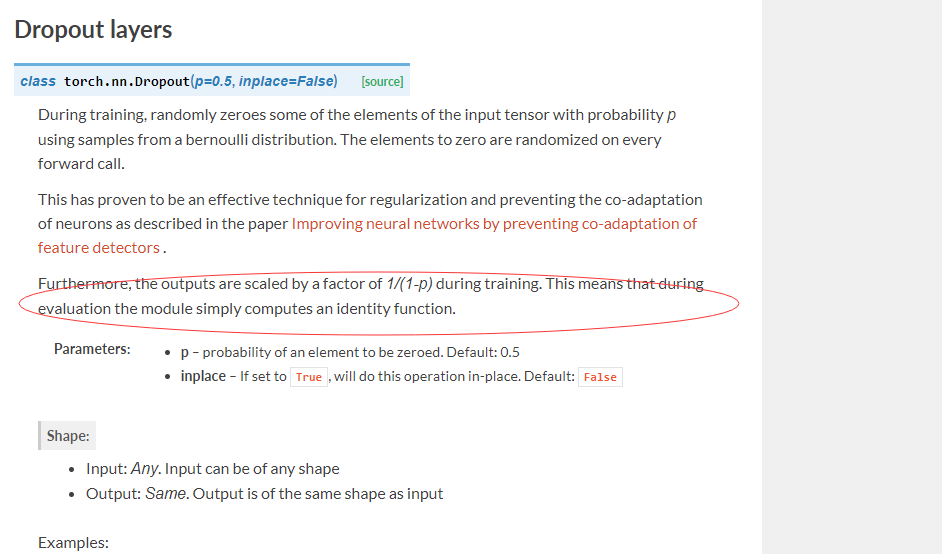

In the class `torch.nn.Dropout(p=0.5, inplace=False)`, why are the outputs scaled by a factor of 1/(1-p) during training? In the papers “Dropout: A Simple Way to Prevent Neural Networks from Overfitting” and “Improving neural networks by preventing co-adaptation of feature detectors”, the outputs of the dropout layer are not scaled by a factor of 1/(1-p).

Dropout is scaled by 1/p to keep the expected inputs equal during training and testing.

Have a look at this post for more information.

Where did you notice the 1/(1-p) scaling?

Sorry, that was my mistake!

From the original paper:

> If a unit is retained with probability p during training, the outgoing weights of that unit are multiplied by p at test time as shown in Figure 2. This ensures that for any hidden unit the expected output (under the distribution used to drop units at training time) is the same as the actual output at test time.
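Spelled out in the paper's notation (p is the keep probability, r is the Bernoulli mask applied to a unit's output x), the expectation argument in that quote is a one-line calculation. This is a short derivation added for illustration, not quoted from the paper:

$$
r \sim \mathrm{Bernoulli}(p), \qquad \mathbb{E}[r\,x] = p \cdot x + (1 - p) \cdot 0 = p\,x
$$

So multiplying the unit's output (equivalently, its outgoing weights) by p at test time reproduces the training-time expectation.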

Since PyTorch uses p as the drop probability (as opposed to the keep probability), the retained outputs are scaled by 1/(1-p).
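One way to see this behaviour directly is to run `torch.nn.Dropout` on a tensor of ones in training and evaluation mode. This is a minimal sketch assuming PyTorch is installed; the seed and tensor size are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
p = 0.5  # PyTorch convention: probability of zeroing an element
drop = nn.Dropout(p=p)
x = torch.ones(10_000)

# Training mode: elements are zeroed with probability p,
# survivors are multiplied by 1 / (1 - p).
drop.train()
y_train = drop(x)
kept = y_train[y_train != 0]
print(torch.allclose(kept, torch.full_like(kept, 1.0 / (1.0 - p))))  # True
print(y_train.mean().item())  # close to 1.0, the mean of the input

# Evaluation mode: the module is an identity, no extra multiply needed.
drop.eval()
y_eval = drop(x)
print(torch.equal(y_eval, x))  # True
```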

In the paper, p is the retention probability. In PyTorch, p is the drop probability, so the corresponding retention probability is 1-p; that is, each unit is retained with probability 1-p during training. Following the paper, the retained units should be left unchanged, so why are they scaled by a factor of 1/(1-p)? Instead, during testing each unit should be multiplied by (1-p).

That’s correct! You would have two options.

The option you mention is to drop units with keep probability (1-p) during training without any rescaling, and then multiply by (1-p) during testing.

This would however add an additional operation during test time.
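The first option can be sketched in plain Python. This is an illustrative sketch, not PyTorch's implementation: the function name `dropout_scale_at_test` is hypothetical, and activations are modelled as a list of floats.

```python
import random

def dropout_scale_at_test(x, keep_prob, training, rng=random):
    """Option 1 (paper convention, hypothetical helper): drop units during
    training without rescaling, then multiply by keep_prob at test time."""
    if training:
        # each unit is kept with probability keep_prob and left unchanged
        return [xi if rng.random() < keep_prob else 0.0 for xi in x]
    # the extra multiply at inference matches the training-time expectation
    return [xi * keep_prob for xi in x]

random.seed(0)
drop_p = 0.5               # PyTorch-style drop probability
keep_prob = 1.0 - drop_p
x = [1.0] * 100_000

train_out = dropout_scale_at_test(x, keep_prob, training=True)
test_out = dropout_scale_at_test(x, keep_prob, training=False)
print(sum(train_out) / len(train_out))  # roughly 0.5
print(test_out[0])                      # 0.5
```

Both phases average about 0.5 here, so training and test outputs agree in expectation, but every inference call pays for the multiply.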

We could avoid this operation by scaling during training.

So the second option is to scale the units by the reciprocal of the keep probability during training (often called "inverted dropout"), which yields the same expected output and is, as far as I know, the standard implementation of dropout.
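The second option can be sketched the same way. Again this is an illustration under the assumption that activations are a list of floats; `inverted_dropout` is a hypothetical name, not a library function.

```python
import random

def inverted_dropout(x, drop_p, training, rng=random):
    """Option 2 (inverted dropout, hypothetical helper): scale kept units by
    1 / (1 - drop_p) during training so inference is a plain identity."""
    if not training:
        return list(x)  # no extra work at inference
    keep_prob = 1.0 - drop_p
    return [xi / keep_prob if rng.random() < keep_prob else 0.0 for xi in x]

random.seed(0)
drop_p = 0.5
x = [1.0] * 100_000

train_out = inverted_dropout(x, drop_p, training=True)
test_out = inverted_dropout(x, drop_p, training=False)
print(sum(train_out) / len(train_out))  # roughly 1.0
print(max(train_out))                   # 2.0: kept units are doubled
print(test_out == x)                    # True
```

Training and test outputs again agree in expectation (about 1.0 here), but the scaling cost has moved entirely into training.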

Since training is already expensive, the extra scaling adds comparatively little cost there, and inference stays as fast as possible.

I understand now. Thank you very much!