Hi,

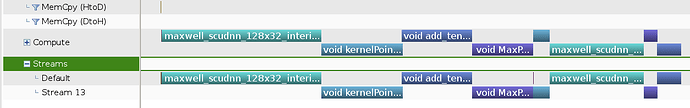

When I execute a torch.nn model in the context of a CUDA stream, some of the kernels run on this stream, while others are executed on the default stream. See the profiler output above and the sample program below. Is there a way to run all of the kernels on the custom stream?

Thanks

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class MNISTConvNet(nn.Module):
        def __init__(self):
            super(MNISTConvNet, self).__init__()
            self.conv1 = nn.Conv2d(1, 10, 5)
            self.pool1 = nn.MaxPool2d(2, 2)
            self.conv2 = nn.Conv2d(10, 20, 5)
            self.pool2 = nn.MaxPool2d(2, 2)
            self.fc1 = nn.Linear(320, 50)
            self.fc2 = nn.Linear(50, 10)
            self.cuda(0)  # compute on GPU

        def forward(self, input):
            x = self.pool1(F.relu(self.conv1(input)))
            x = self.pool2(F.relu(self.conv2(x)))
            x = x.view(x.size(0), -1)  # flatten to (batch, 320)
            x = F.relu(self.fc1(x))
            x = F.relu(self.fc2(x))
            return x


    def main():
        # Everything in this block is meant to run on the custom stream.
        with torch.cuda.stream(torch.cuda.Stream()):
            n = 100000
            net = MNISTConvNet()
            print(net)
            # The Variable wrapper is dropped here; tensors can be passed directly.
            input = torch.randn(n, 1, 28, 28).cuda(0)
            # Pinned host buffer so the device-to-host copy can be asynchronous.
            out = torch.FloatTensor(n, 10).pin_memory()
            # `async` is a reserved word in Python 3.7+; the argument is now `non_blocking`.
            out.copy_(net(input).data, non_blocking=True)
        torch.cuda.synchronize()
        print(out.size())


    if __name__ == '__main__':
        main()
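
In case it helps narrow things down, here is a minimal sketch of how one might check which stream PyTorch itself considers current inside and outside the `torch.cuda.stream(...)` context. The helper name `check_stream_context` is hypothetical, and the sketch assumes a PyTorch version that provides `torch.cuda.current_stream()` and `torch.cuda.default_stream()`. It only reports PyTorch's own bookkeeping; it does not explain what the profiler shows.

```python
import importlib.util


def check_stream_context():
    """Return (custom_inside, default_outside), or None if PyTorch/CUDA is unavailable.

    Hypothetical helper, for illustration only.
    """
    if importlib.util.find_spec("torch") is None:
        return None
    import torch

    if not torch.cuda.is_available():
        return None

    custom = torch.cuda.Stream()
    with torch.cuda.stream(custom):
        # Inside the context, PyTorch should report the custom stream as current.
        custom_inside = torch.cuda.current_stream() == custom
    # On exit the previously current stream is restored; when nothing else
    # has changed the current stream, that is the default stream.
    default_outside = torch.cuda.current_stream() == torch.cuda.default_stream()
    return custom_inside, default_outside


if __name__ == "__main__":
    print(check_stream_context())
```

If both values come back `True`, the context manager is switching the current stream as expected, and any kernels that still appear on the default stream in the profiler would have to be investigated separately.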