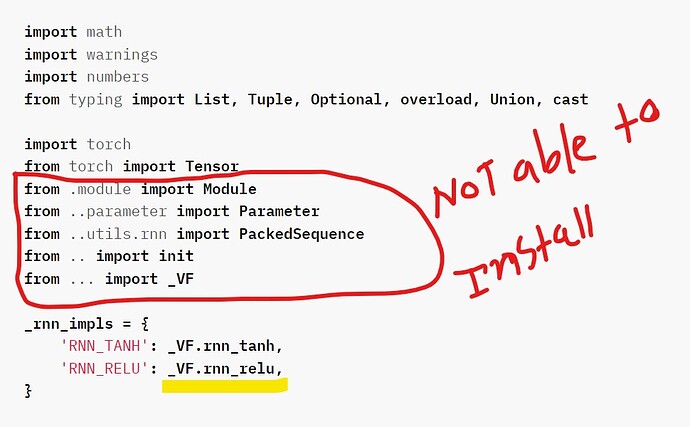

For my project work I had to go through the documentation of `torch.nn.modules.rnn`, because I wanted to know exactly which ReLU activation function is used there. I am building an RNN from scratch using trained weights and biases. My self-coded model's outputs match those of `torch.nn.modules.rnn` when I use the `tanh` activation function, but when I use the ReLU activation from `nn.ReLU`, the outputs don't match at all.

I tried hard to run the imported libraries in Google Colab so that I could use the same code and solve my problem, but even after installing the necessary libraries like pydot I couldn't find any clue toward a solution. More precisely, I want to run that `_VF.rnn_relu` line.
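For reference, here is a minimal sketch of the check I am trying to do. It assumes a single-layer, unidirectional `nn.RNN` with biases and `batch_first=False`. According to the `nn.RNN` docs, the update is `h_t = tanh(x_t W_ih^T + b_ih + h_(t-1) W_hh^T + b_hh)`, with ReLU used in place of tanh when `nonlinearity="relu"`. My understanding is that `_VF.rnn_relu` is the internal compiled op that performs this loop, so it should not need to be called directly to reproduce the result. The helper `manual_rnn_relu` is a hypothetical name of my own, not a PyTorch API. One detail worth checking in a hand-written version: `nn.ReLU` is a module class, so it has to be instantiated first (`nn.ReLU()(x)`), whereas `torch.relu(x)` is the functional form.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

input_size, hidden_size, seq_len, batch = 3, 4, 5, 2

# Reference: single-layer Elman RNN using ReLU instead of tanh (assumed setup).
rnn = nn.RNN(input_size, hidden_size, num_layers=1,
             nonlinearity="relu", batch_first=False)


def manual_rnn_relu(x, h0, w_ih, w_hh, b_ih, b_hh):
    """Hypothetical from-scratch loop:
    h_t = relu(x_t @ W_ih^T + b_ih + h_{t-1} @ W_hh^T + b_hh).
    Note that BOTH bias vectors are added at every time step."""
    h = h0
    outputs = []
    for t in range(x.size(0)):
        h = torch.relu(x[t] @ w_ih.T + b_ih + h @ w_hh.T + b_hh)
        outputs.append(h)
    return torch.stack(outputs), h


x = torch.randn(seq_len, batch, input_size)    # (seq, batch, feature)
h0 = torch.zeros(1, batch, hidden_size)        # (num_layers, batch, hidden)

with torch.no_grad():
    ref_out, ref_hn = rnn(x, h0)
    # Feed the module's own trained parameters into the manual loop.
    my_out, my_hn = manual_rnn_relu(
        x, h0[0],
        rnn.weight_ih_l0, rnn.weight_hh_l0,
        rnn.bias_ih_l0, rnn.bias_hh_l0,
    )

print(torch.allclose(ref_out, my_out, atol=1e-5))
print(torch.allclose(ref_hn[0], my_hn, atol=1e-5))
```

If both checks print `True`, the ReLU in `nn.RNN` is the plain elementwise `max(0, x)`, and any mismatch in a custom model is more likely to come from weight transposes, a missing bias, or how the activation is applied.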