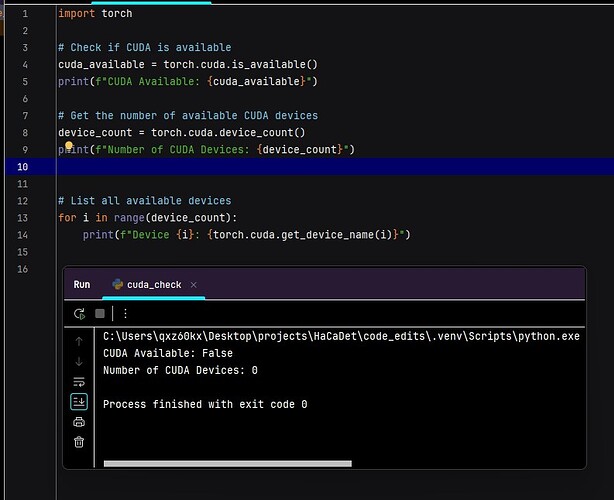

I have an HP ZBook Fury 16 G10 laptop with a 12 GB NVIDIA RTX 3500 Ada GPU, and I need to use it for an AI project written in Python. I have already installed CUDA Toolkit 12.8 on the laptop, and it recognizes the GPU without problems. However, when I try to use the GPU from my Python program, PyTorch does not recognize it. I use Windows 11.

Note: I am not allowed to install Anaconda on my system.

Both the nvcc --version and nvidia-smi commands work, and both show CUDA version 12.8.

I followed the torch installation command from the official PyTorch website:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu128

It installs torch 2.7.0

How can I enable CUDA support for torch inside my Python environment?

What does print(torch.version.cuda) return?

It returns ‘None’.
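For anyone landing here with the same symptom, a minimal diagnostic sketch is below. It relies only on torch.__version__, torch.version.cuda, torch.cuda.is_available() and torch.__file__; the function name describe_torch_build is made up for illustration. If built_with_cuda comes back as None, the installed wheel is a CPU-only build, no matter what nvcc or nvidia-smi report.

```python
import importlib


def describe_torch_build():
    """Return a small dict describing the installed torch build, if any.

    describe_torch_build is a hypothetical helper name, not part of PyTorch.
    """
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        # torch is not installed in the interpreter running this script.
        return {"installed": False}
    return {
        "installed": True,
        "version": torch.__version__,              # e.g. a "+cpu" or "+cu128" suffix may appear
        "built_with_cuda": torch.version.cuda,     # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
        "location": torch.__file__,                # shows which site-packages is in use
    }


if __name__ == "__main__":
    for key, value in describe_torch_build().items():
        print(f"{key}: {value}")
```

The location entry is worth a glance when several Python installations exist on the machine, since it shows which copy of torch is actually being imported.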

What might be the real issue? @ptrblck

You did not install a PyTorch binary with CUDA dependencies and most likely installed a CPU-only build. Just run pip install torch on Linux (for CUDA 12.6) or select the desired CUDA runtime dependency from our install matrix.

I use Windows 11. Whenever I run the command from the install matrix, pip reports that the requirements are already satisfied.

Uninstall all previously installed PyTorch binaries, as the CPU-only binary is obviously still being found and used.
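A rough sketch of that uninstall-then-reinstall sequence, written as a Python script so that pip runs under the same interpreter the project uses. The helper name build_reinstall_commands is hypothetical, the index URL is the cu128 one quoted earlier in the thread, and --no-cache-dir is an assumption added here so that a previously cached CPU wheel is not reused. The script only prints the commands; it does not execute them.

```python
import sys

PACKAGES = ["torch", "torchvision", "torchaudio"]
# Index URL taken from the install command quoted in the thread.
CUDA_INDEX_URL = "https://download.pytorch.org/whl/cu128"


def build_reinstall_commands(python_exe=sys.executable, index_url=CUDA_INDEX_URL):
    """Build the pip commands that remove the old build and install a CUDA one.

    Hypothetical helper: it only constructs the argument lists and runs nothing.
    """
    pip = [python_exe, "-m", "pip"]
    uninstall = pip + ["uninstall", "-y"] + PACKAGES
    install = pip + ["install", "--no-cache-dir"] + PACKAGES + ["--index-url", index_url]
    return [uninstall, install]


if __name__ == "__main__":
    # Dry run: print the commands so they can be reviewed and run by hand.
    for command in build_reinstall_commands():
        print(" ".join(command))
```

Calling pip as python -m pip instead of a bare pip or pip3 avoids installing into a different Python installation than the one that later imports torch.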

I have figured out the real problem. This is my work PC, which is subject to security guidelines on installing pip packages in Python. My company has its own repository for PyPI packages, and I point pip at this index during installations. When I looked in the repository, I found that all the torch builds available there are CPU-only binaries. So even when I used the command for the CUDA binary, only the CPU binary was being installed.

So I installed the pip packages from my home network, without connecting to the company VPN. This directly installed torch compiled with CUDA.
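For others on a managed machine, one way to spot this situation is to check which package index pip is configured to use. The sketch below assumes that pip config list prints one key='value' pair per line and that the PIP_INDEX_URL and PIP_EXTRA_INDEX_URL environment variables may also be set; the helper names are made up for illustration. It would not detect a proxy or mirror that redirects requests transparently.

```python
import os
import subprocess
import sys


def parse_pip_config(text):
    """Parse `pip config list` output (lines like key='value') into a dict."""
    settings = {}
    for line in text.splitlines():
        if "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip().strip("'\"")
    return settings


def index_settings(settings, environ):
    """Collect every index-related setting from the pip config and the environment."""
    found = {key: value for key, value in settings.items() if "index-url" in key}
    for name in ("PIP_INDEX_URL", "PIP_EXTRA_INDEX_URL"):
        if name in environ:
            found[name] = environ[name]
    return found


if __name__ == "__main__":
    result = subprocess.run(
        [sys.executable, "-m", "pip", "config", "list"],
        capture_output=True, text=True, check=False,
    )
    for key, value in index_settings(parse_pip_config(result.stdout), os.environ).items():
        print(f"{key} = {value}")
```

If an internal repository shows up here, it is worth checking whether that repository carries CUDA builds of torch at all before retrying the install.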

Thank you very much for all the help.

May Allah bless and guide you.