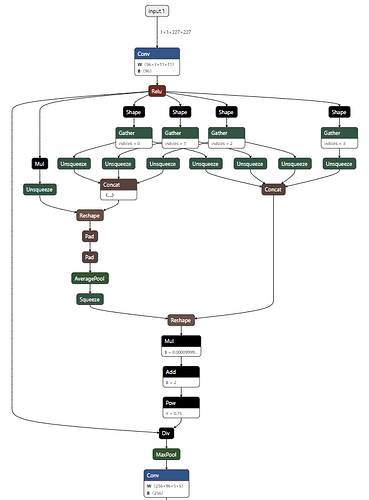

When I convert `nn.LocalResponseNorm(size=5, alpha=1e-4, beta=0.75, k=2.)` to ONNX with `torch.onnx.export`, many layers are generated, and they make inference slower. The code and the ONNX model are shown below.

```python
import torch.nn as nn


class AlexNetADDCStandard_LRN(nn.Module):

    def __init__(self, num_classes: int = 1000, dropout: float = 0.5):
    #def __init__(self, num_classes=1000):
        # super() without arguments avoids the class-name mismatch
        # (AlexNetADDCStandardLRN vs AlexNetADDCStandard_LRN) that raised a NameError
        super().__init__()
        self.features = nn.Sequential(
            #nn.Conv2d(3, 96, kernel_size=11, stride=4, padding=2),
            nn.Conv2d(3, 96, kernel_size=11, stride=4, padding=0),
            nn.ReLU(inplace=True),
            nn.LocalResponseNorm(size=5, alpha=1e-4, beta=0.75, k=2.),  # add LRN
            #nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(96, 256, kernel_size=5, padding=2),
            ...
```

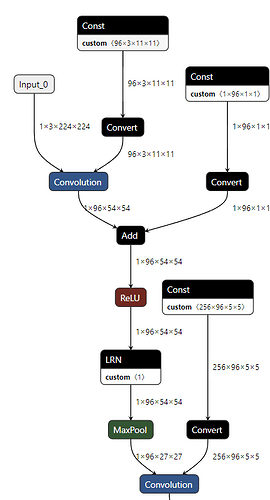
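A likely reason for the many nodes: in PyTorch, `nn.LocalResponseNorm` calls `torch.nn.functional.local_response_norm`, which is written in Python as a chain of primitive ops. It squares the input, zero-pads and average-pools over the channel axis (with reshapes around the pooling step for 4-D input), then multiplies by `alpha`, adds `k`, raises to `beta`, and divides. The tracer records each of these as its own ONNX node, while the ONNX `LRN` operator computes the same result in one node. The pure-Python sketch below is a minimal illustration under simplifying assumptions: the function names are hypothetical, and it works on the channel values at a single `(n, h, w)` position. It mimics that op chain and compares it with the ONNX `LRN` formula.

```python
import math


def lrn_decomposed(x, size, alpha, beta, k):
    """Hypothetical helper mimicking the op sequence of PyTorch's
    F.local_response_norm on the channel values at one (n, h, w) position."""
    sq = [v * v for v in x]                                          # mul (square)
    padded = [0.0] * (size // 2) + sq + [0.0] * ((size - 1) // 2)    # pad channels
    avg = [sum(padded[c:c + size]) / size for c in range(len(x))]    # avg_pool, pads counted
    denom = [(a * alpha + k) ** beta for a in avg]                   # mul, add, pow
    return [v / d for v, d in zip(x, denom)]                         # div


def lrn_onnx(x, size, alpha, beta, bias):
    """Hypothetical helper following the ONNX LRN definition on the same vector."""
    num_channels = len(x)
    out = []
    for c in range(num_channels):
        lo = max(0, c - math.floor((size - 1) / 2))
        hi = min(num_channels - 1, c + math.ceil((size - 1) / 2))
        square_sum = sum(x[i] ** 2 for i in range(lo, hi + 1))
        out.append(x[c] / (bias + alpha / size * square_sum) ** beta)
    return out


x = [1.0, -2.0, 3.0, 0.5, 4.0, -1.5, 2.5]
print(lrn_decomposed(x, 5, 1e-4, 0.75, 2.0))
print(lrn_onnx(x, 5, 1e-4, 0.75, 2.0))
```

For an odd `size` such as 5, both versions use the same symmetric channel window, so they agree up to floating-point rounding. For an even `size` the windows are offset by one: PyTorch pads `size // 2` channels before and `(size - 1) // 2` after, while ONNX takes `floor((size - 1) / 2)` before and `ceil((size - 1) / 2)` after. The equivalence is therefore only claimed for odd sizes.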

However, ONNX supports LRN as a single operator, and I found that a model with just one LRN node is possible. Can `torch.onnx.export` generate just one LRN layer?
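One possible approach, sketched under assumptions and not verified against every PyTorch release: wrap the op in a `torch.autograd.Function` that defines a static `symbolic` method. The TorchScript-based `torch.onnx.export` path uses that method to emit `g.op("LRN", ...)` directly instead of tracing through the primitive ops. The names `_LRNFunction`, `ExportableLRN` and `export_demo` are hypothetical. PyTorch's `k` maps to the ONNX `bias` attribute. The sketch is inference-only because no `backward` is defined. In PyTorch versions where `torch.onnx.export` defaults to the dynamo-based exporter, `symbolic` methods may be ignored, so the TorchScript exporter (`dynamo=False`) would likely be needed.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class _LRNFunction(torch.autograd.Function):
    """Hypothetical wrapper: eager mode runs PyTorch's own LRN, while the
    TorchScript-based ONNX exporter calls `symbolic` to emit one LRN node."""

    @staticmethod
    def forward(ctx, x, size, alpha, beta, k):
        return F.local_response_norm(x, size, alpha=alpha, beta=beta, k=k)

    @staticmethod
    def symbolic(g, x, size, alpha, beta, k):
        # ONNX LRN attributes: size (int), alpha/beta/bias (float).
        # PyTorch's k corresponds to ONNX's bias.
        return g.op("LRN", x, size_i=size, alpha_f=alpha, beta_f=beta, bias_f=k)


class ExportableLRN(nn.LocalResponseNorm):
    """Hypothetical drop-in replacement for nn.LocalResponseNorm (inference only)."""

    def forward(self, x):
        return _LRNFunction.apply(x, self.size, self.alpha, self.beta, self.k)


def export_demo(path="lrn_single_node.onnx"):
    # Hypothetical helper: first layers of the model above, with ExportableLRN swapped in.
    model = nn.Sequential(
        nn.Conv2d(3, 96, kernel_size=11, stride=4, padding=0),
        nn.ReLU(inplace=True),
        ExportableLRN(size=5, alpha=1e-4, beta=0.75, k=2.0),
    ).eval()
    dummy = torch.randn(1, 3, 227, 227)
    torch.onnx.export(model, (dummy,), path, opset_version=13)
```

Replacing `nn.LocalResponseNorm(...)` with `ExportableLRN(...)` in `self.features` should leave eager-mode outputs unchanged, because `forward` calls the same functional op. Whether the exported file really contains a single `LRN` node is worth checking in a graph viewer after export.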