111114

August 29, 2023, 4:35am

1

Hello

Thank you

```python
import torch

# `model` is the poster's ASR model (its definition is not shown in the post)
torch.onnx.export(
    model,
    (torch.randn(3, 80, 355), torch.ones(3, 355)),  # dummy (input_features, feature_mask)
    "./onnx/asr.onnx",
    export_params=True,
    opset_version=14,
    input_names=["input_features", "feature_mask"],
    output_names=["logits"],
    dynamic_axes={
        "input_features": {0: "batch_size", 2: "sequence_length"},
        "feature_mask": {0: "batch_size", 1: "sequence_length"},
        "logits": {0: "batch_size", 1: "half_sequence_length"},
    },
)
```
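The `dynamic_axes` mapping above marks which dimensions should stay symbolic in the exported graph. Before exporting, it can help to check it against the dummy inputs. The sketch below uses a hypothetical helper, `check_dynamic_axes` (not part of `torch.onnx`), and assumes that reusing a symbolic name such as `sequence_length` on two inputs means those dimensions are meant to have the same size. Outputs such as `logits` are skipped, since their shapes are only known after export.

```python
from typing import Dict, List, Sequence, Tuple


def check_dynamic_axes(
    shapes: Dict[str, Sequence[int]],
    dynamic_axes: Dict[str, Dict[int, str]],
) -> List[str]:
    """Hypothetical sanity check for a torch.onnx.export dynamic_axes mapping."""
    problems: List[str] = []
    # symbolic name -> (tensor name, axis, concrete size) where it was first seen
    seen: Dict[str, Tuple[str, int, int]] = {}
    for name, axes in dynamic_axes.items():
        if name not in shapes:
            # No dummy shape available (e.g. an output like "logits"): skip it
            continue
        shape = shapes[name]
        for axis, symbol in axes.items():
            if not 0 <= axis < len(shape):
                problems.append(f"{name}: axis {axis} out of range for rank {len(shape)}")
                continue
            size = shape[axis]
            if symbol in seen and seen[symbol][2] != size:
                other, other_axis, other_size = seen[symbol]
                problems.append(
                    f"'{symbol}': {name}[{axis}]={size} vs {other}[{other_axis}]={other_size}"
                )
            else:
                seen.setdefault(symbol, (name, axis, size))
    return problems


# Shapes of the dummy inputs used in the export call above
shapes = {"input_features": (3, 80, 355), "feature_mask": (3, 355)}
dynamic_axes = {
    "input_features": {0: "batch_size", 2: "sequence_length"},
    "feature_mask": {0: "batch_size", 1: "sequence_length"},
    "logits": {0: "batch_size", 1: "half_sequence_length"},
}
print(check_dynamic_axes(shapes, dynamic_axes))  # → []
```

An empty list means every axis index is in range and every shared symbolic name maps to the same concrete size in the dummy inputs.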

QJ-Chen

September 4, 2023, 12:44pm

2

Same problem with `torch.__version__` '2.0.1+cu117'.

111114

September 6, 2023, 6:50am

3

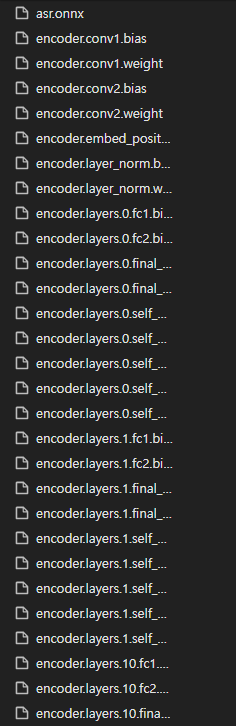

Hello, when I pass a `torch.jit.trace`d model to `torch.onnx.export`, all the weights are packed into the `asr.onnx` file.

```python
import torch

# `model`, `dummy_input_features` and `dummy_feature_mask` are defined elsewhere in
# the poster's code; the model is presumably already on cuda:0 and cast to float16,
# so that it matches the half-precision CUDA dummy inputs passed to the exporter.
traced_model = torch.jit.trace(model, (dummy_input_features, dummy_feature_mask))

torch.onnx.export(
    traced_model,
    (
        torch.randn(3, 80, 355, dtype=torch.float16, device="cuda:0"),
        torch.ones(3, 355, dtype=torch.float16, device="cuda:0"),
    ),
    "./onnx/asr.onnx",
    export_params=True,
    opset_version=14,
    input_names=["input_features", "feature_mask"],
    output_names=["logits"],
    dynamic_axes={
        "input_features": {0: "batch_size", 2: "sequence_length"},
        "feature_mask": {0: "batch_size", 1: "sequence_length"},
        "logits": {0: "batch_size", 1: "half_sequence_length"},
    },
)
```

QJ-Chen

September 6, 2023, 7:35am

4

The JIT-traced model does not work for me. Moving the model to CUDA and casting it to float16 (half), as you also did, does work for me. Maybe that's the solution.
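One possible explanation, which nobody in this thread confirms: an ONNX model is a single protobuf message, and protobuf caps a message at about 2 GB, so as far as I know `torch.onnx.export` writes the weights of larger models to separate external-data files next to the `.onnx` file. Casting float32 weights to float16 halves their size, which may be enough to bring the model under that limit. The sketch below is a back-of-the-envelope check under that assumption; the helper names and the 600M-parameter figure are hypothetical, and graph/metadata overhead is ignored.

```python
# Assumption: a single ONNX protobuf file is limited to 2 GiB; above that,
# weights are stored as external data instead of inside the .onnx file.
PROTOBUF_LIMIT_BYTES = 2**31

# Bytes per element for a few common floating-point dtypes
BYTES_PER_ELEMENT = {"float32": 4, "float16": 2, "bfloat16": 2}


def weight_bytes(num_params: int, dtype: str) -> int:
    """Approximate raw weight size; ignores graph structure and metadata."""
    return num_params * BYTES_PER_ELEMENT[dtype]


def likely_fits_single_file(num_params: int, dtype: str) -> bool:
    """True if the raw weights alone stay below the assumed 2 GiB limit."""
    return weight_bytes(num_params, dtype) < PROTOBUF_LIMIT_BYTES


# Hypothetical 600M-parameter model (not the size of the model in this thread)
n = 600_000_000
print(likely_fits_single_file(n, "float32"))  # 2.4e9 bytes -> False
print(likely_fits_single_file(n, "float16"))  # 1.2e9 bytes -> True
```

For a real PyTorch module, the parameter count can be obtained with `sum(p.numel() for p in model.parameters())` and passed in as `num_params`.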