I ran my model with the torch profiler enabled, based on the example in the PyTorch Profiler tutorial (PyTorch Tutorials 2.2.0+cu121 documentation):

    import torch.autograd.profiler as profiler

    with profiler.profile(record_shapes=True) as prof:
        with profiler.record_function("model_inference"):
            model(inputs)

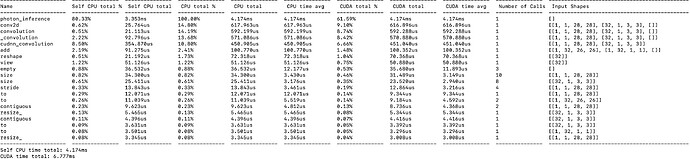

The resulting table shows a large model_inference time, but the individual calls don't add up to this number. I've checked all the columns: self CPU, CPU total, CUDA total, etc. Does this imply that some time is not accounted for in the profile? How should I interpret the model_inference time? How can I go about figuring out where that extra time is going? Any suggestions are much appreciated.
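To make the comparison concrete, here is a minimal sketch of how the gap could be measured. The helper `cpu_time_gap` is a hypothetical name, not part of PyTorch, and the rows below are made-up stand-ins; it only assumes each row has the `key`, `self_cpu_time_total` and `cpu_time_total` attributes that the entries of `prof.key_averages()` expose. It also assumes every recorded op ran inside the labelled region, as in the snippet above.

```python
from types import SimpleNamespace


def cpu_time_gap(events, label="model_inference"):
    """Compare a labelled region's total CPU time with the summed
    self CPU time of every other row (hypothetical helper)."""
    # The row created by record_function(label).
    region = next(e for e in events if e.key == label)
    # Self time counts each op once, so nested calls are not double-counted.
    others_self = sum(e.self_cpu_time_total for e in events if e.key != label)
    # Whatever is left was spent inside the region but outside any recorded op.
    return region.cpu_time_total, others_self, region.cpu_time_total - others_self


# Stand-in rows with invented numbers, only to show the arithmetic.
rows = [
    SimpleNamespace(key="model_inference", self_cpu_time_total=300, cpu_time_total=1000),
    SimpleNamespace(key="aten::conv2d", self_cpu_time_total=500, cpu_time_total=500),
    SimpleNamespace(key="aten::relu", self_cpu_time_total=200, cpu_time_total=200),
]

total, others, gap = cpu_time_gap(rows)
print(total, others, gap)
```

With a real profile, the same function could be called as `cpu_time_gap(prof.key_averages())`. If the gap roughly matches the self CPU value on the model_inference row, the time is probably being spent inside the region but outside any recorded operator (for example Python overhead between ops), though that is a guess until checked against the actual table.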