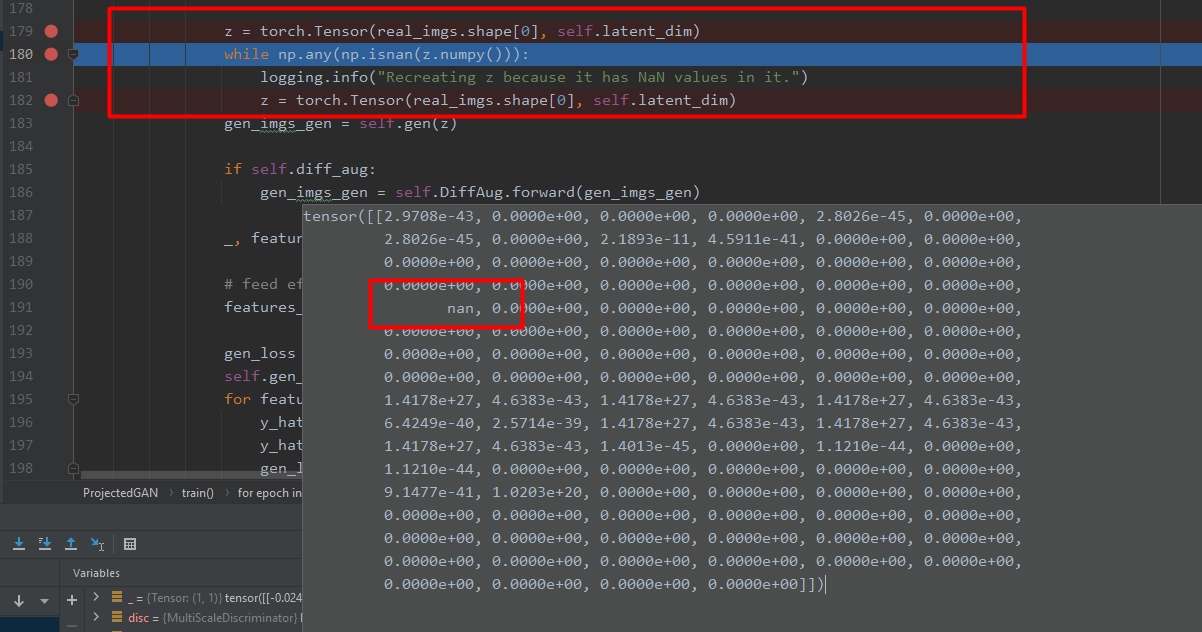

I'm experiencing something really weird: `torch.randn` repeatedly gives me NaN values at some positions in the tensor.

It gets weirder: the while loop sometimes never exits. Once it gets stuck in the loop, the NaN values come back on every new draw, so at that point it no longer seems to be “random”.

And it gets even weirder: when I run the same code for producing the random latent code on its own, in a separate file, this never happens. It never produces any NaN values there.
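A minimal sketch of such a standalone check, assuming a latent batch of shape `(batch_size, z_dim)`. The helper name `sample_latent`, the sizes and `max_tries` are hypothetical placeholders, not values from the linked repo. Unlike an unbounded while loop, it retries only a fixed number of times and reports how many NaNs each draw contained.

```python
import torch


def sample_latent(batch_size: int, z_dim: int, device: str = "cpu", max_tries: int = 5) -> torch.Tensor:
    """Draw a standard-normal latent batch, retrying a bounded number of times.

    Hypothetical helper for illustration; shapes and max_tries are placeholders.
    """
    for attempt in range(1, max_tries + 1):
        z = torch.randn(batch_size, z_dim, device=device)
        n_nan = int(torch.isnan(z).sum().item())
        if n_nan == 0:
            return z
        # Report each bad draw instead of looping silently.
        print(f"attempt {attempt}: {n_nan} NaN values in latent batch")
    # Fail loudly rather than hang forever.
    raise RuntimeError(f"torch.randn produced NaNs in {max_tries} consecutive draws")


if __name__ == "__main__":
    z = sample_latent(batch_size=8, z_dim=256)
    print(z.shape, bool(torch.isfinite(z).all()))
```

Raising after `max_tries` turns a silent hang into an error with a NaN count, which makes the failing case easier to pin down and report.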

I have no idea what to do about this. Has anyone else experienced this?

I also have the whole code on GitHub, if that helps: https://github.com/dome272/ProjectedGAN-pytorch/blob/main/projected_gan.py#L142