che85

January 23, 2020, 8:23pm

1

Hi all,

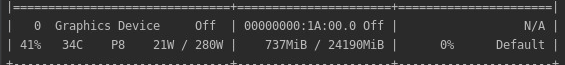

I am trying to figure out why torch allocates so much memory for a tensor that, at least to me, doesn’t look like it should need this much:

weights = torch.tensor([0.3242]).cuda()

This tensor allocates more than 737MB on my GPU and I have absolutely no idea why this would happen.

I am using torch 1.1 but also tried torch 1.3, which results in more than 790MB of memory being allocated.

Neither

weights = weights.cpu()

torch.cuda.empty_cache()

nor

weights = weights.detach().cpu()

torch.cuda.empty_cache()

nor

del weights

torch.cuda.empty_cache()

has any effect. The memory stays allocated.

Does anyone know what to do in this case?

Thanks a lot.

The CUDA context is created on the device before the first tensor is allocated, and the context itself uses memory.
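A minimal sketch of how one might check this, assuming a PyTorch release that provides `torch.cuda.memory_reserved()` (older releases exposed `torch.cuda.memory_cached()` instead). The helper name `cuda_memory_report` is made up for illustration. It compares the tensor's own payload with what PyTorch's allocator reports; whatever `nvidia-smi` shows beyond the reserved amount would then be attributable to the context rather than to the tensor.

```python
try:
    import torch
except ImportError:  # lets the sketch load on a machine without PyTorch
    torch = None


def cuda_memory_report():
    """Hypothetical helper: PyTorch's view of GPU memory after creating one
    tiny tensor. Returns None if PyTorch or a CUDA device is unavailable."""
    if torch is None or not torch.cuda.is_available():
        return None

    # The first CUDA operation creates the context on the device.
    weights = torch.tensor([0.3242]).cuda()

    return {
        # Payload of the tensor itself: 1 element * 4 bytes (float32).
        "tensor_bytes": weights.element_size() * weights.nelement(),
        # Bytes occupied by live tensors, as tracked by PyTorch.
        "allocated_bytes": torch.cuda.memory_allocated(),
        # Bytes held by PyTorch's caching allocator (at least the allocated amount).
        "reserved_bytes": torch.cuda.memory_reserved(),
    }


if __name__ == "__main__":
    print(cuda_memory_report())
```

Since `torch.cuda.empty_cache()` only returns unused cached blocks from the allocator, it would not be expected to shrink the part of the usage that belongs to the context.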


mrartemev

December 20, 2020, 9:30am

3

Hi!

ptrblck

December 21, 2020, 5:53am

4

Unfortunately that’s not easily doable, as it depends on the CUDA version, the number of native PyTorch kernels, the number of used compute capabilities, the number of 3rd party libs (such as cuDNN, NCCL), etc. [...] nvidia-smi.
