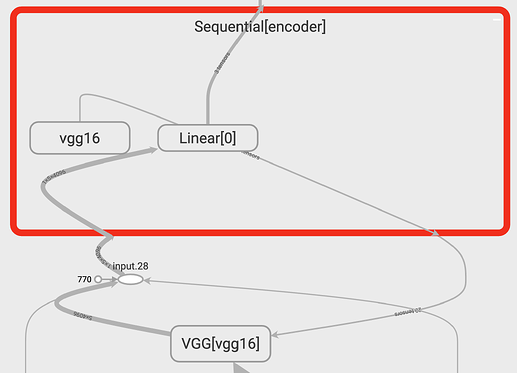

I built a video encoder that is a simple combination of VGG16 and a linear layer:

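    import torch
    import torchvision

    # Assumption: vgg16 is torchvision's VGG16 with its last classifier layer
    # removed, so each frame is mapped to a 4096-dim feature vector.
    vgg16 = torchvision.models.vgg16()
    vgg16.classifier = vgg16.classifier[:-1]


    class Encoder(torch.nn.Module):

        def __init__(self, emb_dimension):
            super(Encoder, self).__init__()
            self.vgg16 = vgg16  # registered as a submodule
            self.encoder = torch.nn.Sequential(
                torch.nn.Linear(4096, emb_dimension),
                # torch.nn.ReLU()
            )

        def forward(self, x):
            # x: (batch_size, time_steps, channels, height, width)
            batch_size, time_steps, *dims = x.shape
            # Fold time into the batch so VGG16 processes individual frames
            x = x.view(batch_size * time_steps, *dims)
            x = self.vgg16(x)  # (batch_size * time_steps, 4096)
            # Unfold back to one feature vector per frame
            x = x.view(batch_size, time_steps, -1)
            x = self.encoder(x)  # (batch_size, time_steps, emb_dimension)
            return x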
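The time folding in `forward` can be checked in isolation. This is a minimal sketch under stated assumptions: a tiny hypothetical `backbone` (Flatten + Linear) stands in for VGG16 so it runs quickly on 8x8 frames, and a hypothetical `head` stands in for `self.encoder`. It prints the shapes at each step and checks that frame `(i, j)` of the clip ends up at position `[i, j]` after the second `view`.

```python
import torch

torch.manual_seed(0)

# Hypothetical stand-in for VGG16: maps (N, 3, 8, 8) frames to (N, 4096) features.
backbone = torch.nn.Sequential(torch.nn.Flatten(), torch.nn.Linear(3 * 8 * 8, 4096))
head = torch.nn.Linear(4096, 128)  # plays the role of self.encoder

clip = torch.randn(2, 5, 3, 8, 8)  # (batch_size, time_steps, C, H, W)
batch_size, time_steps, *dims = clip.shape

frames = clip.view(batch_size * time_steps, *dims)         # (10, 3, 8, 8)
feats = backbone(frames).view(batch_size, time_steps, -1)  # (2, 5, 4096)
emb = head(feats)                                           # (2, 5, 128)
print(emb.shape)  # torch.Size([2, 5, 128])

# Row i * time_steps + j of the folded batch is frame (i, j), so the unfolded
# embeddings line up with the original clip/frame indices.
single = head(backbone(clip[1, 3].unsqueeze(0)))[0]
print(torch.allclose(emb[1, 3], single, atol=1e-5))  # True
```

Because `nn.Linear` acts on the last dimension only, the embedding layer can be applied directly to the `(batch_size, time_steps, 4096)` tensor without folding it again.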

I don't understand why the TensorBoard graph shows 20 tensors flowing back from the Sequential layer to VGG. I'm worried this might affect the gradient computation.

Does anyone have an idea?
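One way to test the gradient concern independently of what the TensorBoard graph draws is to compare gradients numerically. The sketch below is only an illustration under assumptions: the hypothetical `backbone` and `head` modules stand in for VGG16 and the linear embedding layer, and the loss is arbitrary. It computes the gradient of the backbone's weight once with time folded into the batch (as in the `Encoder` above) and once by running every frame separately, then checks that the two agree. It does not explain the edges shown in TensorBoard.

```python
import torch

torch.manual_seed(0)

# Hypothetical stand-ins: `backbone` plays the role of VGG16
# (frame -> 4096 features) and `head` the Linear embedding layer.
backbone = torch.nn.Sequential(torch.nn.Flatten(), torch.nn.Linear(3 * 8 * 8, 4096))
head = torch.nn.Linear(4096, 16)
clip = torch.randn(2, 5, 3, 8, 8)  # (batch, time, C, H, W)


def backbone_grad(batched: bool) -> torch.Tensor:
    """Return d(loss)/d(backbone Linear weight) for one forward/backward pass."""
    backbone.zero_grad()
    head.zero_grad()
    b, t, *dims = clip.shape
    if batched:
        # Fold time into the batch, as in the Encoder's forward
        feats = backbone(clip.reshape(b * t, *dims)).reshape(b, t, -1)
    else:
        # Reference: run each frame through the backbone on its own
        feats = torch.stack([
            torch.stack([backbone(clip[i, j].unsqueeze(0))[0] for j in range(t)])
            for i in range(b)
        ])
    loss = head(feats).pow(2).mean()  # arbitrary illustrative loss
    loss.backward()
    return backbone[1].weight.grad.clone()


g_batched = backbone_grad(batched=True)
g_loop = backbone_grad(batched=False)
print(torch.allclose(g_batched, g_loop, rtol=1e-4, atol=1e-6))
```

If the same comparison holds for the real model (with VGG16 in eval mode so dropout does not randomize the two passes), the folding itself is not distorting the gradients.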