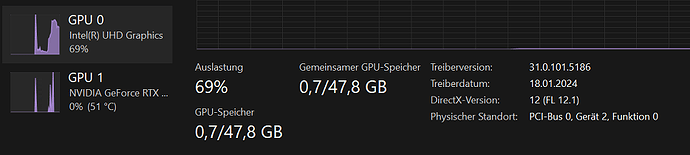

I am using a laptop with two GPUs. The first is an Intel(R) UHD Graphics and the second is an NVIDIA GeForce RTX 4090 Laptop GPU. I want to run a RAG application with a llama3 model on the second (NVIDIA) GPU. When I run it, the model is loaded into memory, and the second GPU is used only until streaming of the output starts. During streaming, only the first (Intel) GPU is used. I observed this by running the application and watching the GPU performance graphs in Task Manager.
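As a cross-check that does not depend on Task Manager, the NVIDIA GPU can also be polled directly. The following is only a minimal sketch, assuming `nvidia-smi` is on the PATH and reports numeric values; the helper names `parse_gpu_stats` and `sample_gpu_stats` are made up for illustration.

```python
import shutil
import subprocess

# Ask nvidia-smi for utilization (%) and used memory (MiB) as bare CSV.
QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,memory.used",
    "--format=csv,noheader,nounits",
]


def parse_gpu_stats(csv_text):
    """Turn lines like '37, 10240' into (utilization_percent, memory_mib) tuples.

    Assumes both fields are numeric; a value such as '[N/A]' would raise ValueError.
    """
    stats = []
    for line in csv_text.strip().splitlines():
        util, mem = (field.strip() for field in line.split(","))
        stats.append((int(util), int(mem)))
    return stats


def sample_gpu_stats():
    """Run nvidia-smi once; return None if the tool is not installed."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)
```

Calling `sample_gpu_stats()` repeatedly (for example from a second console) while the answer is being generated would show whether the NVIDIA GPU is idle at that point or not.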

The problem can be reproduced with the following code:

    import torch
    import os
    from transformers import AutoModelForCausalLM, AutoTokenizer, TextIteratorStreamer
    from pathlib import Path

    torch.set_default_device("cuda")

    dir_ = Path(__file__).parent

    model_path = "my_path"
    tokenizer = AutoTokenizer.from_pretrained(model_path, device_map="cuda")
    model = AutoModelForCausalLM.from_pretrained(
        model_path,
        torch_dtype=torch.bfloat16,
        device_map="cuda",
        load_in_8bit=True
    )
    model.generation_config.pad_token_id = tokenizer.pad_token_id

    terminators = [
        tokenizer.eos_token_id,
        tokenizer.convert_tokens_to_ids("<|eot_id|>")
    ]


    def respond(message):
        messages = []
        # German prompt: "Question: '{query}'"
        user_message = {"role": "user", "content":
            """
            Frage: '{query}'
            """.format(query=message)}
        # German system prompt: "You are an assistant that answers questions."
        messages.extend([
            {"role": "system", "content": 'Du bist ein Assistent, der Fragen beantwortet.'},
        ])
        messages.append(user_message)

        text = tokenizer.apply_chat_template(
            messages,
            tokenize=False,
            add_generation_prompt=True
        )
        model_inputs = tokenizer([text], return_tensors="pt").to('cuda:0')

        generated_ids = model.generate(
            model_inputs.input_ids,
            do_sample=True,
            temperature=0.01,
            top_p=0.9
        )
        answer = tokenizer.decode(generated_ids[0], skip_special_tokens=True)
        answer = answer.split('assistant')[-1]
        return answer


    # German: "What sights are there in Berlin?"
    print(respond('Welche Sehenswürdigkeiten gibt es in Berlin?'))

I checked that CUDA is using the right device by running python -m torch.utils.collect_env in the console. The output was:

    <frozen runpy>:128: RuntimeWarning: 'torch.utils.collect_env' found in sys.modules after import of package 'torch.utils', but prior to execution of 'torch.utils.collect_env'; this may result in unpredictable behaviour
    Collecting environment information...
    PyTorch version: 2.4.0+cu124
    Is debug build: False
    CUDA used to build PyTorch: 12.4
    ROCM used to build PyTorch: N/A

    OS: Microsoft Windows 11 Pro
    GCC version: Could not collect
    Clang version: Could not collect
    CMake version: Could not collect
    Libc version: N/A

    Python version: 3.11.7 (tags/v3.11.7:fa7a6f2, Dec 4 2023, 19:24:49) [MSC v.1937 64 bit (AMD64)] (64-bit runtime)
    Python platform: Windows-10-10.0.22631-SP0
    Is CUDA available: True
    CUDA runtime version: 12.4.131
    CUDA_MODULE_LOADING set to: LAZY
    GPU models and configuration: GPU 0: NVIDIA GeForce RTX 4090 Laptop GPU
    Nvidia driver version: 551.78
    cuDNN version: Could not collect
    HIP runtime version: N/A
    MIOpen runtime version: N/A
    Is XNNPACK available: True

    CPU:
    Architecture=9
    CurrentClockSpeed=2200
    DeviceID=CPU0
    Family=207
    L2CacheSize=32768
    L2CacheSpeed=
    Manufacturer=GenuineIntel
    MaxClockSpeed=2200
    Name=13th Gen Intel(R) Core(TM) i9-13900HX
    ProcessorType=3
    Revision=

    Versions of relevant libraries:
    [pip3] mypy-extensions==1.0.0
    [pip3] numpy==1.26.3
    [pip3] onnxruntime==1.16.3
    [pip3] torch==2.4.0+cu124
    [pip3] torchaudio==2.4.0+cu124
    [pip3] torchvision==0.19.0+cu124
    [conda] Could not collect

I also tried

    print(torch.cuda.is_available())
    print(torch.cuda.device_count())
    print(torch.cuda.current_device())
    print(torch.cuda.get_device_name(0))

and got

    True
    1
    0
    'NVIDIA GeForce RTX 4090 Laptop GPU'

As far as I understand, everything runs on CUDA, and since the NVIDIA GPU is the only CUDA device, only this GPU should be used.
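One way to back up this assumption is to list the devices that actually hold the model's weights after loading. This is only a sketch: `parameter_devices` and `all_on_cuda` are hypothetical helper names, and the code relies solely on the `parameters()` and `buffers()` methods that every `torch.nn.Module` provides.

```python
def parameter_devices(model):
    """Collect the device of every parameter and buffer, as strings."""
    devices = {str(p.device) for p in model.parameters()}
    devices.update(str(b.device) for b in model.buffers())
    return devices


def all_on_cuda(model):
    """True if the model has weights and every one of them sits on a CUDA device."""
    devices = parameter_devices(model)
    return bool(devices) and all(d.startswith("cuda") for d in devices)
```

With the reproduction code above, this would be called as `print(parameter_devices(model))` right after `from_pretrained`. A result that contains only CUDA devices would confirm that no weights were placed on the CPU.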

Does anybody know how to make the application use only the NVIDIA GPU? Why is the Intel GPU used when it is not even detected by CUDA?