I have exported my model to TorchScript format with `torch.jit.trace`.

But when I run inference with the scripted model, it needs about 3 times as much GPU memory as the checkpoint:

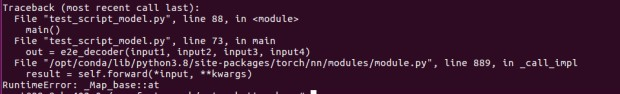

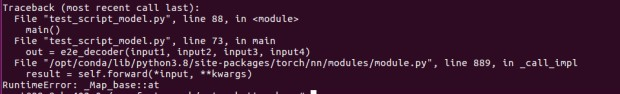

However, when I add `with torch.no_grad()` at the beginning of inference, I can run it only once; the second time I run inference, I always get this error:

I called `model.eval()` and used `with torch.no_grad()` when I traced my model. How can I deal with the memory usage problem?

The `state_dict` only stores the registered buffers and parameters of the model, which might be smaller than the forward activations that are created during the forward pass and kept around to calculate the gradients.
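To give a feeling for the sizes involved, here is some rough arithmetic for a single convolution layer. The layer shape, batch size, and resolution are made-up numbers for illustration, not taken from your model, and the helper names are hypothetical:

```python
BYTES_PER_FLOAT32 = 4


def conv2d_param_bytes(in_ch, out_ch, kernel):
    """Bytes held by the weights and biases of one Conv2d layer (float32)."""
    weights = out_ch * in_ch * kernel * kernel
    biases = out_ch
    return (weights + biases) * BYTES_PER_FLOAT32


def activation_bytes(batch, channels, height, width):
    """Bytes held by one float32 activation tensor of the given shape."""
    return batch * channels * height * width * BYTES_PER_FLOAT32


# Assumed example: 3x3 conv, 64 -> 64 channels, batch of 8, 224x224 output.
param_bytes = conv2d_param_bytes(64, 64, 3)
act_bytes = activation_bytes(8, 64, 224, 224)

print(f"parameters:  {param_bytes / 2**20:.2f} MiB")
print(f"activations: {act_bytes / 2**20:.2f} MiB")
```

With these assumed numbers the layer's parameters take well under 1 MiB while its single output activation takes 98 MiB, so the checkpoint size says little about the memory needed at run time.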

`no_grad()` disables storing the forward activations, as no gradients are calculated during inference, which would explain why this approach seems to work.
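A minimal sketch of what this looks like in code, assuming a small stand-in model (your real model and input shapes are not shown in the post, so everything named here is a placeholder) and running on the CPU for simplicity:

```python
import torch
import torch.nn as nn

# Hypothetical stand-in model; replace with your own.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 8, kernel_size=3, padding=1),
)
model.eval()

example = torch.randn(1, 3, 32, 32)

# Eager forward pass with autograd enabled: the output is attached to a graph,
# so intermediate activations are kept alive for a possible backward pass.
out_tracked = model(example)

# Same forward pass under no_grad: no graph is recorded.
with torch.no_grad():
    out_inference = model(example)

print(out_tracked.grad_fn is not None)  # graph was recorded
print(out_inference.grad_fn is None)    # no graph under no_grad

# Trace the model and wrap every inference call in no_grad as well.
with torch.no_grad():
    traced = torch.jit.trace(model, example)
    out_traced = traced(example)

print(out_traced.requires_grad)
```

Using `no_grad()` while tracing does not by itself cover later calls, so in this sketch each inference call on the traced module is placed inside its own `with torch.no_grad():` block.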

I don’t see the error message, so I don’t know what’s happening.

Could you post the error message by wrapping it into three backticks ```, please?