Following the example here, I am trying to implement transforms in a custom Dataset.

```python
from torchvision import transforms as T

normalize = T.Normalize(mean=[0.485, 0.456, 0.406],
                        std=[0.229, 0.224, 0.225])  # from ImageNet

t = T.Compose([
    T.RandomResizedCrop(224),
    T.RandomHorizontalFlip(),
    T.ToTensor(),
    normalize,
])
```

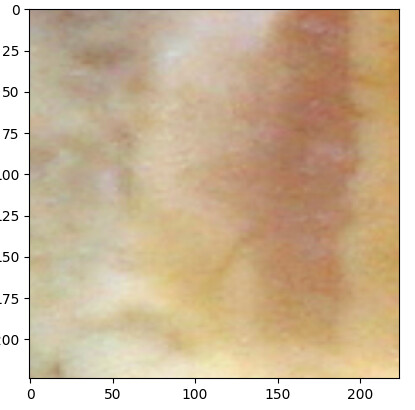

Since my RGB images are huge, I tile them into patches before applying the transforms in Custom_dataset:

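```python
import numpy as np
import torch
from PIL import Image
from torchvision import transforms as T

def __getitem__(self, index):
    im = Image.open(self.imList[index]).convert('RGB')
    mk = Image.open(self.mkList[index]).convert('L')  # binary mask
    im = np.asarray(im)
    mk = np.asarray(mk)
```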

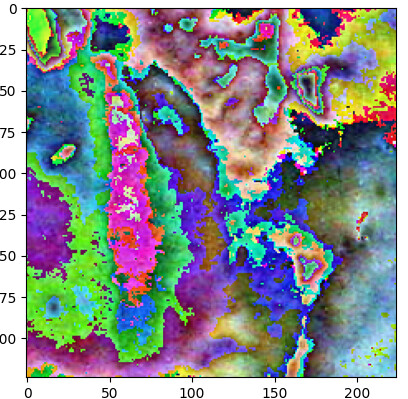

```python
    im, mk = patchify(im, mk, [224, 224], [100, 100])
    i_t_s = torch.empty([64, 3, 224, 224])  # size of im patches
    m_t_s = torch.empty([64, 224, 224])     # size of mk patches
    for l in range(len(im)):  # for the 64 small image patches
        PIL_i = Image.fromarray(im[l, ...]).convert('RGB')
        PIL_m = Image.fromarray(mk[l, ...]).convert('L')
        i_t = self.tform(PIL_i)    # [3, 224, 224]
        m_t = T.ToTensor()(PIL_m)  # [1, 224, 224]
        i_t_s[l, ...] = i_t
        m_t_s[l, ...] = m_t
    # Two-channel mask: channel 1 = foreground, channel 0 = background
    m_t_o = torch.ones(2, 64, 224, 224)
    m_t_o[1, ...] = m_t_s == 1.
    m_t_o[0, ...] = m_t_o[0, ...] - m_t_o[1, ...]
    m_t_o = torch.transpose(m_t_o, 1, 0)
    return i_t_s, m_t_o  # sizes: [64, 3, 224, 224], [64, 2, 224, 224]
```
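The two-channel mask built at the end of `__getitem__` can possibly be written more compactly with `torch.nn.functional.one_hot`. This is a sketch with a random stand-in for `m_t_s` (assumed to hold only 0.0 and 1.0); it produces the same `[64, 2, 224, 224]` tensor, with channel 0 as background and channel 1 as foreground.

```python
import torch
import torch.nn.functional as F

# Hypothetical stand-in for m_t_s: 64 binary mask patches with values 0.0 / 1.0
m_t_s = (torch.rand(64, 224, 224) > 0.5).float()

# Class index per pixel: 1 where the mask is foreground, 0 elsewhere
labels = (m_t_s == 1.0).long()             # [64, 224, 224]
m_t_o = F.one_hot(labels, num_classes=2)   # [64, 224, 224, 2], int64
m_t_o = m_t_o.permute(0, 3, 1, 2).float()  # [64, 2, 224, 224]
print(m_t_o.shape)  # torch.Size([64, 2, 224, 224])
```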

Questions:

- How do I apply the same transformation to both an image patch and its paired mask patch?
- `T.Normalize` must be applied to a Tensor, i.e. after `T.ToTensor()`, and it changes the image values from [0, 1] to normalized values outside [0, 1]. Is there a way to normalize first and then apply `T.ToTensor()`, so that the values stay within [0, 1]?

N.B. `patchify` creates paired patches from the RGB image and the binary mask.
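Since `patchify` itself is not shown, here is a hypothetical stand-in, `tile_pair`, that illustrates what "paired patches" means: the same sliding window is used for the image and the mask. It assumes that the second argument (`[100, 100]` in the call above) is the step between windows, which is only a guess; with a step smaller than the patch size, neighbouring patches overlap.

```python
import numpy as np

def tile_pair(im, mk, patch=(224, 224), step=(100, 100)):
    """Hypothetical stand-in for `patchify`: cut an HxWx3 image and its HxW mask
    into aligned patches of size `patch`, moving the window by `step` pixels."""
    ph, pw = patch
    sh, sw = step
    H, W = mk.shape
    im_patches, mk_patches = [], []
    for top in range(0, H - ph + 1, sh):
        for left in range(0, W - pw + 1, sw):
            # Identical window for image and mask keeps every pair aligned.
            im_patches.append(im[top:top + ph, left:left + pw])
            mk_patches.append(mk[top:top + ph, left:left + pw])
    return np.stack(im_patches), np.stack(mk_patches)

# Synthetic 524x524 input whose RGB channels copy the mask, so alignment is checkable.
mk = (np.arange(524 * 524) % 255).astype(np.uint8).reshape(524, 524)
im = np.repeat(mk[..., None], 3, axis=2)
ims, mks = tile_pair(im, mk)
print(ims.shape, mks.shape)  # (16, 224, 224, 3) (16, 224, 224)
```

The outputs index the same way as `im[l, ...]` and `mk[l, ...]` in the loop of `__getitem__`.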