Hello,

I am trying to apply a transformation to a set of images.

`x` is a FloatTensor normalized to [0, 1] with shape [40479, 3, 40, 40], where the dimension of size 3 holds the RGB channels.

I created a class (which I found on the web):

    from torch.utils.data import Dataset

    class CustomTensorDataset(Dataset):
        """TensorDataset with support for transforms."""

        def __init__(self, tensors, transform=None):
            # All tensors must have the same number of samples (dimension 0).
            assert all(tensors[0].size(0) == tensor.size(0) for tensor in tensors)
            self.tensors = tensors
            self.transform = transform

        def __getitem__(self, index):
            # tensors[0] holds the images, tensors[1] the targets.
            x = self.tensors[0][index]
            if self.transform:
                x = self.transform(x)
            y = self.tensors[1][index]
            return x, y

        def __len__(self):
            return self.tensors[0].size(0)

I then define the transformations:

    from torchvision import transforms

    transformations = transforms.Compose([
        transforms.ToPILImage(),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor()
    ])

Finally, I create the dataset with:

    data_transform = CustomTensorDataset(tensors=(xx, yy), transform=transformations)

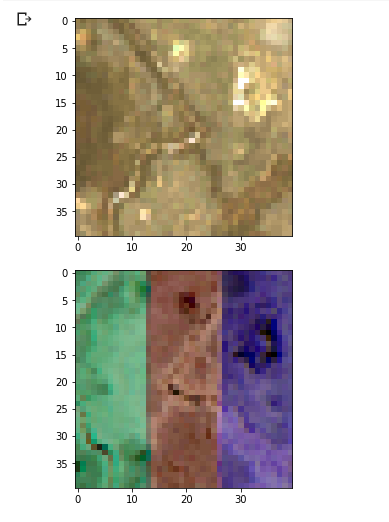

In the figure, the top image is the original and the bottom image is the transformed one. As you can see, only the left and right panels are horizontally flipped. Is that normal?
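For comparison, here is a minimal sketch of the behavior I expected, assuming a horizontal flip should simply reverse the width axis (the last one in a [C, H, W] tensor) in every channel. The tiny `img` tensor is a made-up stand-in for one of my images, and `torch.flip` is used here instead of `RandomHorizontalFlip` so the result is deterministic; it is only meant as a reference point, not as a replacement for the pipeline above.

```python
import torch

# Hypothetical stand-in for one image: 3 channels, 2 rows, 4 columns, values in [0, 1].
img = torch.arange(3 * 2 * 4, dtype=torch.float32).reshape(3, 2, 4) / 23.0

# A horizontal flip reverses the width axis, which is the last one in [C, H, W].
flipped = torch.flip(img, dims=[-1])

# Every row of every channel should be mirrored left to right.
for c in range(img.size(0)):
    for r in range(img.size(1)):
        assert torch.equal(flipped[c, r], img[c, r].flip(0))

# Flipping a second time should give back the original image.
assert torch.equal(torch.flip(flipped, dims=[-1]), img)
```

If the output of the dataset does not match this kind of whole-image mirroring, I would guess the issue is in how my tensor is laid out rather than in the flip itself, but I am not sure.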

Any help would be appreciated. Thank you!