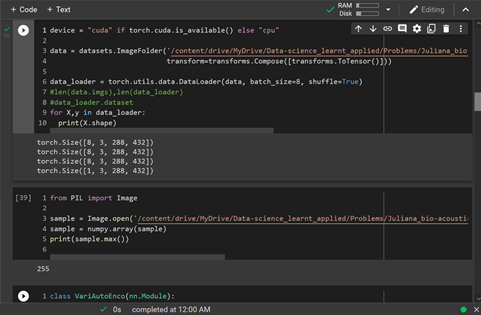

I’m working with spectrograms; each image is 288 * 432. ToTensor() isn’t working for me. I believe it should have brought the height and width of each one between 0 and 1.

Can someone please help?

Thank you.

Hi,

Please refer to the docs and check whether your images fall into one of the cases where the image is returned without scaling (for scaling to happen, the pixel values should be in the range 0-255, etc.).

Thanks, I understand. What do you think is a good way to bring the resolution in [0-255]? RandomResizedCrop()? Or something else?

Thank you.

RandomResizedCrop() is used to resize the image (change its dimensions) rather than to change the pixel values.

As long as the pixel values are in the range 0-255 and the PIL image is in one of the modes (L, LA, P, I, F, RGB, YCbCr, RGBA, CMYK, 1), the scaling should work fine. See:

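```python
import numpy
from PIL import Image
import torch
import torchvision.transforms as transforms

# Random RGB image with pixel values in 0-255
imarray = numpy.random.rand(300, 300, 3) * 255
im = Image.fromarray(imarray.astype('uint8')).convert('RGB')

# ToTensor() scales the uint8 pixel values to [0.0, 1.0]
trans = transforms.ToTensor()
im_tens = trans(im)

# Mark every value above 1.0 with a 1; the sum counts them
im_tens = torch.where(im_tens>1.0, 1.0, 0.0)
print(im_tens.sum()) # tensor(0.)
```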

You could use manual scaling in case your pixel values are outside 0-255.
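One possible form of manual scaling is min-max normalization. The sketch below is only an illustration: the helper name `min_max_scale`, the `eps` guard, and the random stand-in for a spectrogram are assumptions, not something from your code.

```python
import torch

def min_max_scale(x: torch.Tensor, eps: float = 1e-8) -> torch.Tensor:
    """Linearly rescale a tensor to [0, 1] using its own min and max.

    Hypothetical helper; eps guards against division by zero for
    constant inputs.
    """
    x = x.float()
    x_min, x_max = x.min(), x.max()
    return (x - x_min) / (x_max - x_min + eps)

# Stand-in for a 288 x 432 spectrogram with values outside 0-255.
spec = torch.randn(1, 288, 432) * 40.0 - 20.0
scaled = min_max_scale(spec)

print(scaled.shape)                              # shape is unchanged
print(scaled.min().item(), scaled.max().item())  # values now lie in [0, 1]
```

This scales each tensor by its own extremes. If the relative loudness between spectrograms matters, a fixed min and max computed over the whole dataset may be a better choice.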

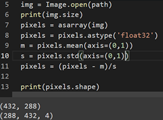

Thanks, Srishti. I was trying a manual transformation. I wrote the following, but I'm not sure why it’s not working.

Could you please have a look?

Thank you.

Hi,

Could you please post your code enclosed with three backticks ```.

Please also provide an example of what transformations you’d like to do with your images.