Hello!

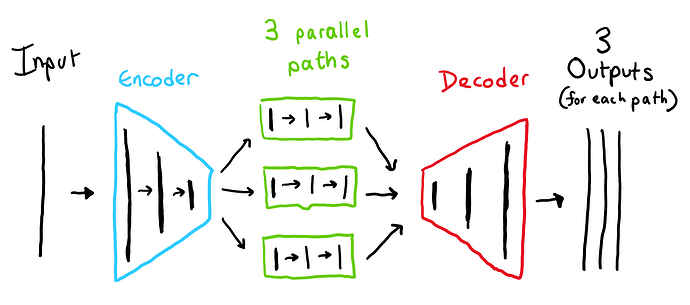

I am a bit of a noob here, so forgive me if this has a simple answer. For context, I successfully implemented a tied-weight VAE a few months ago, and now I am testing out some other ideas involving autoencoders. My current model has three parallel paths through it, in an attempt to create three specialized, separate latent spaces.
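For readers who want a concrete picture, here is a minimal sketch of a three-path autoencoder. It is an assumption about the architecture, not the model in the image: the layer sizes (`in_dim=784`, `latent_dim=8`, hidden width 64) and the class names `Path` and `ThreePathAE` are hypothetical, and each path is assumed to have fully separate (unshared) weights.

```python
import torch
import torch.nn as nn

class Path(nn.Module):
    """One encoder/decoder path with its own weights (hypothetical sizes)."""
    def __init__(self, in_dim: int, latent_dim: int):
        super().__init__()
        self.encoder = nn.Sequential(nn.Linear(in_dim, 64), nn.ReLU(), nn.Linear(64, latent_dim))
        self.decoder = nn.Sequential(nn.Linear(latent_dim, 64), nn.ReLU(), nn.Linear(64, in_dim))

    def forward(self, x):
        z = self.encoder(x)          # this path's own latent space
        return self.decoder(z), z    # reconstruction and latent code

class ThreePathAE(nn.Module):
    """Hypothetical model: three parallel paths reading the same input."""
    def __init__(self, in_dim: int = 784, latent_dim: int = 8, n_paths: int = 3):
        super().__init__()
        # nn.ModuleList registers every path, so model.parameters() includes all of them
        self.paths = nn.ModuleList([Path(in_dim, latent_dim) for _ in range(n_paths)])

    def forward(self, x):
        # Outputs are kept separate so each path can get its own loss
        return [path(x) for path in self.paths]

model = ThreePathAE()
x = torch.randn(16, 784)
outputs = model(x)
# One reconstruction loss per path (MSE is an assumption; a VAE would add a KL term)
local_loss_list = [nn.functional.mse_loss(recon, x) for recon, _ in outputs]
```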

I am still experimenting with how to specialize the latent spaces, but that is not my concern here; this question is specifically about the loss. Right now I keep a list of losses (one per path) and loop through it, running backpropagation for each loss:

```python
for loss in local_loss_list:
    lossy = loss

    # backpropagation
    optimizer.zero_grad()
    lossy.backward(retain_graph=True)

    # one step of the optimizer (using the gradients from backpropagation)
    optimizer.step()

    total_loss = total_loss + lossy
```
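A common alternative to the loop, sketched here under the assumption that the paths share no parameters, is to sum the per-path losses and call `backward()` once. Each path's weights appear only in its own loss, so the gradient of the sum with respect to those weights equals the gradient of that path's loss alone. The three `nn.Linear` layers below are hypothetical stand-ins for the real paths, and SGD with `lr=0.1` is an arbitrary choice.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# Hypothetical stand-in model: three independent "paths" reading the same input
paths = nn.ModuleList([nn.Linear(4, 4) for _ in range(3)])
optimizer = torch.optim.SGD(paths.parameters(), lr=0.1)
x = torch.randn(8, 4)

optimizer.zero_grad()
local_loss_list = [nn.functional.mse_loss(p(x), x) for p in paths]
total_loss = sum(local_loss_list)  # one scalar that depends on all three paths
total_loss.backward()              # single backward pass, so retain_graph is not needed
optimizer.step()                   # single update that uses every path's gradient

# Log a plain float so the computation graph is not kept alive across iterations
print(total_loss.item())
```

Because each path only receives gradient from its own term in the sum, this should give each path the same gradient as the per-loss loop, with one backward pass and one optimizer step per batch.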

I wrote this automatically without much thought, but now I am concerned. Will the loss from each parallel path backpropagate only down its own path? If so, how? It seems to work, but I do not understand how each loss gets associated with the correct path of weights.
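One way to check this empirically, shown as a small sketch with a hypothetical three-layer stand-in for the real paths: during the forward pass, PyTorch's autograd records every operation that produces each loss, and `backward()` only walks the graph reachable from the tensor it is called on. Parameters that never contributed to that loss are never visited, so their `.grad` stays `None`.

```python
import torch
import torch.nn as nn

# Hypothetical three-path model: each path is its own Linear layer
paths = nn.ModuleList([nn.Linear(4, 4) for _ in range(3)])
x = torch.randn(8, 4)
local_loss_list = [nn.functional.mse_loss(p(x), x) for p in paths]

# Backpropagate only the loss of path 1
local_loss_list[1].backward()

for i, p in enumerate(paths):
    # True means no gradient reached this path's weights
    print(i, p.weight.grad is None)
# prints:
# 0 True
# 1 False
# 2 True
```

If the paths did share a layer (for example a common input encoder), that shared layer would receive gradient from every path's loss, because it would sit in every loss's graph.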

Thanks!!!