Hey guys.

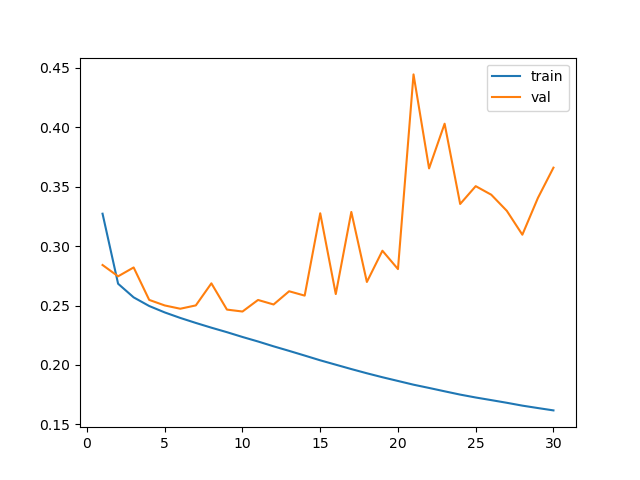

I am implementing a DCNN for an image reconstruction task, and I am seeing a puzzling mismatch between my results on the test set and my training/validation loss curves.

My end results look great. However, there is a huge gap between the training loss and the validation loss, which looks like a clear sign of overfitting. Could anyone suggest what might be causing this?
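One way to quantify the gap between the two curves is to compare the per-epoch losses directly. The sketch below assumes both curves are available as lists of per-epoch mean losses. The function name `generalization_gap` and the example numbers are hypothetical and are not read off the plot.

```python
from typing import List


def generalization_gap(train_losses: List[float], val_losses: List[float]) -> List[float]:
    """Return validation loss minus training loss for each epoch.

    Assumes both lists hold one mean loss per epoch, in the same order.
    A gap that keeps widening while training loss keeps falling is the
    pattern usually read as overfitting.
    """
    if len(train_losses) != len(val_losses):
        raise ValueError("train and validation histories must have the same length")
    return [val - train for train, val in zip(train_losses, val_losses)]


if __name__ == "__main__":
    # Hypothetical loss histories, for illustration only.
    train = [0.50, 0.30, 0.20, 0.10]
    val = [0.55, 0.45, 0.44, 0.46]
    print(generalization_gap(train, val))
```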

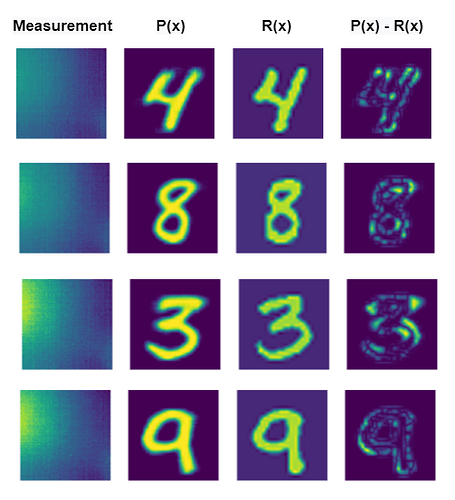

P(X) is the predicted image and R(X) is the target image.
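The post does not say which reconstruction loss is used. Assuming a pixel-wise mean squared error (a common choice for image reconstruction, but an assumption here), the loss between a predicted image P(X) and its target R(X) could be sketched as follows. Images are represented as single-channel nested lists of pixel intensities, and `reconstruction_mse` is a hypothetical name.

```python
from typing import List

Image = List[List[float]]  # H x W grid of pixel intensities (single channel assumed)


def reconstruction_mse(predicted: Image, target: Image) -> float:
    """Mean squared error between P(X) (predicted) and R(X) (target)."""
    if len(predicted) != len(target) or any(
        len(p_row) != len(t_row) for p_row, t_row in zip(predicted, target)
    ):
        raise ValueError("predicted and target images must have the same shape")
    # Squared difference for every pixel pair, flattened into one list.
    squared_errors = [
        (p - t) ** 2
        for p_row, t_row in zip(predicted, target)
        for p, t in zip(p_row, t_row)
    ]
    if not squared_errors:
        raise ValueError("images must not be empty")
    return sum(squared_errors) / len(squared_errors)


if __name__ == "__main__":
    p_x = [[0.0, 0.5], [1.0, 1.0]]  # hypothetical prediction P(X)
    r_x = [[0.0, 1.0], [1.0, 0.0]]  # hypothetical target R(X)
    print(reconstruction_mse(p_x, r_x))  # → 0.3125
```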

Hyperparameter settings:

- epochs = 50
- batch size = 64
- LR (learning rate) = 0.001
- Shuffle training data = True
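These settings can be collected into a single config object. The framework-agnostic sketch below assumes that "Shuffle training data = True" means the training indices are reshuffled every epoch while the validation data keeps a fixed order. `TrainConfig` and `epoch_batches` are hypothetical names, and the post does not say which optimizer the LR applies to.

```python
import random
from dataclasses import dataclass
from typing import Iterator, List, Optional


@dataclass(frozen=True)
class TrainConfig:
    epochs: int = 50
    batch_size: int = 64
    lr: float = 0.001  # learning rate; optimizer not specified in the post
    shuffle_train: bool = True


def epoch_batches(num_samples: int, batch_size: int, shuffle: bool,
                  rng: Optional[random.Random] = None) -> Iterator[List[int]]:
    """Yield lists of sample indices that cover the dataset once (one epoch).

    The last batch is smaller than batch_size when num_samples is not a
    multiple of it.
    """
    indices = list(range(num_samples))
    if shuffle:
        shuffler = rng if rng is not None else random
        shuffler.shuffle(indices)
    for start in range(0, num_samples, batch_size):
        yield indices[start:start + batch_size]


if __name__ == "__main__":
    cfg = TrainConfig()
    rng = random.Random(0)
    for epoch in range(cfg.epochs):
        # Training order changes every epoch; validation order does not.
        train_batches = list(epoch_batches(1000, cfg.batch_size, cfg.shuffle_train, rng))
        val_batches = list(epoch_batches(200, cfg.batch_size, shuffle=False))
    print(len(train_batches), len(val_batches))  # → 16 4
```

With a batch size of 64, a hypothetical 1,000-sample training set gives 16 batches per epoch, the last one holding 40 samples.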