3DTOPO

August 6, 2020, 9:05pm

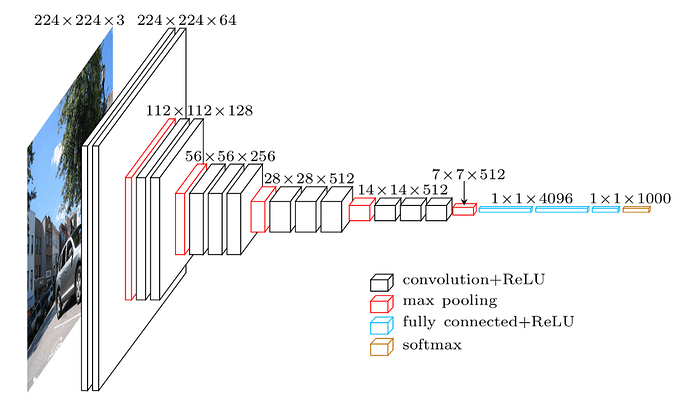

I would like to train VGG at a higher resolution, say 448 x 448 instead of the standard 224 x 224 training images.

First, how is the size for the fully connected layer calculated?

Then I was wondering, is it possible to interpolate the standard 224 weights to 448 so I can start with interpolated weights instead of a blank slate?

I’m just getting started with PyTorch, so any examples would be greatly appreciated.

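The input size of the first fully connected layer is the flattened output of the last pooling layer: its height times its width times its number of channels (H x W x n_C). For a 224 x 224 input this is 7 x 7 x 512 = 25088, because each of VGG's five max-pool layers halves the spatial size (224 / 32 = 7).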
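To make the arithmetic concrete, here is a minimal sketch that computes the flattened size for a square input. The helper name `vgg_fc_in_features` is made up for illustration. It assumes the standard VGG layout: padded 3 x 3 convolutions that keep the spatial size, five 2 x 2 stride-2 max pools, and 512 channels in the last block.

```python
def vgg_fc_in_features(input_size: int, channels: int = 512, num_pools: int = 5) -> int:
    """Flattened size of the last pooling layer's output for a square input.

    Hypothetical helper: assumes every conv preserves H and W and every
    pool halves them, as in the standard VGG configurations.
    """
    side = input_size
    for _ in range(num_pools):
        side //= 2  # each 2x2, stride-2 max pool halves H and W
    return channels * side * side


print(vgg_fc_in_features(224))  # 25088  = 512 * 7 * 7
print(vgg_fc_in_features(448))  # 100352 = 512 * 14 * 14
```

So without any extra pooling, doubling the input side to 448 gives a 14 x 14 x 512 feature map, and the first fully connected layer would need four times as many input features.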

import torch
import torch.nn as nn
from torchvision.models import vgg19


class VGG(nn.Module):
    def __init__(self, num_classes=1000):
        super().__init__()
        # pretrained=False just for debug reasons
        self.model = vgg19(pretrained=False)
        # Replace the classifier; its first Linear layer expects the
        # flattened 512 x 7 x 7 feature map.
        self.model.classifier = nn.Sequential(
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(4096, num_classes),
        )

    def forward(self, x):
        return self.model(x)


model = VGG()
print(model)

# A 448 x 448 input still works here: torchvision's VGG applies
# AdaptiveAvgPool2d((7, 7)) before the classifier.
x = torch.randn((1, 3, 448, 448))
print(model(x).shape)  # torch.Size([1, 1000])
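On the question of interpolating the 224 weights: the convolutional weights do not depend on the input resolution, so they can be reused as they are. torchvision's VGG also pools the feature map to 7 x 7 with `AdaptiveAvgPool2d` before the classifier, so the pretrained classifier weights fit a 448 input without changes. Interpolation would only matter if you removed that pooling and fed the full 14 x 14 map to the first `Linear` layer. Below is a rough sketch of that idea, not an established recipe. The helper `interpolate_fc_weight` and the rescaling by the area ratio are my own assumptions, and small stand-in sizes are used because the full-size weight (4096 x 100352) would hold about 411 million values.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def interpolate_fc_weight(weight, channels, old_hw, new_hw):
    """Resize a Linear weight that consumes a flattened (C, H, W) feature map.

    Hypothetical helper: treats each output unit's weights as a C x H x W
    image and resizes it bilinearly to the new spatial size.
    """
    out_features = weight.shape[0]
    old_h, old_w = old_hw
    new_h, new_w = new_hw
    # The flatten order is (C, H, W), so this reshape recovers the layout.
    w = weight.reshape(out_features, channels, old_h, old_w)
    w = F.interpolate(w, size=(new_h, new_w), mode="bilinear", align_corners=False)
    # Assumption: scale by the area ratio so the sum over the larger map
    # stays in roughly the same range as before.
    w = w * (old_h * old_w) / (new_h * new_w)
    return w.reshape(out_features, channels * new_h * new_w)


# Stand-in sizes: 8 channels and 16 outputs instead of 512 and 4096.
old_fc = nn.Linear(8 * 7 * 7, 16)
new_fc = nn.Linear(8 * 14 * 14, 16)

with torch.no_grad():
    new_fc.weight.copy_(interpolate_fc_weight(old_fc.weight, 8, (7, 7), (14, 14)))
    new_fc.bias.copy_(old_fc.bias)  # the bias does not depend on resolution

print(new_fc.weight.shape)  # torch.Size([16, 1568])
```

Whether this starting point trains better than keeping the adaptive pooling is something you would have to check on your own data.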