Hi there.

I am trying to rebuild a model from a research paper. No errors occur, but the accuracy metric does not change. I have tried many different solutions, and most of them end in errors that I had to trace until I lost hope.

The data consists of graphs created with PyTorch Geometric. I made sure that the data is valid and has no problems. Each graph has 18 nodes, with 18 features per node. The graphs are sparse, and the number of edges differs from one graph to another. The edges are weighted.
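For reference, a single graph as described (18 nodes, 18 features per node, a sparse set of weighted edges) can be laid out in the tensor format PyTorch Geometric uses: `x` of shape `[num_nodes, num_features]`, `edge_index` of shape `[2, num_edges]`, and one weight per edge. The sketch below uses plain PyTorch with random values, not the actual data; the helper name `make_toy_graph` and the 0.8 sparsity threshold are hypothetical choices for illustration.

```python
import torch


def make_toy_graph(num_nodes: int = 18, num_features: int = 18, seed: int = 0):
    """Hypothetical helper: one random sparse, weighted, undirected graph."""
    gen = torch.Generator().manual_seed(seed)
    # Node feature matrix: one row of num_features values per node
    x = torch.randn(num_nodes, num_features, generator=gen)
    # Keep roughly 20% of node pairs as edges (the 0.8 threshold is arbitrary)
    mask = torch.rand(num_nodes, num_nodes, generator=gen) > 0.8
    mask = mask & ~torch.eye(num_nodes).bool()  # no self-loops (GCNConv adds them by default)
    mask = mask | mask.t()                      # undirected: keep both directions
    # Symmetric positive weights so (i, j) and (j, i) share the same weight
    weights = torch.rand(num_nodes, num_nodes, generator=gen)
    weights = (weights + weights.t()) / 2
    # COO connectivity, shape [2, num_edges]: row 0 = source, row 1 = target
    edge_index = mask.nonzero().t().contiguous()
    # One scalar weight per edge, shape [num_edges]
    edge_weight = weights[edge_index[0], edge_index[1]]
    return x, edge_index, edge_weight


x, edge_index, edge_weight = make_toy_graph()
print(x.shape, edge_index.shape, edge_weight.shape)
```

In PyTorch Geometric these tensors would typically be wrapped as `Data(x=x, edge_index=edge_index, edge_attr=edge_weight)` (from `torch_geometric.data`). `GCNConv` takes the edge weights as its third argument, `edge_weight`, with shape `[num_edges]`, so an `edge_attr` stored as `[num_edges, 1]` would need to be flattened with `.view(-1)` first.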

The full code along with the data is available here on Colab for your convenience.

In case you prefer to have the code here:

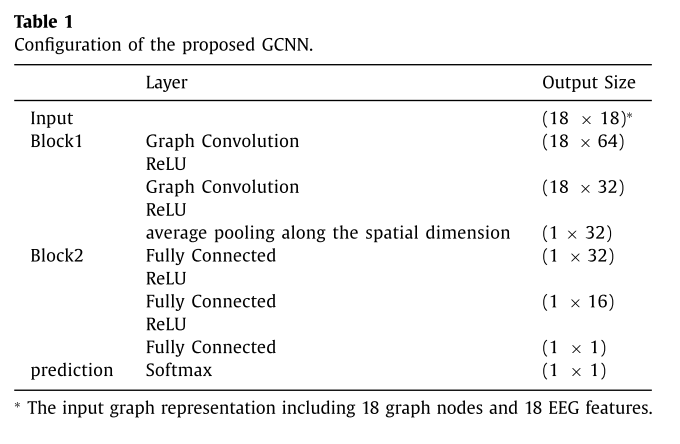

The required model to build

```python
import torch
import torch.nn as nn
from torch_geometric.nn import GCNConv, global_mean_pool


class GCNModel(nn.Module):
    def __init__(self):
        super(GCNModel, self).__init__()
        # Block1
        self.conv1 = GCNConv(in_channels=18, out_channels=64)  # Graph Convolution
        self.relu1 = nn.ReLU()
        self.conv2 = GCNConv(in_channels=64, out_channels=32)  # ReLU Graph Convolution
        self.relu2 = nn.ReLU()
        self.pool = global_mean_pool

        # Block2
        self.fc1 = nn.Linear(32, 32)  # Fully Connected
        self.dropout1 = nn.Dropout(0.3)  # Dropout layer with p=0.3
        self.relu3 = nn.ReLU()
        self.fc2 = nn.Linear(32, 16)  # ReLU Fully Connected
        self.dropout2 = nn.Dropout(0.3)  # Dropout layer with p=0.3
        self.relu4 = nn.ReLU()
        self.fc3 = nn.Linear(16, 1)  # ReLU Fully Connected
        self.softmax = nn.Softmax(dim=1)  # Softmax

    def forward(self, data):
        x, edge_index, edge_attr, batch = data.x, data.edge_index, data.edge_attr, data.batch

        # Block1
        # GCNConv's third positional argument is edge_weight (one weight per edge)
        x = self.conv1(x, edge_index, edge_attr)  # Pass edge_attr to the convolution
        x = self.relu1(x)
        # ReLU Graph Convolution
        x = self.conv2(x, edge_index, edge_attr)  # Pass edge_attr to the convolution
        x = self.relu2(x)
        # Average pooling over the nodes of each graph: one 32-dim vector per graph
        x = self.pool(x, batch)

        # Block2 Fully Connected
        x = self.fc1(x)
        x = self.dropout1(x)  # Apply dropout
        x = self.relu3(x)
        # ReLU Fully Connected
        x = self.fc2(x)
        x = self.dropout2(x)  # Apply dropout
        x = self.relu4(x)
        # ReLU Fully Connected: output shape [num_graphs, 1]
        x = self.fc3(x)
        # Softmax over dim=1
        x = self.softmax(x)
        return x
```
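One thing worth checking in the last two layers: `fc3` produces a single value per graph, so the output has shape `[batch_size, 1]`, and `nn.Softmax(dim=1)` over a dimension of size 1 always returns 1.0. `torch.argmax(..., dim=1)` over that same dimension can then only return 0. The snippet below demonstrates this in plain PyTorch, with random stand-in values for the `fc3` output. If this is what happens here, every graph is predicted as class 0, and the reported accuracy would simply be the fraction of graphs labelled 0, which would be consistent with a constant 0.4471.

```python
import torch
import torch.nn as nn

# Stand-in for the fc3 output: a batch of 5 graphs, one value each -> shape [5, 1]
fc3_out = torch.randn(5, 1)

# Softmax over a dimension of size 1: exp(v) / exp(v) is 1.0 for every row
probs = nn.Softmax(dim=1)(fc3_out)
print(probs.squeeze(1))

# argmax over a single column can only ever return index 0
preds = torch.argmax(probs, dim=1)
print(preds)
```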

The training, validation and testing procedure:

```python
import torch
import torch.nn as nn
import torch.optim as optim
from sklearn.metrics import accuracy_score
from torch_geometric.loader import DataLoader

# data_train, data_val and data_test are defined in the Colab notebook (not shown here)

# Instantiate GCNModel
model = GCNModel()

# Define optimizer and learning rate
optimizer = optim.Adam(model.parameters(), lr=0.001, weight_decay=0.0001)

# Define your loss function
criterion = nn.CrossEntropyLoss()

# Define the data loaders
train_loader = DataLoader(data_train, batch_size=64, shuffle=True)
val_loader = DataLoader(data_val, batch_size=64, shuffle=False)
test_loader = DataLoader(data_test, batch_size=64, shuffle=False)


# Training loop (one epoch)
def train(epoch):
    model.train()
    for data in train_loader:
        optimizer.zero_grad()
        output = model(data)  # shape [num_graphs, 1]
        loss = criterion(output, data.y.view(-1, 1).float())
        loss.backward()
        optimizer.step()


# Function to compute accuracy
def compute_accuracy(loader):
    model.eval()
    predictions = []
    labels = []
    with torch.no_grad():
        for data in loader:
            output = model(data)
            predictions.extend(torch.argmax(output, dim=1).cpu().numpy())  # Use argmax for predicted labels
            labels.extend(data.y.cpu().numpy())
    accuracy = accuracy_score(labels, predictions)
    return accuracy


# Training and validation
num_epochs = 50  # Train for 50 epochs
for epoch in range(1, num_epochs + 1):
    train(epoch)
    train_acc = compute_accuracy(train_loader)  # Compute accuracy on training data
    val_acc = compute_accuracy(val_loader)
    print(f"Epoch [{epoch}/{num_epochs}], Train Acc: {train_acc:.4f}, Val Acc: {val_acc:.4f}")

# Testing
test_acc = compute_accuracy(test_loader)
print(f"Testing Accuracy: {test_acc:.4f}")
```
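The loss may be the other half of the problem. In `train()`, the target `data.y.view(-1, 1).float()` is a float tensor with the same `[batch_size, 1]` shape as the model output, and in that case `nn.CrossEntropyLoss` interprets it as per-class probabilities rather than class indices. With only one class, the log-softmax inside the loss is always 0, so the loss is 0 and no gradient reaches the network. The snippet below reproduces this in plain PyTorch; the `fc3` output and the labels are random or made-up stand-ins, not values from the dataset.

```python
import torch
import torch.nn as nn

# Stand-in for the fc3 output; requires_grad so the gradient can be inspected
fc3_out = torch.randn(6, 1, requires_grad=True)
output = nn.Softmax(dim=1)(fc3_out)  # what GCNModel.forward returns

# Made-up labels, reshaped exactly as in train(): shape [6, 1], dtype float
labels = torch.tensor([0, 1, 1, 0, 1, 0])
target = labels.view(-1, 1).float()

# A float target with the same shape as the input is treated as class probabilities.
# log_softmax over a single class is always 0, so the loss is 0 for any input.
loss = nn.CrossEntropyLoss()(output, target)
loss.backward()

print(loss.item())                      # zero, whatever fc3_out is
print(fc3_out.grad.abs().max().item())  # zero: nothing to learn from
```

If this matches what happens in the notebook, printing `loss.item()` inside `train()` should show zeros. Note also that `nn.CrossEntropyLoss` applies log-softmax itself, so it expects raw scores rather than the output of `nn.Softmax`.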

Output of the training run:

```
Epoch [1/50], Train Acc: 0.4471, Val Acc: 0.4470
Epoch [2/50], Train Acc: 0.4471, Val Acc: 0.4470
...
Epoch [50/50], Train Acc: 0.4471, Val Acc: 0.4470
```
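If the labels are integer class indices, the usual setup for `nn.CrossEntropyLoss` is a last layer that outputs one raw score (logit) per class, no softmax inside the model, and a 1-D target of class indices. Below is a hedged sketch of Block2 rewritten that way. It assumes two classes (`num_classes=2` is an assumption; the actual number of classes is not stated) and feeds random pooled features in place of the GCN output so that it runs with plain PyTorch. The class name `Block2Head` is hypothetical.

```python
import torch
import torch.nn as nn


class Block2Head(nn.Module):
    """Hypothetical stand-in for Block2: 32 pooled features -> one logit per class."""

    def __init__(self, num_classes: int = 2):  # num_classes=2 is an assumption
        super().__init__()
        self.fc1 = nn.Linear(32, 32)
        self.fc2 = nn.Linear(32, 16)
        self.fc3 = nn.Linear(16, num_classes)  # one raw score (logit) per class
        self.dropout = nn.Dropout(0.3)
        self.relu = nn.ReLU()

    def forward(self, pooled):
        x = self.relu(self.dropout(self.fc1(pooled)))
        x = self.relu(self.dropout(self.fc2(x)))
        return self.fc3(x)  # no softmax: CrossEntropyLoss applies log-softmax itself


torch.manual_seed(0)
head = Block2Head()
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(head.parameters(), lr=0.001, weight_decay=0.0001)

# Random stand-ins: pooled GCN features and 1-D integer labels for 64 graphs
pooled = torch.randn(64, 32)
labels = torch.randint(0, 2, (64,))

head.train()
optimizer.zero_grad()
logits = head(pooled)             # shape [64, 2]
loss = criterion(logits, labels)  # target: class indices, shape [64], dtype long
loss.backward()
optimizer.step()

preds = logits.argmax(dim=1)      # predictions can now be 0 or 1
print(f"loss={loss.item():.4f}")
```

Carried over to `GCNModel`, the corresponding changes would be `self.fc3 = nn.Linear(16, num_classes)`, dropping `self.softmax` from `forward`, and `loss = criterion(output, data.y.view(-1).long())` in `train()`; `compute_accuracy` should then work as it is, since `argmax` over `dim=1` picks between real classes. For a binary task, another common option is to keep a single output and use `nn.BCEWithLogitsLoss` with the float `[batch_size, 1]` target, predicting class 1 when the output is above 0.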

Thank you for your help.