Hi,

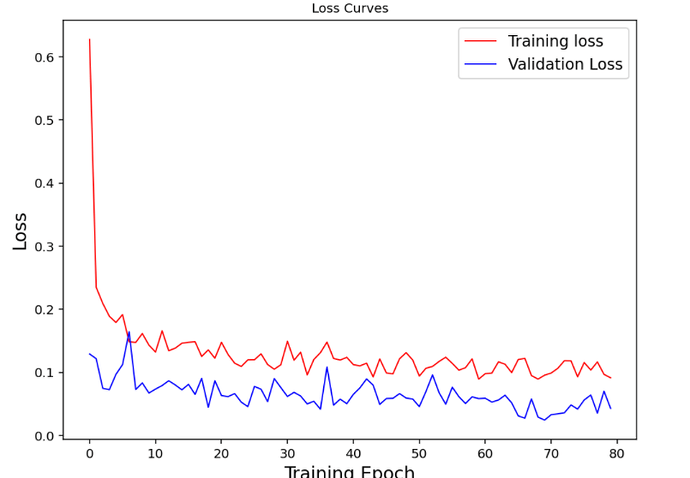

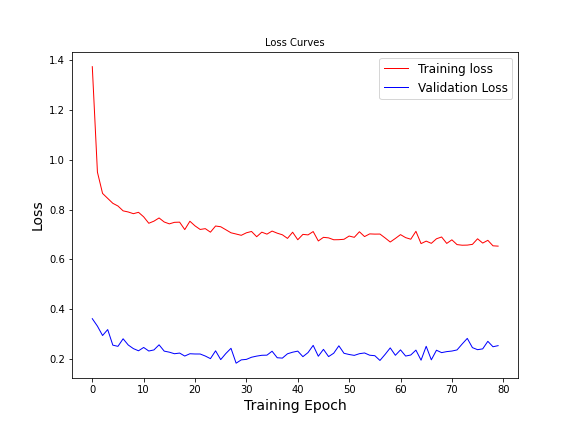

Curves for 39 output classes:

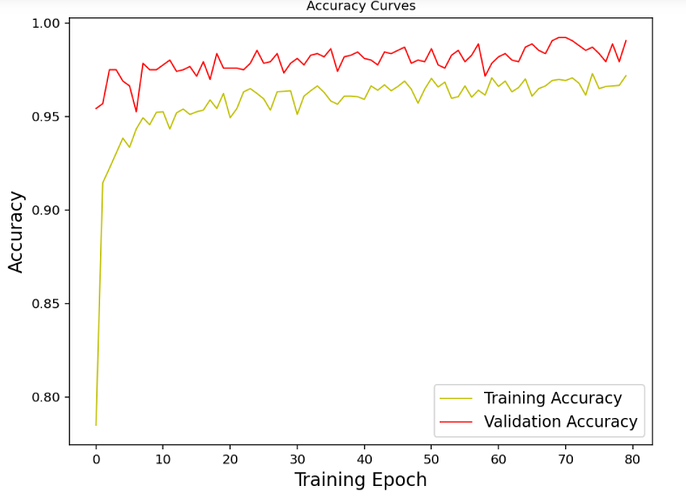

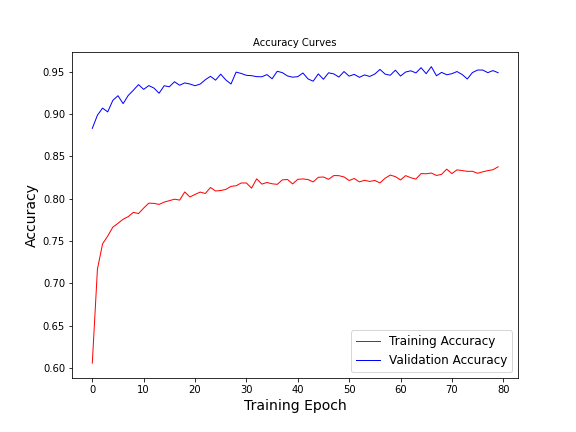

Curves for 5 output classes:

The curves above show that the model with 5 output classes achieves better training/validation loss and accuracy than the model with 39 output classes. There is a considerable gap between all the training and validation curves. In addition, the validation results are higher than the training results. What exactly does this indicate? I'd like to know whether these curves represent a good fit and how to find the convergence point.
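To make concrete what I mean by a convergence point, here is a minimal sketch. It assumes the convergence point is the epoch with the lowest validation loss, identified once that loss has stopped improving for a few epochs (the usual early-stopping rule). The function name `find_convergence_epoch` and the `patience` and `min_delta` defaults are hypothetical choices for illustration, not part of my training code.

```python
from typing import Optional, Sequence


def find_convergence_epoch(
    val_losses: Sequence[float],
    patience: int = 3,        # assumed: epochs to wait without improvement
    min_delta: float = 1e-3,  # assumed: smallest drop that counts as improvement
) -> Optional[int]:
    """Return the 0-based epoch with the best validation loss once the loss
    has failed to improve by at least `min_delta` for `patience` consecutive
    epochs. Return None if that never happens (still improving)."""
    best_loss = float("inf")
    best_epoch: Optional[int] = None
    epochs_without_improvement = 0

    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Meaningful improvement: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch

    return None
```

With a made-up loss history such as `[1.0, 0.8, 0.7, 0.69, 0.695, 0.70, 0.71]` (illustrative numbers, not from my runs), the function returns epoch 3; with a history that is still decreasing at the last epoch, it returns `None`, which would suggest training has not converged yet.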

Even after increasing the number of epochs, the results for the 39-class model do not improve much. I’d appreciate it if someone could help me improve the results further through hyperparameter tuning or other popular techniques. I’m aiming for at least 98% accuracy on the 39-class model.

The parameters used to train the model are listed below:

- The model uses a pre-trained VGG16 architecture with 39 output classes (I'm open to switching to another architecture if it produces better results).

- Learning rate = 0.001, optimizer = Adam.

- Batch size = 64.

- Data transforms performed: Rotation=30, Resize, Horizontal flip=0.5, Normalize, ToTensor.

- Each class has between 1,000 and 1,200 images. The dataset is split 60:20:20 (Train/Val/Test).

- Dropout=0.5.
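For scale, the dataset figures above can be turned into approximate split sizes and steps per epoch. This is a rough sketch that assumes every class sits at either the lower or the upper bound of the stated range and that the last partial batch is kept. The helper names `split_sizes` and `steps_per_epoch` are made up for illustration.

```python
import math

NUM_CLASSES = 39
BATCH_SIZE = 64
# Split expressed in whole percentages to keep the arithmetic exact.
SPLIT_PERCENT = {"train": 60, "val": 20, "test": 20}


def split_sizes(images_per_class: int) -> dict:
    """Approximate image count per split, assuming every class has
    exactly `images_per_class` images."""
    total = NUM_CLASSES * images_per_class
    return {name: total * pct // 100 for name, pct in SPLIT_PERCENT.items()}


def steps_per_epoch(train_size: int) -> int:
    """Number of batches per epoch, keeping the last partial batch."""
    return math.ceil(train_size / BATCH_SIZE)


for images_per_class in (1000, 1200):  # bounds of the stated range
    sizes = split_sizes(images_per_class)
    print(images_per_class, sizes, steps_per_epoch(sizes["train"]))
```

That works out to roughly 23,400 to 28,080 training images (600 to 720 per class) and about 366 to 439 optimizer steps per epoch.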

Please let me know if any additional details are needed to help improve the model's performance.

TIA.