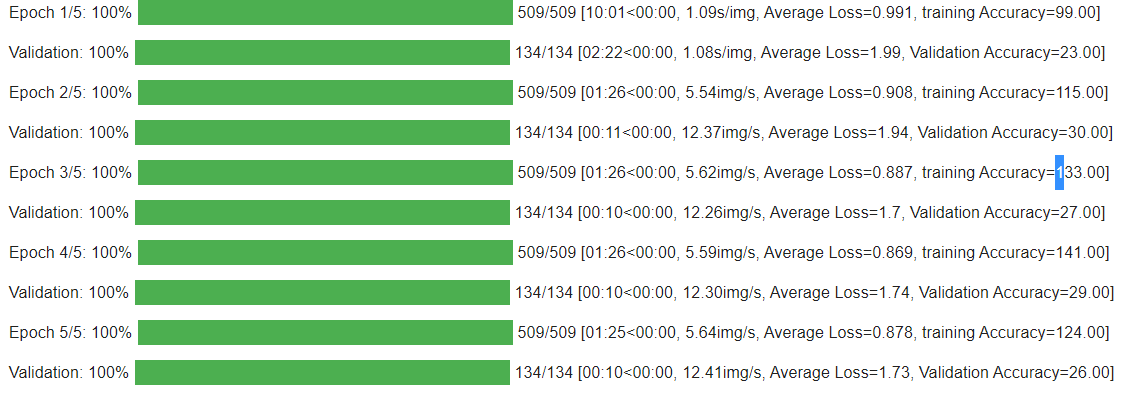

This is my code for the training and validation loop. Please help me with this issue.

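    import torch
    import torch.nn as nn
    from tqdm import tqdm

    # get_pretrained_model, trainset/valset and trainLoader/validLoader are defined elsewhere.
    model = get_pretrained_model('inceptionv3')
    #print(model.forward)

    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    model = model.to(device)

    criterion = torch.nn.CrossEntropyLoss()
    n_class = 5
    optimizer = torch.optim.Adam(model.parameters())
    # With n_class = 5 this selects 'min', which matches stepping the scheduler on the validation loss.
    scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, 'min' if n_class > 1 else 'max', patience=2)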

    #training and validation
    totEpochs = 5
    for ep in range(totEpochs):
        # Training loop
        epoch_loss = 0
        train_acc = 0
        valid_acc = 0
        with tqdm(total=len(trainset), desc=f'Epoch {ep + 1}/{totEpochs}', unit='img') as pbar:
            for batch in trainLoader:
                img = batch['image'].to(device)
                label = batch['label'].to(device)
                #depth = batch['depth']
                net_pred = model(img)#,depth.unsqueeze(1))
                # net_pred[0] selects the main logits, assuming the model returns
                # (logits, aux_logits) as torchvision's Inception v3 does in training mode.
                loss = criterion(net_pred[0], label)
                # CrossEntropyLoss returns the batch mean, so weight it by the batch size
                epoch_loss += loss.item() * img.size(0)
                pbar.set_postfix(**{'Loss (Batch)': loss.item()})
                optimizer.zero_grad()
                loss.backward()
                nn.utils.clip_grad_value_(model.parameters(), 0.1)
                optimizer.step()
                # Calculate training accuracy
                pred = torch.argmax(net_pred[0], dim=1)
                correct_tensor = pred.eq(label)
                # Need to convert correct tensor from bool to float to average
                accuracy = torch.mean(correct_tensor.float())
                # Multiply average accuracy times the number of examples in batch
                train_acc += accuracy.item() * img.size(0)
                pbar.update(img.shape[0])
            # train_acc holds the number of correct predictions; convert it to a percentage
            pbar.set_postfix(**{'Average Loss': epoch_loss / len(trainset), 'training Accuracy': f'{100 * train_acc / len(trainset):.2f}%'})
            #pbar.set_postfix(**{'training Accuracy': f'{train_acc:.2f}%'})

        # validation loop
        val_loss = 0
        with tqdm(total=len(valset), desc='Validation', unit='img') as pbar:
            for batch in validLoader:
                with torch.no_grad():
                    img = batch['image'].to(device)
                    label = batch['label'].to(device)
                    #depth = batch['depth']
                    net_pred = model(img)#,depth.unsqueeze(1))
                    loss = criterion(net_pred[0], label)
                    # CrossEntropyLoss returns the batch mean, so weight it by the batch size
                    val_loss += loss.item() * img.size(0)
                    pbar.set_postfix(**{'Loss (validation)': loss.item()})
                    # Calculate validation accuracy
                    pred = torch.argmax(net_pred[0], dim=1)
                    correct_tensor = pred.eq(label)
                    accuracy = torch.mean(correct_tensor.float())
                    # Multiply average accuracy times the number of examples
                    valid_acc += accuracy.item() * img.size(0)
                    pbar.update(img.shape[0])
            scheduler.step(val_loss / len(valset))
            # valid_acc holds the number of correct predictions; convert it to a percentage
            pbar.set_postfix(**{'Average Loss': val_loss / len(valset), 'Validation Accuracy': f'{100 * valid_acc / len(valset):.2f}%'})
            #pbar.set_postfix(**{'Validation Accuracy': f'{valid_acc:.2f}%'})
        #print(f'\t\tTraining Accuracy: {train_acc:.2f}%\t Validation Accuracy: {valid_acc:.2f}%')
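The comments in both loops describe weighting each batch's average by the number of examples in it. As a minimal sketch of that bookkeeping in plain Python (no PyTorch needed), something like the following would turn the running sums into an average loss and a percentage accuracy. The `RunningMetrics` class and its method names are hypothetical and only for illustration; they are not part of the code above or of any library.

```python
class RunningMetrics:
    """Accumulates loss and accuracy over batches (hypothetical helper)."""

    def __init__(self):
        self.loss_sum = 0.0  # sum of per-sample losses seen so far
        self.correct = 0     # number of correct predictions seen so far
        self.seen = 0        # number of samples seen so far

    def update(self, mean_loss, preds, labels):
        # mean_loss is a batch average, so weight it by the batch size
        # before adding it to the running sum.
        n = len(labels)
        self.loss_sum += mean_loss * n
        self.correct += sum(int(p == y) for p, y in zip(preds, labels))
        self.seen += n

    @property
    def avg_loss(self):
        # Per-sample average loss over everything seen so far.
        return self.loss_sum / self.seen if self.seen else 0.0

    @property
    def accuracy_pct(self):
        # Correct predictions as a percentage of samples seen so far.
        return 100.0 * self.correct / self.seen if self.seen else 0.0


metrics = RunningMetrics()
metrics.update(0.5, [0, 1, 2, 2], [0, 1, 2, 3])  # batch of 4, 3 correct
metrics.update(1.0, [4, 0], [4, 1])              # smaller last batch of 2, 1 correct
print(f'Average Loss: {metrics.avg_loss:.4f}, Accuracy: {metrics.accuracy_pct:.2f}%')
```

Dividing by the number of samples seen, rather than printing the raw sum with a `%` sign, keeps the reported accuracy between 0 and 100 even when the last batch is smaller than the others.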