Hi,

I’m using a semi-supervised GAN combined with WGAN-GP, trained on the MPIE database to generate faces. However, I found that the Wasserstein loss of the generator keeps rising. Is this divergence?

What could cause this?

Hi,

Could you specify what you mean by “Wasserstein loss of the generator”? And how can the generator loss keep increasing while the discriminator loss does not change? Could you also describe your training procedure?

From my experience, and from my understanding of what you call the “Wasserstein loss of the generator”, what matters for WGAN is the difference between the “Wasserstein loss of the generator” and the “Wasserstein loss of the discriminator”. Considered separately, those two losses do not mean much.
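To illustrate why the generator term alone says little, here is a minimal sketch in plain Python. It assumes the usual WGAN formulation (critic loss E[D(fake)] - E[D(real)], generator loss -E[D(fake)]) and leaves out the gradient penalty and the supervised ID/pose terms. The function names and the toy scores are made up for the example and are not taken from the poster's code.

```python
from statistics import mean

def critic_loss(real_scores, fake_scores):
    # Critic minimises E[D(fake)] - E[D(real)] (gradient penalty omitted).
    return mean(fake_scores) - mean(real_scores)

def generator_loss(fake_scores):
    # Generator minimises -E[D(fake)].
    return -mean(fake_scores)

def wasserstein_estimate(real_scores, fake_scores):
    # The quantity that approximates the distance: E[D(real)] - E[D(fake)].
    return mean(real_scores) - mean(fake_scores)

# Hypothetical critic outputs for a batch of real and generated images.
real = [2.0, 3.0, 4.0]
fake = [0.0, 1.0, 2.0]

# Same critic, but with every output shifted by a constant offset.
shift = -10.0
real_shifted = [s + shift for s in real]
fake_shifted = [s + shift for s in fake]

print(generator_loss(fake), generator_loss(fake_shifted))
print(wasserstein_estimate(real, fake),
      wasserstein_estimate(real_shifted, fake_shifted))
```

Shifting all critic outputs by a constant changes the generator loss but leaves the difference untouched, so a drifting G loss can simply reflect a drifting critic offset rather than a growing distance between real and fake images.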

One thing in your plots that really surprises me is that, while training is clearly not over for the generator, the discriminator loss seems very stable at the end of your plot.

Sorry to reply so late.

I’m training the discriminator with supervised ID/pose labels plus WGAN-GP, and its loss seems to decrease stably. Because the generator changes slowly, I update G more frequently than D: 4 optimization steps for G for every 1 step for D.
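For readers comparing schedules, the update pattern described above can be sketched as follows. This is only an illustration: `update_schedule` is a hypothetical helper, and starting each cycle with the D step is an assumption, since the order is not stated. For reference, the default in the WGAN-GP paper is the opposite ratio, 5 critic steps per generator step.

```python
def update_schedule(total_steps, g_steps=4, d_steps=1):
    """Yield 'D' or 'G' for each optimisation step in a repeating cycle."""
    # Assumption: each cycle runs the D steps first, then the G steps.
    cycle = ["D"] * d_steps + ["G"] * g_steps
    for step in range(total_steps):
        yield cycle[step % len(cycle)]

# Schedule described in this post: 1 D step, then 4 G steps.
described = list(update_schedule(10))

# WGAN-GP paper default: 5 critic steps per generator step.
paper_default = list(update_schedule(12, g_steps=1, d_steps=5))

print("".join(described))
print("".join(paper_default))
```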

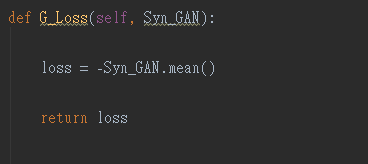

Following the training strategy above, the synthesized images seem okay, but the generator loss (from WGAN-GP, the code shown below) keeps increasing. As I understand it, the Wasserstein distance should measure the distance between real and fake images, so a G loss that keeps increasing confuses me.

Does this mean anything special? If so, what is the solution?

Thanks for all your help!