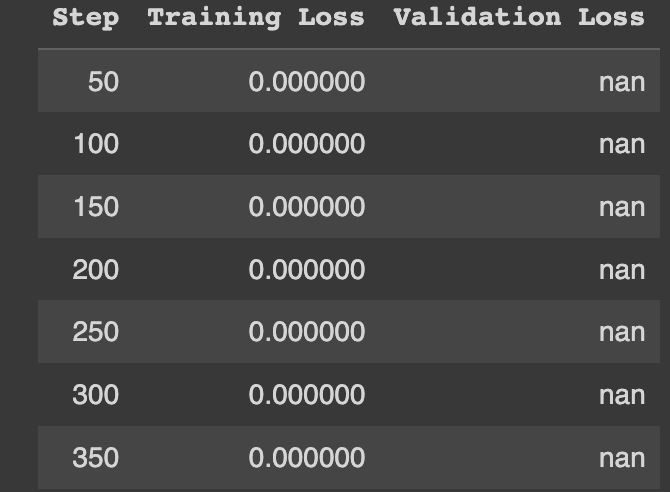

Hello, I am training a model, but the training loss is zero and the validation loss is NaN. This only started happening when I switched the pretrained model from T5 to mT5.

I don't know what's wrong, because the same setup was working with T5.
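A training loss of exactly 0.0 next to a NaN validation loss is worth checking numerically. With the Hugging Face `Trainer`, `logging_nan_inf_filter` defaults to `True`, which filters NaN/inf step losses out of the logged average, so if every step is NaN the logged training loss may stay at 0.0. The minimal sketch below scans a list shaped like `trainer.state.log_history` (dicts with keys such as `"loss"`, `"eval_loss"` and `"step"`) for the first non-finite or zero loss. The helper name `first_bad_loss` and the sample history are hypothetical illustrations, not output from this run.

```python
import math
from typing import Any, Dict, List, Optional, Tuple


def first_bad_loss(
    log_history: List[Dict[str, Any]],
    keys: Tuple[str, ...] = ("loss", "eval_loss"),
) -> Optional[Tuple[int, str, float]]:
    """Return (step, key, value) for the first logged loss that is NaN, inf, or exactly 0.0.

    Returns None if every logged loss is finite and non-zero.
    """
    for entry in log_history:
        for key in keys:
            if key not in entry:
                continue  # e.g. a training log entry has no "eval_loss"
            value = float(entry[key])
            if not math.isfinite(value) or value == 0.0:
                return int(entry.get("step", -1)), key, value
    return None


if __name__ == "__main__":
    # Hypothetical entries shaped like trainer.state.log_history (values made up).
    history = [
        {"loss": 2.31, "step": 100},
        {"eval_loss": 1.98, "step": 100},
        {"loss": 0.0, "step": 200},
        {"eval_loss": float("nan"), "step": 200},
    ]
    print(first_bad_loss(history))  # (200, 'loss', 0.0)
```

In the real script this would be called as `first_bad_loss(trainer.state.log_history)`. Setting `logging_nan_inf_filter=False` in the training arguments should make NaN training losses appear in the logs directly instead of being filtered out.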

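```python
from transformers import Seq2SeqTrainer, Seq2SeqTrainingArguments

# model_dir, batch_size, model_init, tokenized_dataset, data_collator and
# tokenizer are defined earlier in the script (not shown).
args = Seq2SeqTrainingArguments(
    model_dir,
    evaluation_strategy="steps",
    eval_steps=100,              # evaluate every 100 steps
    logging_strategy="steps",
    logging_steps=100,           # log the training loss every 100 steps
    save_strategy="steps",
    save_steps=200,              # save a checkpoint every 200 steps
    learning_rate=4e-5,
    per_device_train_batch_size=batch_size,
    per_device_eval_batch_size=batch_size,
    weight_decay=0.01,
    save_total_limit=3,          # keep at most 3 checkpoints on disk
    num_train_epochs=6,
    predict_with_generate=True,  # use generate() when evaluating
    fp16=True,                   # mixed-precision (fp16) training
    load_best_model_at_end=True,
)

trainer = Seq2SeqTrainer(
    model_init=model_init,       # function that returns a fresh pretrained model
    args=args,
    train_dataset=tokenized_dataset["train"],
    eval_dataset=tokenized_dataset["validation"],
    data_collator=data_collator,
    tokenizer=tokenizer,
)
```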
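The arguments above set `fp16=True`. A commonly reported cause of NaN losses with mT5 (but not with the original T5 checkpoints) is that some activations exceed the half-precision range; the mT5 checkpoints are commonly reported to have been pretrained in bfloat16, which has a much wider range than fp16. The pure-Python sketch below uses the `struct` module's `'e'` (IEEE 754 binary16) format to show the fp16 limit of 65504 and how an overflowed value (`inf`) turns into NaN. `fits_in_fp16` is a hypothetical helper for illustration only; it does not use PyTorch or transformers.

```python
import math
import struct

# Largest finite IEEE 754 binary16 (fp16) value.
FP16_MAX = 65504.0


def fits_in_fp16(x: float) -> bool:
    """Return True if x is representable as a finite fp16 number."""
    try:
        packed = struct.pack("<e", x)  # 'e' = IEEE 754 half precision
    except OverflowError:
        # struct raises for finite values beyond the fp16 range; fp16 ops on a
        # GPU would silently produce inf instead.
        return False
    return math.isfinite(struct.unpack("<e", packed)[0])


if __name__ == "__main__":
    for value in (1.0, FP16_MAX, 70000.0):
        print(value, fits_in_fp16(value))
    # Once an activation has overflowed to inf, a numerically stable softmax
    # computes x - max(x) = inf - inf, which is NaN and then propagates to the loss.
    overflowed = float("inf")
    print(math.isnan(overflowed - overflowed))
```

If fp16 overflow is the cause here, the usual workarounds are `fp16=False` (full fp32 training) or, on hardware that supports it, `bf16=True` in `Seq2SeqTrainingArguments`. Both are existing arguments, but whether either fixes this particular run is untested.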