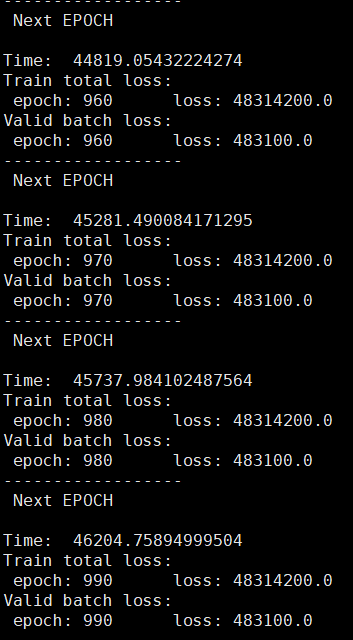

I’m trying to train an embedding model, but the training loss and validation loss do not go down; they stay the same for the whole 1000 epochs of training. I’m using lr = 0.001 and optimizer = SGD.

Can you post the code here?

Brother, how would I upload it? It’s huge and spread across multiple teams. I don’t have access to some of the modules.

I faced the same problem too. The way I went about debugging it was:

Check your code’s output after each iteration. If the output is the same every time, then no learning is happening.

Maybe some of your model’s parameters that were not supposed to be detached got detached.

Check the code where you pass the model parameters to the optimizer, and the training loop where ‘optimizer.step()’ is called. Also check whether the parameters actually change after every step.
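Here is a rough sketch of that check in PyTorch, in case it helps. The model, sizes, and data are made-up stand-ins (not the original poster's code); the idea is just to snapshot the parameters, run one step, and see which ones got a gradient and which ones moved.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in model: an embedding followed by a linear classifier.
model = nn.Sequential(nn.Embedding(10, 4), nn.Flatten(), nn.Linear(4 * 3, 2))
optimizer = torch.optim.SGD(model.parameters(), lr=0.001)
loss_fn = nn.CrossEntropyLoss()

x = torch.randint(0, 10, (8, 3))  # batch of 8 sequences, 3 token ids each
y = torch.randint(0, 2, (8,))     # random class labels, for illustration only

# Snapshot every parameter before the update.
before = {name: p.detach().clone() for name, p in model.named_parameters()}

optimizer.zero_grad()
loss = loss_fn(model(x), y)
loss.backward()
optimizer.step()

# Report which parameters received a gradient and which actually moved.
report = {}
for name, p in model.named_parameters():
    has_grad = p.grad is not None
    changed = not torch.equal(before[name], p.detach())
    report[name] = (has_grad, changed)
    print(f"{name}: has_grad={has_grad}, changed={changed}")
```

If a parameter shows has_grad=False, it is probably cut off from the loss (detached, or never passed through the forward pass). If it has a gradient but never changes, check that it was actually given to the optimizer.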

Hi, did you solve the problem? I have run into the same problem as you!

@111179 Yeah, I was detaching the tensors when moving them from GPU to CPU before the model started learning. In that case the optimizer and the learning rate don’t affect anything. What in particular is your model doing?
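For anyone else hitting this, a small sketch of how a stray detach stops learning. The layer names and sizes here are invented for illustration and are not taken from anyone's actual code.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical two-part model: an embedding and a classifier head.
embedding = nn.Embedding(10, 4)
head = nn.Linear(4, 2)
ids = torch.tensor([1, 2, 3])
target = torch.tensor([0, 1, 0])
loss_fn = nn.CrossEntropyLoss()

# Broken: .detach() cuts the autograd graph, so no gradient reaches the embedding.
features = embedding(ids).detach().cpu()
loss_fn(head(features), target).backward()
broken_grad = embedding.weight.grad  # stays None

# Fixed: keep the tensor attached to the graph.
head.zero_grad()
features = embedding(ids)
loss_fn(head(features), target).backward()
fixed_grad = embedding.weight.grad   # now a tensor

print("gradient with detach:", broken_grad)
print("gradient without detach present:", fixed_grad is not None)
```

Note that moving a tensor with .cpu() or .to(device) on its own keeps the graph intact; it is the .detach() call (or things like .item() and .numpy()) that breaks it.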

The training loss and validation loss don’t change. I just want to classify the car evaluation data.

Use dropout between layers. After passing the model parameters to the optimizer, call optimizer.step() in each iteration to update them (the parameters should change after each iteration).
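Something like this minimal loop, as a sketch only. The 6 input features, 4 classes, layer sizes, learning rate, and synthetic data are all assumptions made for the example, not the real car evaluation setup.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes: 6 input features, 4 output classes.
model = nn.Sequential(
    nn.Linear(6, 16), nn.ReLU(), nn.Dropout(p=0.2), nn.Linear(16, 4)
)
# The parameters are handed to the optimizer once, up front.
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.CrossEntropyLoss()

# Synthetic data with a learnable rule: the label is the index of the
# largest of the first four features.
x = torch.randn(64, 6)
y = x[:, :4].argmax(dim=1)

model.train()  # enables dropout during training
losses = []
for epoch in range(200):
    optimizer.zero_grad()        # clear gradients from the previous step
    loss = loss_fn(model(x), y)  # forward pass
    loss.backward()              # compute gradients
    optimizer.step()             # update the parameters
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

If the loss printed here goes down but yours stays flat, compare the order of zero_grad(), backward(), and step() with your loop. Remember to call model.eval() before computing validation loss so dropout is turned off.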

Yep, I have already used optimizer.step(). Can you look at my code? Thank you.