Hey everyone, I ran into a pretty strange situation during training. It was unexpected, and I can't explain it.

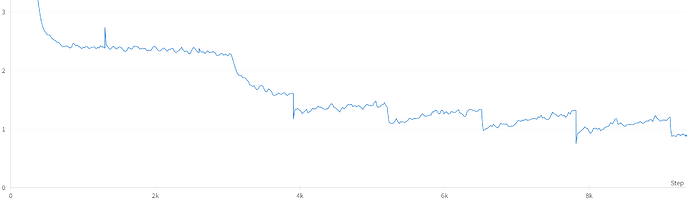

In my experiments, I’m training my model with a simple nn.CrossEntropyLoss criterion and the AdamW optimizer. At the beginning of training, everything looks normal. In subsequent epochs, however, the training loss curve looks strange, as shown in the plot above.

There are two strange characteristics:

1. The loss value slowly increases within an epoch.

2. The loss value suddenly drops at the boundary between two epochs.

BTW, the loss values in the curve are smoothed over the most recent 50 steps (within an epoch), so I don't think the smoothing causes this. My dataloader also uses shuffle=True during training.
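To make the smoothing concrete, here is a minimal sketch of a sliding-window mean over the most recent 50 steps that is reset at every epoch boundary. The `WindowedMean` class and the fake loss values are hypothetical and only illustrate the idea; the actual metrics code in my project may differ.

```python
from collections import deque


class WindowedMean:
    """Mean of the most recent `window` values (hypothetical helper)."""

    def __init__(self, window=50):
        # deque with maxlen drops the oldest value automatically
        self.values = deque(maxlen=window)

    def update(self, value):
        self.values.append(float(value))

    def reset(self):
        # Called at the start of each epoch so the window never spans two epochs
        self.values.clear()

    @property
    def mean(self):
        if not self.values:
            return float("nan")
        return sum(self.values) / len(self.values)


smoother = WindowedMean(window=50)
for epoch in range(2):
    smoother.reset()
    for step in range(100):
        fake_loss = 1.0 / (1 + epoch)  # stand-in for loss.item()
        smoother.update(fake_loss)
```

With a per-epoch reset like this, the first logged values of each epoch average fewer than 50 steps, so they are noisier than later ones.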

I checked earlier discussions of similar issues, such as "Strange behavior with SGD momentum training" on the PyTorch Forums, but the suggestions there didn’t help in my situation.

Thanks.

P.S.

My project has too much code to post all of it here, so I'm only giving the code for training one epoch. It can't be run directly and is meant only as a pseudo-code reference.

```python
model.train()
metrics = Metrics()  # save metrics
optimizer.zero_grad()  # init optim
last_timestamp = TPS.timestamp()

for i, batch in enumerate(dataloader):
    id_infos, last_frame_detections = batch["id_infos_tensor"], batch["last_frame_detections_tensor"]
    last_frame_id_predicts, last_frame_masks = model(
        id_infos, last_frame_detections
    )
    loss = criterion(
        predicts=last_frame_id_predicts,
        labels=last_frame_detections["id_label"],
        predict_masks=last_frame_masks
    )
    metrics["id_loss"].update(loss.item())  # log the unscaled loss
    loss /= config["ACCUMULATE_STEPS"]  # scale for gradient accumulation
    loss.backward()
    if (i + 1) % config["ACCUMULATE_STEPS"] == 0:
        optimizer.step()
        optimizer.zero_grad()
```
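The update condition `(i + 1) % config["ACCUMULATE_STEPS"] == 0` can be checked in isolation. The sketch below uses a hypothetical helper, `accumulation_schedule`, and made-up batch counts to list the batch indices after which `optimizer.step()` fires and how many trailing batches never reach an update. Assuming the loop above runs once per epoch, the gradients from those trailing batches would be cleared by the `optimizer.zero_grad()` call at the start of the next epoch.

```python
def accumulation_schedule(num_batches, accumulate_steps):
    """Return (batch indices that trigger optimizer.step(), leftover batch count).

    Hypothetical helper that mirrors the condition in the training loop:
    (i + 1) % accumulate_steps == 0
    """
    step_indices = [
        i for i in range(num_batches) if (i + 1) % accumulate_steps == 0
    ]
    # Batches after the last full group accumulate gradients but never step
    leftover = num_batches % accumulate_steps
    return step_indices, leftover


# Made-up numbers for illustration: 10 batches, accumulate over 4
steps, leftover = accumulation_schedule(num_batches=10, accumulate_steps=4)
print(steps, leftover)  # [3, 7] 2
```

If the number of batches per epoch is a multiple of `ACCUMULATE_STEPS`, the leftover count is 0 and every batch contributes to an update.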