Hi all,

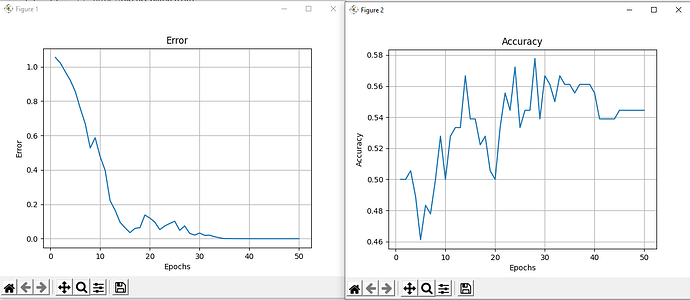

I’m trying to get started with PyTorch by building my own CNN to classify dogs and cats. I have 300 photos of each class, split 70/30 into training and validation sets. I used OpenCV to resize the RGB images to 50x50, labelled them with one-hot vectors, and saved the images and labels as tensors for easy access. The problem is that my training loss decreases every epoch, but my validation accuracy does not improve. The classification problem is simple and the model is fairly standard, and I can’t see anything wrong with my setup, so I don’t understand why the accuracy is stuck. Does anyone have an idea why this is happening?
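A minimal sketch of the labelling and 70/30 split described above, using NumPy placeholders rather than real photos. The helper names (`one_hot`, `to_channels_first`, `split_train_val`), the class encoding (0 = cat, 1 = dog), and the seeded shuffle before splitting are all assumptions made for illustration; the post does not say how the split was actually done.

```python
import numpy as np

def one_hot(class_ids, num_classes=2):
    """Return an (N, num_classes) float32 array with a 1.0 in each row's class column."""
    return np.eye(num_classes, dtype=np.float32)[class_ids]

def to_channels_first(images):
    """Convert (N, H, W, C) arrays, the layout OpenCV produces, to (N, C, H, W)."""
    return np.transpose(images, (0, 3, 1, 2))

def split_train_val(images, labels, train_fraction=0.7, seed=0):
    """Shuffle images and labels together, then split them by train_fraction."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(images))
    n_train = int(round(train_fraction * len(images)))
    train_idx, val_idx = order[:n_train], order[n_train:]
    return (images[train_idx], labels[train_idx]), (images[val_idx], labels[val_idx])

# Placeholder data standing in for 300 cat and 300 dog photos already resized to 50x50 RGB.
images = np.zeros((600, 50, 50, 3), dtype=np.float32)
class_ids = np.array([0] * 300 + [1] * 300)  # assumed encoding: 0 = cat, 1 = dog

x = to_channels_first(images)
y = one_hot(class_ids)
(train_x, train_y), (val_x, val_y) = split_train_val(x, y)
print(train_x.shape, train_y.shape, val_x.shape, val_y.shape)
```

Shuffling before the split matters here because the placeholder data is ordered by class; without it, the validation set would contain only one class.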

Thanks

from torchvision import models
from tqdm import tqdm
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
import numpy as np
import matplotlib.pyplot as plt
import math
import time
import cv2

class Model(nn.Module):
    def __init__(self, channel=1, imageSize=128):
        super().__init__()

        # Create a 4 layer convolutional network with batch normalisation
        self.imageSize = imageSize
        self.channel = channel
        self.conv1 = nn.Conv2d(self.channel, 24, 5, stride=1, padding=2)
        self.conv2 = nn.Conv2d(24, 28, 5, stride=1, padding=2)
        self.conv3 = nn.Conv2d(28, 32, 5, stride=1, padding=2)
        self.conv4 = nn.Conv2d(32, 36, 5, stride=1, padding=2)
        self.bn1 = nn.BatchNorm2d(24)
        self.bn2 = nn.BatchNorm2d(28)
        self.bn3 = nn.BatchNorm2d(32)
        self.bn4 = nn.BatchNorm2d(36)

        # Use dummy data to find the output shape of the final conv layer
        x = torch.randn(self.channel, self.imageSize, self.imageSize).view(-1, self.channel, self.imageSize, self.imageSize)
        self._to_linear = None
        self.convs(x)

        # 3 Dense layers (input, output and a hidden layer)
        self.fc1 = nn.Linear(self._to_linear, 512)
        self.fc2 = nn.Linear(512, 256)
        self.fc3 = nn.Linear(256, 2)

    def convs(self, x):
        # Convolution --> Batch norm --> Activation func --> Pooling
        x = F.max_pool2d(F.relu(self.bn1(self.conv1(x))), (2, 2))
        x = F.max_pool2d(F.relu(self.bn2(self.conv2(x))), (2, 2))
        x = F.max_pool2d(F.relu(self.bn3(self.conv3(x))), (2, 2))
        x = F.max_pool2d(F.relu(self.bn4(self.conv4(x))), (2, 2))

        # Determine the number of output neurons of the last convolutional layer.
        if self._to_linear is None:
            # This will be the input to the first fully connected layer
            self._to_linear = x[0].shape[0] * x[0].shape[1] * x[0].shape[2]
            print("Final conv layer output is: ", x.shape)
        return x

    def forward(self, x):
        x = self.convs(x)
        # Flatten the convolutional layer output before feeding it in the dense layer
        x = x.view(-1, self._to_linear)

        # Apply the activation function and feed the data in the dense layers
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = F.softmax(self.fc3(x), dim=1)
        return x

def Training():
    device = torch.device("cuda:0")
    CNNModel = Model(channel=3, imageSize=50).to(device)
    print(CNNModel)

    optimizer = optim.Adam(CNNModel.parameters(), lr=0.001)
    lossFunction = nn.MSELoss()

    # Load the training and validation images/labels
    trainDataX = torch.load('C:\\Users\\louis\\OneDrive\\Desktop\\NN\\TrainingImages.pt')
    trainDataY = torch.load('C:\\Users\\louis\\OneDrive\\Desktop\\NN\\TrainingLabels.pt')
    testDataX = torch.load('C:\\Users\\louis\\OneDrive\\Desktop\\NN\\TestingImages.pt')
    testDataY = torch.load('C:\\Users\\louis\\OneDrive\\Desktop\\NN\\TestingLabels.pt')

    batchSize = 128
    epochs = 50
    epoch_loss = np.zeros((epochs+1, 1))  # Used to track total loss for each epoch
    epoch_acc = np.zeros((epochs+1, 1))  # Used to track accuracy for each epoch

    for epoch in tqdm(range(epochs)):
        batch_loss = np.zeros((int(len(trainDataX))+1, 1))  # Used to store the loss for each batch
        for i in range(0, len(trainDataX), batchSize):
            batchX = trainDataX[i:i+batchSize]
            batchY = trainDataY[i:i+batchSize]
            CNNModel.zero_grad()
            output = CNNModel(batchX.to(device))
            loss = lossFunction(output, batchY.to(device))
            loss.backward()
            optimizer.step()
            batch_loss[i, :] = float(loss)  # Store the loss for this batch
        epoch_loss[epoch+1, :] = np.sum(batch_loss)  # Store the total loss for this epoch
        epoch_acc[epoch+1, :] = Testing(testDataX, testDataY, device, CNNModel)  # Store the accuracy for this epoch

    # Plot the training loss and accuracy
    ceFig, ceAxes = plt.subplots()
    ceAxes.set_title("Error")
    ceAxes.grid(True, which='both')
    ceAxes.plot(np.arange(1, epochs+1), epoch_loss[1:epochs+1, 0])
    ceAxes.set_xlabel('Epochs')
    ceAxes.set_ylabel('Error')

    accFig, accAxes = plt.subplots()
    accAxes.set_title("Accuracy")
    accAxes.grid(True, which='both')
    accAxes.plot(np.arange(1, epochs+1), epoch_acc[1:epochs+1, 0])
    accAxes.set_xlabel('Epochs')
    accAxes.set_ylabel('Accuracy')
    plt.show()

def Testing(testDataX, testDataY, device, CNNModel):
    correct = 0
    total = 0
    with torch.no_grad():
        for i in range(len(testDataX)):
            realClass = torch.argmax(testDataY[i]).to(device)
            netOut = CNNModel(testDataX[i].view(-1, 3, 50, 50).to(device))
            predictedClass = torch.argmax(netOut)
            if predictedClass == realClass:
                correct += 1
            total += 1
    return correct/total
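
The accuracy that `Testing` reports is the fraction of validation samples whose highest-scoring output matches the position of the 1 in the one-hot label. A batched version of that calculation can be sketched as below, with NumPy arrays standing in for the model outputs and labels. The function name `accuracy` and the example numbers are illustrative only and do not come from the code above.

```python
import numpy as np

def accuracy(outputs, one_hot_labels):
    """Fraction of rows where the arg-max of the output equals the arg-max of the label."""
    predicted = np.argmax(outputs, axis=1)
    actual = np.argmax(one_hot_labels, axis=1)
    return float(np.mean(predicted == actual))

# Four made-up softmax outputs and their one-hot labels.
outputs = np.array([[0.9, 0.1],
                    [0.4, 0.6],
                    [0.7, 0.3],
                    [0.2, 0.8]])
labels = np.array([[1, 0],
                   [0, 1],
                   [0, 1],
                   [0, 1]])

# Rows 0, 1 and 3 are predicted correctly; row 2 is not.
print(accuracy(outputs, labels))
```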