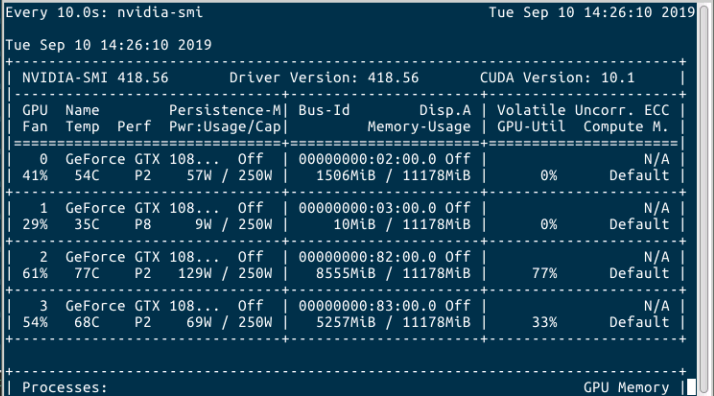

When I was training my model using multi-gpus, I set the batch_size=8, and used two gpus. This setting cannot make the best of my machine(4 GPUs and each with memory of 12G), however, if I set the batch_size bigger or use more GPUs, it would raise exception(GPU out of memory). It seems like that, the first GPU takes more assignment, so when I set the batch_size bigger or used more GPUs, the first GPU was out of memory. How can I fix it?

The image show my GPU’s state. I used GPU 2 and 3.