Hello,

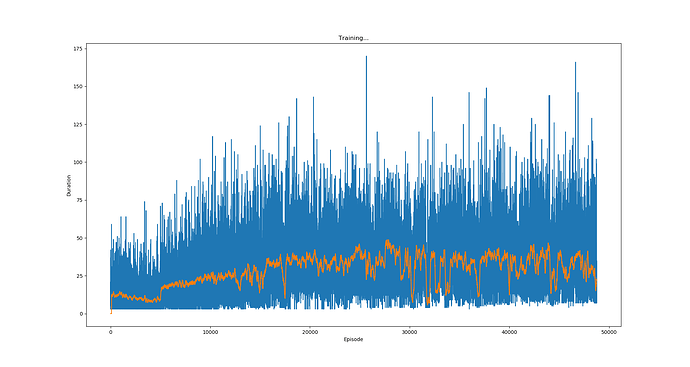

I’m training a DQN agent and have experimented with the hyperparameters a lot. With the best settings I have found so far, it learns a bit until around 10k-20k episodes, then stagnates, and the average top return stays about the same. The settings I have are:

Batch size = 128

gamma = 0.999

eps start = 0.99

eps end = 0.05

eps decay = 100000

target update = 500

Learning rate = 0.0005
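For reference, here is a minimal pure-Python sketch of where these settings usually enter a standard DQN update. It is not the code from this post. `DQNConfig`, `td_targets`, `should_sync_target` and `effective_horizon` are hypothetical names. The sketch assumes one-step targets computed from a separate target network that is hard-copied every `target_update` steps. One thing it makes concrete: `gamma = 0.999` corresponds to an effective horizon of roughly 1 / (1 - gamma) = 1000 steps.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DQNConfig:
    # Values copied from the settings listed above.
    batch_size: int = 128
    gamma: float = 0.999
    eps_start: float = 0.99
    eps_end: float = 0.05
    eps_decay: int = 100_000
    target_update: int = 500
    lr: float = 0.0005


def td_targets(rewards, next_max_q, dones, gamma):
    """One-step DQN targets: r + gamma * max_a' Q_target(s', a'), no bootstrap on terminal transitions."""
    return [r + gamma * q * (1.0 - d) for r, q, d in zip(rewards, next_max_q, dones)]


def should_sync_target(step, target_update):
    """Hypothetical helper: hard-copy online weights into the target net every `target_update` steps."""
    return step > 0 and step % target_update == 0


def effective_horizon(gamma):
    """Rough number of future steps that still carry meaningful weight under discount gamma."""
    return 1.0 / (1.0 - gamma)


cfg = DQNConfig()
print(round(effective_horizon(cfg.gamma)))  # 1000
print(td_targets([1.0, 1.0], [10.0, 10.0], [0.0, 1.0], cfg.gamma))
```

With a horizon this long, the bootstrapped targets depend heavily on the target network's estimates, so how often that network is refreshed (`target_update`) matters as much as the learning rate.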

I’ve tried many settings: decaying epsilon over a longer period, different learning rates, and keeping the exploration rate constant. I realize that the exploration rate is still high at 10k episodes if it decays over 100k, but it is strange that somewhere between 10k and 20k episodes the return suddenly stops improving and the average score starts to fluctuate a lot (see the screenshot above).
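Whether epsilon is really still high at 10k episodes depends on what the decay counter counts. Below is a minimal sketch that assumes the exponential schedule from the PyTorch DQN tutorial: `eps = eps_end + (eps_start - eps_end) * exp(-steps_done / eps_decay)`. In that tutorial, `steps_done` is incremented once per environment step, not once per episode. The value `AVG_STEPS_PER_EPISODE = 50` is a hypothetical placeholder. Substitute your own measured episode length.

```python
import math


def epsilon(steps_done, eps_start=0.99, eps_end=0.05, eps_decay=100_000):
    """Exponential epsilon schedule: starts at eps_start and decays toward eps_end."""
    return eps_end + (eps_start - eps_end) * math.exp(-steps_done / eps_decay)


AVG_STEPS_PER_EPISODE = 50  # hypothetical; replace with your measured average episode length

for episodes in (1_000, 10_000, 20_000):
    per_episode = epsilon(episodes)  # if the counter advanced once per episode
    per_step = epsilon(episodes * AVG_STEPS_PER_EPISODE)  # if it advanced once per step
    print(f"{episodes:>6} episodes: eps={per_episode:.3f} (per-episode), {per_step:.3f} (per-step)")
```

Under these assumptions, counting per episode leaves epsilon at about 0.90 after 10k episodes. Counting per step at 50 steps per episode drops it to about 0.06 by the same point, which is close to `eps end`.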

Any ideas?

So it seems to me that it starts converging to something very quickly, even though the exploration rate is still high.
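A genuine plateau can look similar to noisy returns. One way to separate them is to track both the mean and the spread of returns over a sliding window of recent episodes. The sketch below uses only the standard library. `rolling_stats`, the window size and the toy returns are illustrative choices, not part of the original setup or results.

```python
from collections import deque
from statistics import fmean, pstdev


def rolling_stats(returns, window=100):
    """Return (mean, population std) of the last `window` episode returns, one pair per episode."""
    buf = deque(maxlen=window)
    stats = []
    for r in returns:
        buf.append(r)
        stats.append((fmean(buf), pstdev(buf)))
    return stats


# Toy data (made up): returns rise, then oscillate around a fixed level.
returns = [0, 1, 2, 3, 4, 2, 6, 2, 6, 2, 6]
for i, (m, s) in enumerate(rolling_stats(returns, window=4)):
    print(i, round(m, 2), round(s, 2))
```

Suppose the rolling mean stays flat while the rolling standard deviation stays large. That matches the pattern described above: the agent has stopped improving on average, but individual episodes still vary widely.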